Sakana AI Raises ¥20B Series B to Fuel Japan-Focused LLMs

Tokyo-based Sakana AI has closed a ¥20 billion (roughly $135 million) Series B round, raising its post-money valuation. The company plans to use the capital to accelerate research, model development, and commercial expansion for Japan-focused large language models (LLMs) optimized for local data, linguistic nuance, and cultural context.

What does Sakana AI’s ¥20B Series B mean for Japan’s sovereign AI?

Sakana’s new funding amplifies a broader trend: while global cloud and AI giants compete on scale, an ecosystem of specialist startups is prioritizing localization, data sovereignty, and efficiency. For Japan, that means more AI models and solutions engineered to perform well with smaller datasets, integrate Japanese cultural context, and meet local enterprise and regulatory requirements.

Why localized LLMs matter

Language, culture, and performance with small datasets

Large multilingual LLMs are powerful, but they can struggle with local idioms, regulatory nuance, niche domain language, and areas where high-quality data is sparse. Sakana AI's approach focuses on model architectures and training pipelines that extract more value from smaller, higher-quality Japanese datasets. For customers who need tailored behavior rather than one-size-fits-all capabilities, that can make the models more accurate and more cost-effective.

High-quality data and careful curation are essential to this strategy: teams that invest in data engineering and domain labeling can get outsized performance from compact models. For more on why data quality matters for AI model performance, see our deep dive: The Role of High-Quality Data in Advancing AI Models.

Sovereignty, security, and enterprise trust

Public-sector and regulated private-sector buyers increasingly prioritize sovereign AI: models and systems that reflect national values, local privacy requirements, and onshore data controls. Sakana's pitch centers on building Japan-centric models that enterprises and government customers can adopt with less dependence on foreign-hosted models or opaque supply chains.

How Sakana plans to use the capital

The company says it will allocate the Series B proceeds across three core areas:

- R&D and next-generation LLM development tailored to Japanese language and culture

- Expanding engineering and product teams focused on inference efficiency and deployment

- Building sales, distribution, and enterprise support to scale commercial adoption in finance, manufacturing, and government

Optimizing inference and deployment pipelines will be key to keeping costs competitive and enabling real-time applications. Techniques such as model distillation, compiler and runtime tuning, and key-value (KV) cache optimizations can substantially lower latency and cost. We've covered these topics in our analysis of inference efficiency: AI Inference Optimization: Compiler Tuning for GPUs.
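The sketch below illustrates the KV cache idea with a toy single-head attention decoder in Python. It assumes nothing about Sakana's actual stack. The class `KVCache`, the helpers `matvec`, `attend`, and `decode_without_cache`, and the tiny weight matrices are all hypothetical and chosen only for illustration.

Without a cache, every decoding step re-projects keys and values for the entire prefix, so that work grows quadratically with sequence length. With a cache, each token's key and value are computed once and reused, and the attention outputs stay the same.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def attend(q: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention of one query over all keys/values."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    m = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


class KVCache:
    """Hypothetical toy decoder that stores each token's K/V projections once."""

    def __init__(self, wq: Matrix, wk: Matrix, wv: Matrix):
        self.wq, self.wk, self.wv = wq, wk, wv
        self.keys: List[Vector] = []
        self.values: List[Vector] = []
        self.kv_projections = 0  # number of key/value projection pairs computed

    def step(self, x: Vector) -> Vector:
        # Only the newest token is projected; earlier K/V come from the cache.
        self.keys.append(matvec(self.wk, x))
        self.values.append(matvec(self.wv, x))
        self.kv_projections += 1
        return attend(matvec(self.wq, x), self.keys, self.values)


def decode_without_cache(
    wq: Matrix, wk: Matrix, wv: Matrix, tokens: List[Vector]
) -> Tuple[List[Vector], int]:
    """Baseline: recompute K/V for the whole prefix at every decoding step."""
    outputs: List[Vector] = []
    projections = 0
    for t in range(1, len(tokens) + 1):
        prefix = tokens[:t]
        keys = [matvec(wk, x) for x in prefix]
        values = [matvec(wv, x) for x in prefix]
        projections += t
        outputs.append(attend(matvec(wq, tokens[t - 1]), keys, values))
    return outputs, projections


# Usage with made-up 2-dimensional weights and four token embeddings.
wq = [[1.0, 0.0], [0.0, 1.0]]
wk = [[0.5, 0.1], [0.2, 0.3]]
wv = [[1.0, 2.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]

cache = KVCache(wq, wk, wv)
cached_out = [cache.step(x) for x in tokens]
baseline_out, baseline_projections = decode_without_cache(wq, wk, wv, tokens)
print(cache.kv_projections, baseline_projections)  # prints "4 10"
```

In this four-token run, the cached decoder computes 4 key/value projection pairs. The recompute-everything baseline computes 10 (1 + 2 + 3 + 4), and both produce the same outputs. Real inference engines layer further optimizations, such as cache memory management and quantization, on top of this basic idea.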

Who’s backing Sakana and why investor mix matters

The round included a mix of domestic financial institutions and global venture firms. That combination underscores two strategic signals: local banks and corporate investors can help with enterprise adoption and regulatory navigation in Japan, while global funds validate international growth potential and provide access to cross-border networks.

What industries Sakana is targeting

Sakana has already shown traction with Japanese enterprises and plans to expand its enterprise offerings beyond finance into industrial, manufacturing, and government sectors. The team is also exploring opportunities in defense and intelligence, areas that often require additional security, compliance, and sometimes government clearances.

Sector use cases include:

- Finance: localized compliance monitoring, risk analytics, and customer-facing assistants tailored to Japanese regulatory language and processes

- Manufacturing: knowledge capture, maintenance playbooks, and multilingual documentation tools that handle technical Japanese accurately

- Government: secure onshore LLM deployments for document summarization, translation, and citizen services

How this fits into the broader AI landscape

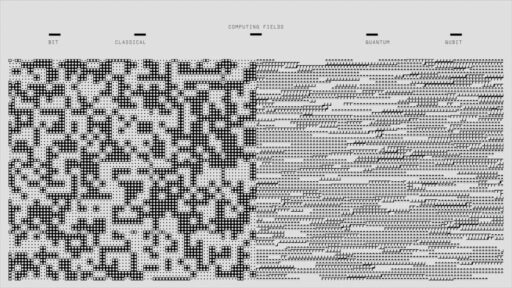

Sakana's strategy exemplifies a growing divergence in the market: one track emphasizes ever-larger, general-purpose models, while the other prioritizes domain specialization, localization, and efficiency. The two tracks can still complement each other, since specialized providers can partner with or integrate larger models where appropriate, then refine outputs for local contexts.

For a perspective on why the industry must look beyond pure scale, see our feature: The Future of AI: Beyond Scaling Large Language Models. That piece highlights how companies that focus on productization, efficient inference, and real-world workflows can capture enterprise value even in a market dominated by major model providers.

What investors and enterprises should watch

Key signals to monitor as Sakana scales:

- Model performance on real-world Japanese benchmarks and domain-specific tasks

- Cost-to-serve improvements driven by inference engineering and model optimization

- Customer success stories that demonstrate measurable ROI in regulated industries

- Partnerships with systems integrators and cloud providers that enable secure, scalable deployments

Risks and challenges

Specialist LLM vendors face several headwinds:

- Competition from global models improving their localization layers and developer tooling

- The complexity and cost of certifying models for sensitive government or defense workloads

- Ongoing regulatory scrutiny around data use, privacy, and model provenance

Navigating these risks requires strong data governance, transparent model evaluation, and partnerships that combine technical credibility with regulatory compliance.

What this means for the AI ecosystem in Japan

Sakana's Series B is a sign that capital continues to flow to startups building regionally tailored AI solutions. For Japan, that could mean a richer set of options for enterprises seeking local expertise, better alignment between models and cultural nuances, and more pathways for secure onshore AI deployments. It also raises important questions about infrastructure and energy as AI workloads grow. We've explored these topics in our reporting on data center demand and the environmental implications of AI: Data Center Energy Demand: How AI Centers Reshape Power Use.

Bottom line

Sakana AI’s funding round reinforces a broader market shift: value is emerging not just from model scale but from localization, data quality, and deployment efficiency. By focusing on Japan-specific LLMs, onshore trust, and enterprise workflows, Sakana aims to capture opportunities that global, generalist models may not address as effectively.

Next steps and what to watch

- Product launches and enterprise pilots across finance and manufacturing

- Demonstrable inference cost reductions and latency improvements

- Strategic partnerships and potential M&A activity that accelerate global expansion

If you follow AI investments, enterprise AI adoption, or sovereign AI strategies, Sakana’s trajectory will be an important case study in how regional specialists can scale alongside global giants.

Take action

Want to keep up with Japan’s AI ecosystem and enterprise-focused model innovation? Subscribe to Artificial Intel News for weekly analysis, or explore our reporting archives to compare regional AI approaches and infrastructure implications.