Runway AI Video Generation: What the $315M Series E Means for World Models

Runway has announced a $315 million Series E financing that values the company at roughly $5.3 billion, a move that sets the stage for aggressive expansion of its video-generation capabilities and world-model research. Runway plans to use the capital to pre-train next-generation world models, scale compute and infrastructure, and accelerate productization across media, gaming, robotics, and other industries. In this report, we examine what the funding means for the technology, the market, and the practical risks and opportunities for enterprises and creators.

Why Runway’s raise matters for AI video generation

The capital infusion is more than a headline number: it signals investor conviction in generative video and in the importance of world models, internal AI representations that simulate environments to anticipate future states and plan actions. Runway’s work has concentrated on physics-aware approaches to video generation, which help models maintain consistency in motion, object interactions, and scene composition. By investing in world-model pre-training, Runway aims to move beyond short-form, single-shot clips and deliver multi-shot, long-form, and interactive outputs that stay coherent over time.

Gen 4.5: What it brings to creators and developers

Runway’s latest-generation model, Gen 4.5, introduced several capabilities that have helped the company gain traction with both creators and technologists. Key features include:

- High-definition text-to-video generation with native audio support.

- Long-form and multi-shot generation to create coherent sequences across scenes.

- Character consistency mechanisms to preserve identity and appearance over time.

- Advanced editing tools that enable frame-level refinement and targeted changes.

These capabilities move text-to-video closer to production workflows in film, advertising, and interactive media. For studios and agencies, the ability to generate longer, higher-fidelity sequences with consistent characters can reduce manual post-production work and enable iterative creative workflows that were previously impractical.

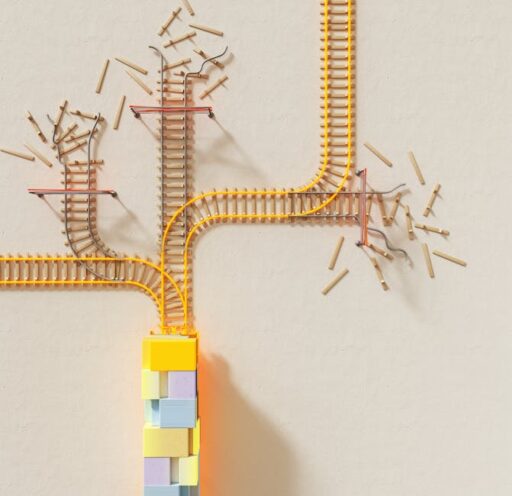

How do world models work, and why do they matter?

World models are AI systems that build internal representations of environments so they can reason about dynamics, plan future events, and simulate outcomes. In practice, they combine spatial, temporal, and physical priors with learned generative components. For video, this means the model does not merely stitch frames together; it captures how objects move, how lighting evolves over time, and how actions lead to consequences. That deeper representation is essential for scaling from single-shot clips to multi-shot narratives, consistent character behavior, and agent-driven simulations used in gaming and robotics.
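
To make the idea concrete, here is a deliberately tiny sketch in Python. It is not how Runway or any production video model works; those learn neural representations from large video corpora. Instead, it assumes a toy 1-D corridor environment and a hypothetical `TabularWorldModel` class that records observed transitions, predicts the next state for a given action, chains predictions into an imagined rollout, and searches those rollouts to plan a route to a goal. The three steps (learn dynamics, simulate forward, plan) mirror the paragraph above at a vastly smaller scale.

```python
from collections import Counter, defaultdict
from itertools import product


class TabularWorldModel:
    """Toy world model: counts observed (state, action) -> next_state transitions."""

    def __init__(self):
        # counts[(state, action)] maps each observed next state to how often it occurred
        self.counts = defaultdict(Counter)

    def observe(self, state, action, next_state):
        """Learn from one real transition."""
        self.counts[(state, action)][next_state] += 1

    def predict(self, state, action):
        """Most frequently observed next state, or None if this (state, action) was never seen."""
        seen = self.counts.get((state, action))
        if not seen:
            return None
        return seen.most_common(1)[0][0]

    def rollout(self, state, actions):
        """Imagine a trajectory by chaining predictions, without touching the real environment."""
        trajectory = [state]
        for action in actions:
            state = self.predict(state, action)
            if state is None:
                break  # no experience for this step; stop imagining
            trajectory.append(state)
        return trajectory

    def plan(self, start, goal, actions, horizon):
        """Shortest imagined action sequence (up to `horizon` steps) that reaches `goal`."""
        for length in range(1, horizon + 1):
            for seq in product(actions, repeat=length):
                imagined = self.rollout(start, seq)
                if len(imagined) == length + 1 and imagined[-1] == goal:
                    return list(seq)
        return None


def corridor_step(state, action, size=5):
    """Ground-truth environment (hypothetical): a 1-D corridor whose walls clamp movement."""
    return min(max(state + action, 0), size - 1)


# Collect experience from the real environment, then reason only inside the model.
model = TabularWorldModel()
for s in range(5):
    for a in (-1, 1):
        model.observe(s, a, corridor_step(s, a))

print(model.rollout(0, [1, 1, -1]))                  # [0, 1, 2, 1]
print(model.plan(0, 3, actions=(-1, 1), horizon=4))  # [1, 1, 1]
```

Because planning happens entirely inside the learned model, the agent never has to try failing action sequences in the real environment. That is the practical payoff of a world model, and also its weakness: any error in the learned dynamics propagates through every imagined step.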

What are world models?

A world model is an AI that learns an internal simulation of an environment so it can predict future states, plan actions, and generate coherent long-term outputs across time and space.

Where adoption is accelerating: media, gaming, robotics, and beyond

Runway built an early customer base in media, entertainment, and advertising. With Gen 4.5 and stronger world-model capabilities, the company is positioning the technology for adjacent industries:

- Entertainment and advertising: faster iterations and lower production costs for commercials, concept reels, and previsualization.

- Gaming: procedural content generation, cinematics, and NPC behaviors that scale with developer input.

- Robotics: simulated training environments and motion planning that benefit from physics-aware video models.

- Healthcare and scientific visualization: simulated scenarios for medical training, procedure walkthroughs, and complex visual explanations.

These use cases show how a single underlying capability, consistent long-form visual simulation, could unlock new product categories beyond consumer media.

Is Runway keeping up with infrastructure demands?

Generative video and world-model pre-training are compute intensive. Runway has expanded infrastructure partnerships to increase capacity and lower latency for large-scale training and serving. Strategic compute agreements and investments that scale GPU fleets, storage, and mixed-precision pipelines are a prerequisite for commercial viability. As a practical matter, robust infrastructure helps reassure enterprise customers that models can be trained, fine-tuned, and served reliably at scale.

For readers tracking the compute and investment landscape, the growing ties between AI startups and cloud/compute providers have been a recurring theme, including major investments aimed at scaling data-center capacity for AI workloads.

Competition and the broader ecosystem

Runway operates in a crowded field of labs pursuing high-fidelity video synthesis, multimodal reasoning, and world models. Competitive differentiation will hinge on a few factors:

- Model quality and benchmarks: demonstrable gains in fidelity and temporal coherence on recognized evaluations.

- Tooling and editing workflows: how easily creators can integrate generated content into existing pipelines.

- Vertical integrations and partnerships: tie-ins with media platforms, game engines, and robotics stacks.

- Safety, provenance, and content moderation: mechanisms to prevent misuse and to label generated media.

Runway’s emphasis on character consistency, native audio, and multi-shot generation addresses several core pain points; however, rivals continue iterating rapidly. Organizations evaluating vendors should benchmark models on end-to-end workflows rather than single-shot sample outputs.

Ethics, safety, and content provenance

As generative video quality improves, ethical questions escalate. Deepfakes, non-consensual imagery, and synthetic media used for deception are real risks. Companies and platforms that host or distribute generated video must adopt technical and policy safeguards: robust watermarking, provenance metadata, content moderation pipelines, and transparent user agreements. The industry continues to debate optimal combinations of regulation, platform policy, and technical mitigation.
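
Provenance metadata can be illustrated with a minimal sketch, assuming a hypothetical in-house scheme rather than any vendor's or standard's actual format: hash the generated media, record how it was produced, and sign the record so tampering is detectable. The function names, field names, and the shared HMAC key are illustrative assumptions; industry standards such as C2PA define richer manifests signed with public-key certificates.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret for illustration only; production systems use
# asymmetric signatures with managed keys so verifiers never hold the signing key.
SIGNING_KEY = b"demo-signing-key"


def _sign(record: dict) -> str:
    """HMAC-SHA256 over a canonical (sorted-key) JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(media: bytes, generator: str, created_at: str) -> dict:
    """Bind a hash of the media bytes to basic generation metadata, then sign it."""
    record = {
        "content_sha256": hashlib.sha256(media).hexdigest(),
        "generator": generator,
        "created_at": created_at,
        "synthetic": True,
    }
    return {**record, "signature": _sign(record)}


def verify_manifest(media: bytes, manifest: dict) -> bool:
    """True only if the manifest is untampered and the media still matches its hash."""
    record = {k: v for k, v in manifest.items() if k != "signature"}
    if not hmac.compare_digest(_sign(record), manifest.get("signature", "")):
        return False  # metadata was edited after signing
    return record.get("content_sha256") == hashlib.sha256(media).hexdigest()


clip = b"placeholder bytes standing in for an encoded video"
manifest = make_manifest(clip, generator="example-video-model", created_at="2025-01-01T00:00:00Z")
print(verify_manifest(clip, manifest))                          # True
print(verify_manifest(clip + b"!", manifest))                   # False: media changed
print(verify_manifest(clip, {**manifest, "synthetic": False}))  # False: label tampered
```

A hash-based manifest only travels with the file if platforms preserve it, and any re-encode changes the hash. That is one reason provenance metadata is usually paired with watermarks embedded in the pixels or audio themselves.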

For further reading on the responsibility around synthetic media, see reporting on nonconsensual deepfakes and platform duties to curb abuse.

Operational challenges and technical bottlenecks

Several constraints temper rapid commercial adoption:

- Compute costs: Training world models at scale demands GPU clusters and optimized pipelines.

- Data quality: High-fidelity video datasets with diversity, annotations, and long-form continuity are scarce and expensive to curate.

- Evaluation: Measuring temporal coherence and semantic correctness across long sequences requires new metrics.

- Regulatory risk: Privacy and intellectual property questions around training data can lead to legal exposure.
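
As an illustration of why evaluation is hard, consider a naive temporal-coherence check, sketched in Python under simplifying assumptions: frames are small grayscale arrays given as nested lists, and the helper names `frame_diff` and `coherence_report` are hypothetical. It flags transitions whose mean pixel change jumps well above the clip's median, a crude proxy for flicker or sudden identity swaps.

```python
from statistics import median

Frame = list[list[float]]  # one grayscale frame; pixel values in [0, 1]


def frame_diff(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    total, count = 0.0, 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    if count == 0:
        raise ValueError("frames must be non-empty")
    return total / count


def coherence_report(frames: list[Frame], spike_factor: float = 3.0):
    """Per-transition differences, plus indices i where the jump from frame i to i+1
    exceeds spike_factor times the clip's median difference."""
    if len(frames) < 2:
        return [], []
    diffs = [frame_diff(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
    baseline = max(median(diffs), 1e-6)  # avoid a zero threshold on perfectly static clips
    spikes = [i for i, d in enumerate(diffs) if d > spike_factor * baseline]
    return diffs, spikes


# A 2x2 clip that brightens slowly, then jumps abruptly between frames 2 and 3.
frames = [[[v, v], [v, v]] for v in (0.10, 0.12, 0.14, 0.90, 0.92)]
diffs, spikes = coherence_report(frames)
print(spikes)  # [2]
```

The limitation is the point: a fast camera pan or a deliberate scene cut would also be flagged, while a character whose face drifts slowly over many frames would pass. Telling intended change apart from incoherence needs semantic signals such as tracked identities or learned features, which is why long-form video calls for new metrics.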

Addressing these bottlenecks often requires vertical integration: combining model research, dataset curation, infrastructure partnerships, and product design. Runway intends to use its new funding to accelerate exactly this kind of integration.

Practical guidance: How enterprises should approach Runway-style video models

Organizations experimenting with generative video and world models should adopt a staged approach:

- Pilot with clearly defined ROI: Start with proof-of-concept projects with measurable business outcomes, such as marketing A/B tests or in-game cinematic production.

- Focus on integration: Ensure generated assets fit into existing editing, asset management, and VFX pipelines.

- Assess safety and provenance: Require watermarking and traceability for any synthetic content used in customer-facing contexts.

- Plan for compute: Budget for training and inference costs, or negotiate managed compute agreements with vendors.
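
Budgeting for compute is mostly arithmetic, and a small estimator keeps the assumptions explicit. The sketch below is hypothetical: the `ComputeAssumptions` fields, the retry factor, and every number in the example are placeholders, not Runway pricing or measured figures. It separates a one-off fine-tuning cost from recurring inference cost, since the two may be negotiated and approved differently.

```python
from dataclasses import dataclass


@dataclass
class ComputeAssumptions:
    # Every value is a hypothetical placeholder: substitute vendor quotes and measured figures.
    gpu_hour_rate_usd: float       # price per GPU-hour
    finetune_gpu_hours: float      # one-off fine-tuning or training job
    clips_per_month: int           # expected generation volume
    gpu_seconds_per_clip: float    # measured inference time per generated clip
    retry_factor: float = 1.0      # candidates generated per clip actually kept


def estimate_costs(a: ComputeAssumptions) -> dict:
    """Split a pilot budget into a one-off training cost and a recurring monthly inference cost."""
    training = a.finetune_gpu_hours * a.gpu_hour_rate_usd
    inference_gpu_hours = a.clips_per_month * a.gpu_seconds_per_clip * a.retry_factor / 3600
    inference = inference_gpu_hours * a.gpu_hour_rate_usd
    return {
        "one_off_training_usd": round(training, 2),
        "monthly_inference_usd": round(inference, 2),
        "first_month_total_usd": round(training + inference, 2),
    }


pilot = ComputeAssumptions(
    gpu_hour_rate_usd=2.50,
    finetune_gpu_hours=400,
    clips_per_month=3000,
    gpu_seconds_per_clip=120,
    retry_factor=3.0,
)
print(estimate_costs(pilot))
# {'one_off_training_usd': 1000.0, 'monthly_inference_usd': 750.0, 'first_month_total_usd': 1750.0}
```

The retry factor matters more than it looks: if creators typically generate several candidates for every clip they keep, inference spend scales with that multiple.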

Enterprises should also monitor adjacent developments in the industry that enable scaling and reduce risk. For instance, recent capital flows and infrastructure deals have reshaped how startups access cloud GPUs and specialized hardware.


What to watch next

- How widely Gen 4.5 is adopted in production pipelines across studios and game developers.

- Progress on robust watermarking and provenance standards for generated video.

- New benchmarks that evaluate long-form temporal coherence and character consistency.

- Commercial partnerships that place generative video into enterprise SaaS stacks or game engines.

Runway’s planned hiring push across research, engineering, and go-to-market teams will be a bellwether for how fast the company intends to move from research to enterprise deployments.

Conclusion: Runway’s $315M is a bet on scalable, physics-aware visual AI

The Series E financing gives Runway substantial financial runway to push world-model research into practical products. If the company can translate pre-training advances into stable, auditable, and high-quality outputs, generative video will migrate from novelty demos to core production tools across media, gaming, and simulation-driven industries. At the same time, technical and ethical challenges remain significant, and success will depend on product integrations, responsible deployment, and sustainable infrastructure partnerships.

Further reading

- Higgsfield Raises $130M: AI Video Generation Boom in 2026 — context on the broader video-generation market.

- Nvidia Investment in CoreWeave: $2B to Scale AI Data Centers — analysis of compute investments that support generative startups.

- Stopping Nonconsensual Deepfakes: Platforms’ Duty Now — on ethical and policy frameworks for synthetic media.

Call to action

Curious how world-model-driven video could impact your organization? Contact our editorial team to request an enterprise briefing or to discuss a demo with vendors building production-grade generative video tools. Stay informed by subscribing for weekly analysis of AI infrastructure, models, and product implications.