Prism: An AI Scientific Workspace to Accelerate Research with GPT-5.2

OpenAI has introduced Prism, a free AI scientific workspace available to anyone with a ChatGPT account. Designed as an AI-enhanced word processor and research companion, Prism pairs powerful language and multimodal reasoning with familiar academic workflows—LaTeX typesetting, diagram building, and project-aware discussion windows—to help researchers draft, review, and organize scientific work more efficiently. Importantly, Prism is built to augment human scientists rather than replace them, emphasizing verification, reproducibility, and human oversight.

What is Prism and how does it help researchers?

In short: Prism is a project-centric workspace that integrates GPT-5.2 capabilities directly into the research writing pipeline. It offers an environment where researchers can:

- Draft and revise manuscripts with AI-assisted prose editing grounded in the context of a research project.

- Search and summarize prior literature and assess claims with model-assisted checks.

- Compose technical documents using LaTeX with improved tooling and visual diagram assembly from whiteboard-style inputs.

Because Prism connects the model to the entire project context, responses are tailored to the current manuscript, figures, and notes, which reduces repetition and improves coherence across the research lifecycle.

Core features of the workspace

Seamless LaTeX integration

Prism integrates LaTeX editing and compilation directly into the workspace, enabling researchers to write equations, format citations, and prepare typeset-ready documents without switching tools. This integration speeds editing cycles and reduces friction when moving between draft text and final output.

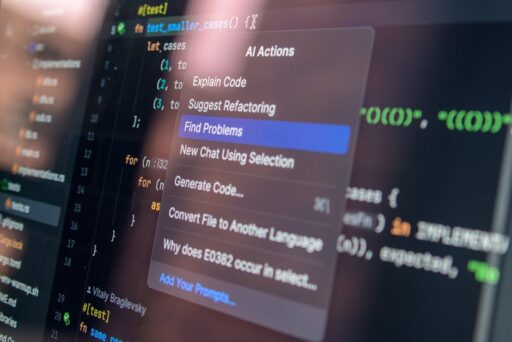
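As a rough illustration of the kind of source an integrated LaTeX editor would compile, here is a minimal document with a numbered equation, a citation, and a figure. This is ordinary LaTeX, not anything specific to Prism. The file name `diagram.pdf`, the labels, and the bibliography entry are placeholders chosen for this sketch.

```latex
\documentclass{article}
\usepackage{amsmath}   % equation environments and \eqref
\usepackage{graphicx}  % \includegraphics

\begin{document}

The sample mean of $n$ observations $x_1, \dots, x_n$ is
\begin{equation}
  \bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i .
  \label{eq:mean}
\end{equation}
Equation~\eqref{eq:mean} is illustrated in Figure~\ref{fig:diagram};
see~\cite{placeholder-key} for background.

\begin{figure}[ht]
  \centering
  % Hypothetical file name; point this at a real figure before compiling.
  \includegraphics[width=0.6\linewidth]{diagram.pdf}
  \caption{Placeholder caption.}
  \label{fig:diagram}
\end{figure}

\begin{thebibliography}{9}
  % Placeholder entry; replace with a verified reference.
  \bibitem{placeholder-key} Placeholder reference.
\end{thebibliography}

\end{document}
```

Compiling twice resolves the cross-references and the citation number.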

Project-aware Chat with GPT-5.2

When researchers open a ChatGPT window inside Prism, the assistant can access the project context—draft sections, references, and figure captions—so answers are relevant to the manuscript at hand. Because the assistant is aware of the surrounding material, it can propose targeted rewrites, suggest structural edits, or point to missing references.

Visual diagram assembly

Prism leverages GPT-5.2’s visual and multimodal strengths to let users convert sketches or whiteboard drawings into clean diagrams suitable for papers and presentations. That capability addresses a frequent pain point in academic workflows: turning conceptual sketches into publication-quality figures.

Claim assessment and reproducibility aids

Prism provides model-assisted checks that help identify unsupported claims and suggest how to make assertions replicable—by recommending experiments, statistical checks, or specific citations to verify statements. The tool emphasizes traceability: every suggested change can be linked back to project context or source material for easier verification by human collaborators.

How to use Prism effectively: practical steps

Adopting Prism successfully requires pairing its suggestions with rigorous scientific practice. A simple workflow looks like this:

- Create a project and upload your draft, references, and raw data or diagrams.

- Use the project-aware chat to ask for targeted edits: clarity, concision, or alternative phrasings for specific sections.

- Run claim-assessment prompts to surface unsupported statements, then annotate or add citations where needed.

- Convert sketches into diagrams and integrate LaTeX-rendered equations directly into the draft.

- Export a compiled manuscript and share with coauthors for standard peer review and reproducibility checks.

This sequence preserves human control while using AI to speed repetitive or time-consuming tasks.

What are the benefits and limitations of Prism?

Benefits

- Faster drafting and iteration: AI-assisted edits reduce time spent polishing prose and structuring sections.

- Improved reproducibility: built-in context linking and exportable notes help capture the rationale behind edits and hypotheses.

- Integrated tooling: combining LaTeX, diagram creation, and AI feedback in one workspace reduces context switching.

Limitations and necessary safeguards

Prism is not an autonomous researcher. It can suggest proofs, hypotheses, or citations, but those outputs require careful human validation. Known limitations include:

- Hallucinated citations or overstated claims—model recommendations must be checked against primary sources.

- Domain-specific nuance—models may produce plausible-sounding text that lacks technical correctness.

- Data governance concerns—sensitive or embargoed data should be handled under existing institutional policies.
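One small part of the citation check can be automated outside any particular tool. The sketch below is plain Python and does not use a Prism API. It lists citation keys used in a LaTeX draft that have no matching entry in a BibTeX file. The function names and regular expressions are assumptions made for this example, and the check covers only missing keys. Confirming that a listed reference exists and supports the claim still requires reading the primary source.

```python
import re
from typing import List, Set

# Matches \cite, \citep, \citet, ... with optional [..] arguments.
CITE_RE = re.compile(r"\\cite[a-zA-Z]*\*?(?:\[[^\]]*\])*\{([^}]*)\}")
# Matches the key of a BibTeX entry such as "@article{smith2020,".
BIB_KEY_RE = re.compile(r"@\w+\s*\{\s*([^,\s]+)\s*,")


def cited_keys(tex_source: str) -> Set[str]:
    """Collect every citation key used in the LaTeX source."""
    keys: Set[str] = set()
    for group in CITE_RE.findall(tex_source):
        # A single \cite{a, b} may carry several comma-separated keys.
        keys.update(k.strip() for k in group.split(",") if k.strip())
    return keys


def bib_keys(bib_source: str) -> Set[str]:
    """Collect every entry key defined in the BibTeX source."""
    return set(BIB_KEY_RE.findall(bib_source))


def unresolved_citations(tex_source: str, bib_source: str) -> List[str]:
    """Return cited keys that have no bibliography entry, sorted."""
    return sorted(cited_keys(tex_source) - bib_keys(bib_source))


if __name__ == "__main__":
    # Illustrative inputs only; the keys are made up for this sketch.
    tex = r"See \cite{smith2020, lee2021} and \citep[see][]{ghost2023}."
    bib = "@article{smith2020,\n  title={A}\n}\n@inproceedings{lee2021,\n  title={B}\n}\n"
    print(unresolved_citations(tex, bib))
```

In the sample input, `ghost2023` is cited but never defined, so it is the only key reported. A key that does resolve is not thereby verified; it only means the bibliography has an entry to check.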

Security and oversight

As teams adopt project-aware AI tools, controls around agentic behavior, access management, and audit trails become essential. Researchers should combine Prism with lab-level governance, reproducibility protocols, and manual verification workflows to prevent misuse or inadvertent disclosure. For broader context on preventing rogue agents and securing AI-driven workflows, see our analysis of Agentic AI Security.

How does Prism relate to recent advances in AI reasoning?

Prism leverages GPT-5.2’s advanced reasoning and multimodal capabilities. For readers tracking model releases and technical evolution, see our coverage of the GPT-5.2 release and its implications for scientific workflows. Progress in machine-aided mathematical reasoning—where models have assisted in literature review and in verifying or exploring proofs—offers a preview of how human-AI research collaboration can scale. For examples of how models contributed to mathematical progress and formal verification, consult our piece on AI mathematical reasoning advances.

What should researchers ask before adopting Prism?

Researchers should evaluate Prism against practical concerns and institutional policies. Key questions to consider:

- How will Prism’s outputs be archived, cited, and attributed in manuscripts?

- What verification steps are required before accepting a model-suggested claim?

- How are data privacy and IP managed when project files are hosted in the workspace?

- What access controls and collaborator permissions are available for multi-author projects?

Answering these questions early helps integrate Prism into existing lab and publication practices while minimizing downstream friction.

Best practices for human-AI collaboration in research

To benefit from Prism and similar AI research tools while managing risk, teams should adopt concrete practices:

- Annotate every model-suggested change with source checks and human sign-off.

- Keep raw data and analysis scripts in version-controlled repositories outside the workspace when required by policy.

- Use AI recommendations as starting points, not definitive results—especially for experimental design and statistical interpretation.

- Train lab members on common model failure modes (e.g., hallucinations, overconfidence) so they can spot mistakes quickly.
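The first practice above, annotating each model-suggested change with source checks and a human sign-off, can be kept as a simple log alongside the manuscript. The following is a minimal sketch in plain Python, not a Prism feature. The class name `SuggestedChange`, its fields, and the rule that sign-off requires at least one recorded source check are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class SuggestedChange:
    """One model-suggested edit and the human checks applied to it."""
    location: str                 # e.g. "Section 2, paragraph 3"
    summary: str                  # what the assistant proposed
    sources_checked: List[str] = field(default_factory=list)
    approved_by: Optional[str] = None
    approved_on: Optional[date] = None

    def sign_off(self, reviewer: str, on: date) -> None:
        """Record human approval; refuse if no source check was logged."""
        if not self.sources_checked:
            raise ValueError("record at least one source check before sign-off")
        self.approved_by = reviewer
        self.approved_on = on

    @property
    def is_verified(self) -> bool:
        """True once a reviewer has signed off on the change."""
        return self.approved_by is not None


def pending(changes: List[SuggestedChange]) -> List[SuggestedChange]:
    """Return the changes that still lack a human sign-off."""
    return [c for c in changes if not c.is_verified]


if __name__ == "__main__":
    # Illustrative entry only; the content is made up for this sketch.
    change = SuggestedChange("Section 2, paragraph 3", "Soften causal claim")
    change.sources_checked.append("Compared wording against the cited study")
    change.sign_off("A. Reviewer", date.today())
    print(len(pending([change])))
```

Requiring a logged source check before sign-off is a design choice for this sketch. A lab could equally enforce it through review policy instead of code.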

Practical scenarios: where Prism adds value

Prism is particularly useful in these scenarios:

- Early drafting: converting bullet outlines into coherent sections and suggesting introductions or conclusions.

- Cross-disciplinary projects: summarizing literature in auxiliary fields to make papers accessible to broader audiences.

- Proof exploration: helping structure formal arguments and locating relevant prior work for follow-up verification.

- Layout and figures: turning whiteboard diagrams into publication-ready images and integrating them into LaTeX documents.

Implications for research culture and publishing

Tools like Prism can shift how labs operate: faster iteration cycles, more polished first drafts, and broader use of reproducibility scaffolding. Publishers and reviewers will need to adapt guidelines to capture AI contributions, require clear attribution for model-assisted content, and enforce replicability standards for experiments suggested or analyzed with AI support.

Conclusion: Prism as an accelerator, not a replacement

Prism packages advanced model capabilities into a focused research workspace, reducing friction across writing, diagramming, and context-aware discussions. By integrating LaTeX, multimodal diagram tools, and GPT-5.2 reasoning within a single environment, Prism can speed many routine tasks that slow scientific progress. However, the value of the tool depends on human oversight: verification, reproducibility practices, and ethical governance remain essential.

If you lead or participate in research projects, evaluate how Prism can fit into your lab’s workflows, pilot it on low-risk drafts, and pair model suggestions with formal verification steps. For deeper technical context on the models powering these features, read our coverage of GPT-5.2 and the work on AI mathematical reasoning.

Get started with Prism

Try Prism with a sample draft to explore how project-aware AI can speed your workflow. Start by importing a short manuscript, enable project-aware chat, ask the assistant to summarize related work, and iterate with LaTeX-rendered edits. Share early drafts with collaborators and document every AI-suggested change for reproducibility.

Ready to streamline your research writing? Create a Prism project, experiment with model-assisted edits, and share your experience with peers. Then incorporate verified AI-derived insights into your next submission.