Kimi K2.5: A New Open-Source Multimodal Model Optimized for Coding and Video

A Chinese AI startup has released Kimi K2.5, a native multimodal model that processes text, images and video. Trained on roughly 15 trillion mixed visual and text tokens, Kimi K2.5 is positioned as an open-source alternative for developer workflows, coding tasks and complex multimodal reasoning over video. Early benchmarks reported by the developer show the model performing competitively with leading proprietary systems and, in some tests, surpassing them.

What is Kimi K2.5 and why it matters

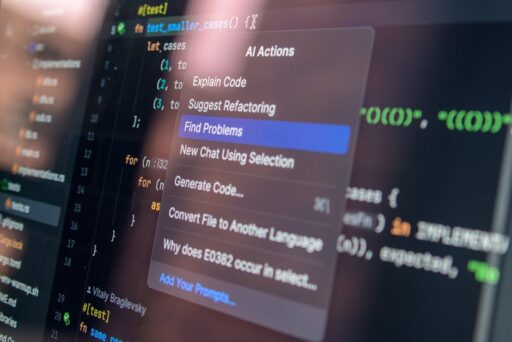

Kimi K2.5 is designed from the ground up to be multimodal, rather than adding vision to a text-only model after the fact. That native design means the model can consume and reason about images and videos alongside text, enabling use cases that combine visual context with code generation and natural-language instructions. The release also includes Kimi Code, an open-source coding tool that brings these multimodal capabilities directly into developer environments such as terminals and popular editors.

What makes Kimi K2.5 different from other multimodal models?

Kimi K2.5 differentiates itself in three practical ways:

- Native multimodality: trained on a massive mix of textual and visual tokens, so image/video understanding is integral rather than bolted on.

- Developer-first tooling: Kimi Code is released alongside the model to let engineers use images and videos as inputs for coding tasks.

- Agent orchestration: the model is tuned for multi-agent workflows—scenarios where several agents coordinate to solve a task.

Technical deep dive

Training data and scale

The team reports training Kimi K2.5 on about 15 trillion mixed visual and text tokens. That scale is intended to ensure the model sees a broad variety of paired and unpaired modalities during training, improving cross-modal alignment and the ability to reason about temporal visual inputs such as video.

Architecture notes

The release does not fully document the architecture, but Kimi K2.5 follows the multimodal transformer paradigm, with adaptations for efficient video processing and token fusion. The emphasis is on contextual grounding: when visual inputs are provided, code and instructions are generated with direct reference to them.

Agent swarms and orchestration

One stated use case for Kimi K2.5 is orchestrating multiple agents that collaborate on complex workflows, which the company describes as “agent swarms.” These setups typically involve smaller, specialized agents, each handling a task such as data extraction, code synthesis, testing or UI generation, that coordinate to complete an end-to-end project.
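To make the coordination pattern concrete, here is a minimal sketch of a sequential orchestrator in Python. It is an illustration only and not Kimi K2.5's actual orchestration mechanism, which the release does not detail. The names `Orchestrator`, `extract_requirements`, `synthesize_code`, `check_code` and `build_pipeline` are all hypothetical, and the agents are plain stub functions standing in for model calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical types: each agent reads the shared state and returns updates to it.
State = Dict[str, str]
Agent = Callable[[State], State]


@dataclass
class Orchestrator:
    """Runs specialist agents in registration order over one shared state."""
    agents: List[Tuple[str, Agent]] = field(default_factory=list)

    def register(self, name: str, agent: Agent) -> None:
        self.agents.append((name, agent))

    def run(self, task: str) -> State:
        state: State = {"task": task}
        order: List[str] = []
        for name, agent in self.agents:
            # Merge each agent's output so later agents can build on it.
            state.update(agent(state))
            order.append(name)
        state["order"] = " -> ".join(order)
        return state


# Stub agents. A real setup would call a model here instead.
def extract_requirements(state: State) -> State:
    return {"requirements": state["task"].strip().lower()}


def synthesize_code(state: State) -> State:
    return {"code": f"# TODO: implement {state['requirements']}"}


def check_code(state: State) -> State:
    ok = state["code"].startswith("# TODO")
    return {"checked": "yes" if ok else "no"}


def build_pipeline() -> Orchestrator:
    orchestrator = Orchestrator()
    orchestrator.register("extract", extract_requirements)
    orchestrator.register("code", synthesize_code)
    orchestrator.register("test", check_code)
    return orchestrator


if __name__ == "__main__":
    result = build_pipeline().run("Login form")
    print(result["order"])
    print(result["code"])
```

A sequential pipeline is the simplest possible design. Real multi-agent systems often add parallel execution, retries and a planner that decides which agent runs next.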

For context on multi-agent systems and how they’re evolving, see our coverage of broader agent toolsets in Airtable Superagent: The Future of Multi-Agent AI Tools.

Benchmarks: Where Kimi K2.5 stands

Benchmark results shared by the developer show Kimi K2.5 delivering strong performance across coding and video-understanding tasks. Notable highlights from these self-reported figures include:

- Leading scores on several coding benchmarks, including higher marks on verified and multilingual developer tests.

- Top-tier performance on video reasoning evaluations that measure cross-modal temporal understanding and complex inference.

- Competitive parity with major proprietary systems across a broad range of language and multimodal tasks.

These results suggest Kimi K2.5 has been tuned specifically for practical engineering scenarios where code quality, correctness and contextual understanding of visual inputs matter.

For readers tracking other top multimodal releases, Kimi K2.5 arrives alongside recent launches such as Gemini 3 Flash and the large reasoning models covered in our piece on GPT-5.2-powered AI workspaces.

Kimi Code: Bringing multimodal coding to developers

Kimi Code is an open-source developer tool released alongside the model that allows code generation and editing using text, image and video inputs. The tool is available as a command-line interface and can integrate with popular editors and development tools. Kimi Code highlights include:

- Terminal-first workflows for quick iteration and scripting.

- Editor integrations (VSCode, Cursor, Zed and others) to embed multimodal prompts directly into the development loop.

- Support for images and videos as inputs, enabling tasks like reproducing UI layouts and generating code from visual prototypes.

These features position Kimi Code as a direct competitor to other AI-driven developer tools, especially for teams that want open-source alternatives with strong visual-to-code capabilities.

Use cases: Where Kimi K2.5 can be applied

Practical applications for Kimi K2.5 include:

- Visual UI replication — feed screenshots or short screen recordings and ask the model to generate matching front-end code.

- Video-based QA and summarization — extracting structured insights from instructional or surveillance video.

- Multilingual coding assistance — generate and verify code across languages and frameworks.

- Coordinated agent workflows — orchestrate specialist agents for end-to-end feature development and testing.

Limitations and considerations

No model is perfect, and responsible adoption requires awareness of limitations:

- Data provenance: With models trained on massive mixed datasets, transparency about the datasets and filtering processes remains crucial.

- Hallucinations: Even strong multimodal models can generate plausible-sounding but incorrect code or misinterpret visual context without careful prompts and verification.

- Evaluation variability: Benchmark wins on specific datasets don’t guarantee uniform superiority across all real-world tasks.

Developers should build robust testing and human-in-the-loop review into any production deployment of generative code or automated agent orchestration.

How this release affects the AI tooling market

Kimi K2.5’s open-source approach and developer tooling accelerate competition in the AI coding and multimodal space. Coding assistants and CLI-integrated developer tools have quickly become significant business lines for model providers, and open-source alternatives can reshape adoption by lowering barriers to experimentation.

Revenue from coding tools has grown rapidly across the industry. Powerful open-source stacks such as Kimi K2.5 plus Kimi Code could shift adoption toward hybrid deployments, in which companies combine self-hosted components with cloud services.

Is Kimi K2.5 ready for production?

Kimi K2.5 shows strong technical promise and practical features, but production readiness depends on your organization’s risk tolerance and verification processes. Recommended steps before broad rollout:

- Run in-house benchmarks using your own datasets and use cases.

- Implement deterministic testing and code-review workflows for any AI-generated code.

- Audit and sanitize inputs when dealing with user-submitted images or videos to avoid privacy or safety issues.
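The first two steps above can be sketched as a small, deterministic evaluation harness. This is a minimal illustration under stated assumptions, not an official Kimi tool: `Case`, `pass_rate`, `is_valid_python` and `fake_generate` are hypothetical names, and `fake_generate` returns canned strings where a real pilot would call whichever model is under evaluation.

```python
import ast
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Case:
    """One in-house test case: a prompt plus a deterministic acceptance check."""
    prompt: str
    check: Callable[[str], bool]


def is_valid_python(source: str) -> bool:
    """Cheap first gate: does the generated text parse as Python at all?"""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


def pass_rate(generate: Callable[[str], str], cases: List[Case]) -> float:
    """Fraction of cases whose generated output passes its check."""
    if not cases:
        raise ValueError("at least one case is required")
    passed = 0
    for case in cases:
        try:
            ok = case.check(generate(case.prompt))
        except Exception:
            # A crash in generation or checking counts as a failure.
            ok = False
        passed += 1 if ok else 0
    return passed / len(cases)


# Hypothetical stand-in for a model call, with canned outputs for the demo.
def fake_generate(prompt: str) -> str:
    canned = {
        "add": "def add(a, b):\n    return a + b\n",
        "broken": "def oops(:\n",
    }
    return canned[prompt]


if __name__ == "__main__":
    cases = [Case("add", is_valid_python), Case("broken", is_valid_python)]
    print(pass_rate(fake_generate, cases))
```

A syntax check is only a first gate. In practice each `check` would run your own unit tests against the generated code, and anything that passes would still go through human code review.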

Next steps for developers and teams

If you’re interested in experimenting with Kimi K2.5 and Kimi Code, consider starting with a small pilot that focuses on one of the model’s clear strengths — for example, UI reproduction from screenshots or video-assisted bug triage. Integrate human verification from the outset and compare outputs directly against other tools in your stack.

Conclusion

Kimi K2.5 introduces a capable, open-source multimodal option that targets real developer pain points: generating reliable code from mixed inputs and enabling agent-based orchestration for complex workflows. Its competitive benchmark performance and the inclusion of Kimi Code make it a noteworthy entrant in the fast-moving space of multimodal models and AI developer tools.

Explore how multimodal AI is transforming workflows across industries in related coverage, and watch how open-source projects like Kimi K2.5 shift the balance between proprietary platforms and community-driven tooling.
