Is the LLM Bubble Bursting? What Comes Next for AI

Large language models (LLMs) have dominated headlines, investment rounds, and enterprise pilots for the past few years. But as attention concentrates on these general-purpose giants, an important debate is emerging: are we witnessing an LLM bubble—a surge of capital and expectations focused narrowly on one family of models—that could deflate soon?

Why some observers call it an “LLM bubble”

The term “LLM bubble” captures three connected trends: disproportionate funding for general-purpose LLMs, public and investor expectations that one model can solve many problems, and the operational cost to train and serve ever-larger models. Those trends have produced rapid innovation, but they also create risks when a single approach is treated as a catch-all solution.

Key drivers behind the phenomenon:

- Concentration of capital and compute on a few large models.

- Media and product hype that equates LLM capability with universal AI progress.

- Enterprise trials that underestimate latency, cost, privacy, and customization needs.

What happens if the LLM bubble bursts?

In the short term, a re-rating of companies built primarily on LLM hype is possible. Investors and customers may shift budgets toward more pragmatic and specialized solutions. But a cooling of investor enthusiasm for one class of models doesn’t mean AI innovation will slow. Instead, the market is likely to rebalance toward diversity in model types, deployment patterns, and vertical use cases.

Immediate effects to expect

- Reduced valuations for players relying solely on large, monolithic LLM architectures.

- Acceleration of work on smaller, task-specific models that are cheaper and easier to run.

- Greater emphasis on data quality, model evaluation, and measurable ROI.

Why the industry won’t collapse

AI is not synonymous with any single architecture. The ecosystem includes vision, speech, structured predictors, domain-specific models, and hybrid systems combining symbolic methods and learned components. Even if the LLM craze cools, the underlying demand for automation, decision support, and intelligent interfaces will sustain growth across other approaches.

How specialized models will reshape adoption

Practitioners are increasingly recognizing that the right model depends on the job. For many enterprise use cases, an extremely large general-purpose LLM is overkill:

- Customer service bots need domain knowledge, privacy controls, and low latency more than broad creativity.

- High-frequency applications (e.g., trading signals, real-time monitoring) often need small, fast, deterministic models that fit tight latency budgets.

- Regulated sectors demand explainability and audit trails, which are usually easier to provide with narrower architectures.

Smaller, specialized models deliver several practical benefits: lower inference cost, faster response times, easier compliance, and the ability to run on-premises or in constrained cloud environments. Enterprises that prioritize these attributes will likely achieve better ROI than organizations chasing marginal improvements from larger LLMs.

How can organizations adapt? (Step-by-step guide)

Enterprises should adopt a pragmatic, multi-model strategy instead of a single-model bet. Below is an actionable sequence leaders can follow:

- Audit use cases to classify by performance, latency, privacy, and interpretability needs.

- Map each use case to an appropriate model family: an LLM, a vision or audio model, or a specialized rule-augmented system.

- Prototype with lightweight models in production-like conditions to validate cost and latency assumptions.

- Invest in tooling for model orchestration, monitoring, and fine-tuning across multiple model types.

- Measure outcomes by business metrics, not model size or training compute.
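To make the audit and mapping steps concrete, here is a minimal Python sketch of how a team might record audited use cases and map each one to a candidate model family. The fields, thresholds, and rule order are illustrative assumptions, not a standard taxonomy; a real audit would tune them to the organization's own latency, privacy, and compliance requirements.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    latency_budget_ms: int        # hard limit on end-to-end response time
    handles_sensitive_data: bool  # e.g. PII or regulated records
    needs_audit_trail: bool       # auditors must be able to trace each decision
    open_ended_generation: bool   # free-form text across many topics
    modality: str = "text"        # "text", "image", or "audio"


def recommend_family(uc: UseCase) -> str:
    """Map an audited use case to a candidate model family.

    The rules below are hypothetical and checked in priority order:
    modality first, then compliance, then latency/privacy, then versatility.
    """
    if uc.modality == "image":
        return "vision model"
    if uc.modality == "audio":
        return "audio model"
    if uc.needs_audit_trail:
        return "rule-augmented specialized model"
    if uc.latency_budget_ms < 100 or uc.handles_sensitive_data:
        return "small task-specific model (on-prem if needed)"
    if uc.open_ended_generation:
        return "general-purpose LLM"
    return "small task-specific model"


# Hypothetical audit results for four internal use cases.
audit = [
    UseCase("fraud alerts", 20, True, True, False),
    UseCase("support triage", 300, True, False, False),
    UseCase("research assistant", 5000, False, False, True),
    UseCase("invoice OCR", 1000, False, False, False, modality="image"),
]

for uc in audit:
    print(f"{uc.name}: {recommend_family(uc)}")
```

Keeping the mapping as explicit, reviewable rules means the output of the audit is itself auditable, and the rules can be revised as prototypes (the next step) confirm or overturn the initial assumptions.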

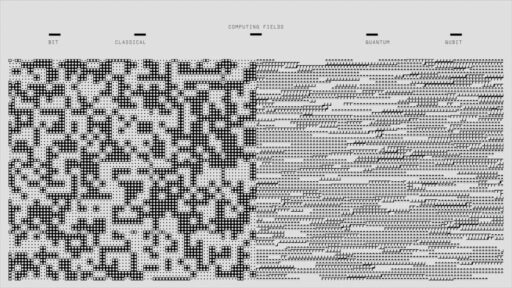

What technical shifts will accelerate the move away from LLM monoculture?

Several technical trends reinforce the transition to model diversity:

- Advances in model compression, quantization, and distillation that preserve most of a model's accuracy while shrinking its size.

- Better domain adaptation techniques that enable small models to absorb specialist knowledge efficiently.

- Hybrid architectures that combine retrieval, symbolic rules, and lightweight neural modules for predictable behavior.

- Infrastructure innovations that lower the cost of distributed inference for many small models.
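As an illustration of the first trend, the sketch below shows symmetric per-tensor int8 quantization in plain Python: each float weight is stored as a small integer plus one shared scale factor. This is a simplified teaching example under the assumption of a single scale per tensor; production toolkits typically use per-channel scales, calibration data, and optimized kernels, and distillation is a separate technique not shown here.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest-magnitude weight to +/-127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp into the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 values and the shared scale."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.88, -0.51]  # toy weights for illustration
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"scale={scale:.4f}, max abs error={max_err:.4f}")
```

Storing each weight as one int8 byte instead of a four-byte float32 cuts weight memory by roughly 4x, and the per-weight rounding error is bounded by half the scale, which is why well-behaved models often lose little accuracy after this step.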

Related research and deployments

Coverage across AI disciplines suggests the future will be heterogeneous. For perspective on how thinking beyond mere scale matters, see our analysis on The Future of AI: Beyond Scaling Large Language Models. For work focused on model efficiency and new architectures, check our piece on Diffusion-Based LLMs: A Faster, Cheaper Way to Code. And for how memory and persistent context will change app design, consult AI Memory Systems: The Next Frontier for LLMs and Apps.

Will smaller models displace LLMs entirely?

No. Rather than displacement, expect specialization. LLMs retain unique strengths—broad knowledge, few-shot learning, and flexible language generation—that will continue to power research, creative workflows, and tasks where versatility matters. But production-grade systems will increasingly combine LLMs with targeted components to optimize cost, latency, and compliance.

Where LLMs remain the best fit

- Exploratory research and multi-domain prototypes.

- Tasks requiring open-ended generation or complex synthesis across topics.

- Scenarios where human oversight is always present and the premium on raw capability outweighs cost.

Operational lessons for builders and investors

Organizations that build for the long term will be distinguished by capital efficiency, measurement rigor, and modular system design. Concrete practices include:

- Prioritizing product-market fit over architecture-first design.

- Tracking cost-per-successful-inference and other unit economics, not just model benchmarks.

- Designing for model interchangeability so teams can swap in specialized models as needs evolve.
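Cost-per-successful-inference is simple arithmetic: total inference spend divided by the number of requests that actually met the task's acceptance criteria. The Python sketch below compares two hypothetical deployments; the request counts, success counts, and per-request prices are made-up numbers chosen only to show how a cheaper model with a lower success rate can still win on unit economics.

```python
from dataclasses import dataclass


@dataclass
class ModelRun:
    name: str
    requests: int                # total inference calls in the measurement window
    successes: int               # calls that met the task's acceptance criteria
    cost_per_request_usd: float  # average compute or API cost per call

    def total_cost(self) -> float:
        return self.requests * self.cost_per_request_usd

    def cost_per_success(self) -> float:
        # A run with zero successes has no meaningful unit cost.
        if self.successes == 0:
            return float("inf")
        return self.total_cost() / self.successes


# Hypothetical figures for the same task served by two different models.
large = ModelRun("large general LLM", requests=10_000, successes=9_200, cost_per_request_usd=0.02)
small = ModelRun("small specialist", requests=10_000, successes=8_500, cost_per_request_usd=0.002)

for run in (large, small):
    rate = run.successes / run.requests
    print(f"{run.name}: success rate {rate:.0%}, "
          f"${run.cost_per_success():.4f} per successful inference")
```

In practice the failures also carry downstream costs (retries, human escalation), so teams may want to add those to the numerator rather than compare raw inference prices alone.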

Investor perspective

Investors will likely favor businesses demonstrating sustainable economics and clear paths to profitable deployment. That does not eliminate high-growth bets, but it increases scrutiny around whether a model-centric story translates into real-world revenue and defensibility.

How to spot high-quality, non-speculative AI bets

Look for companies that combine these attributes:

- Clear ROI metrics and customer references.

- Low marginal inference cost or viable on-prem deployment options.

- Domain expertise embedded in model training and evaluation.

- Robust observability and model governance practices.

FAQ: What should product and engineering teams do now?

1. Should we stop using LLMs?

No. Evaluate LLMs against specific product metrics. Use them where their strengths materially improve user outcomes and back them with fallbacks or guardrails for cost and safety.

2. When should we prefer a smaller, specialized model?

If latency, cost, privacy, or predictable outputs are primary constraints, a smaller model is often superior. Pilot both approaches and measure business KPIs.

3. How should teams prepare for a multi-model future?

Invest in orchestration, versioning, and monitoring systems that treat models as interchangeable services. Prioritize data pipelines and evaluation benchmarks aligned to business outcomes.
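One way to treat models as interchangeable services is to put every model behind the same small interface and let a router try them in a configured order, falling back when one declines a request. The Python sketch below assumes a hypothetical `TextModel` protocol, two stand-in model classes, and a `Router`; none of these are a real library's API, and the "low confidence" check is a placeholder for whatever acceptance test a team actually uses.

```python
from typing import List, Protocol, Tuple


class TextModel(Protocol):
    """Hypothetical common interface every model service must satisfy."""
    name: str

    def generate(self, prompt: str) -> str: ...


class SmallIntentModel:
    """Stand-in for a cheap specialist model; declines inputs it cannot handle."""
    name = "small-intent-v3"  # hypothetical version tag

    def generate(self, prompt: str) -> str:
        if "refund" in prompt.lower():
            return "intent: refund"
        raise ValueError("low confidence")


class GeneralLLM:
    """Stand-in for a general-purpose LLM used as the fallback."""
    name = "general-llm"  # hypothetical version tag

    def generate(self, prompt: str) -> str:
        return f"[general answer to: {prompt}]"


class Router:
    """Tries models in order; any model matching TextModel can be swapped in."""

    def __init__(self, chain: List[TextModel]):
        self.chain = chain

    def generate(self, prompt: str) -> Tuple[str, str]:
        errors = []
        for model in self.chain:
            try:
                # Return which model answered so monitoring can track usage.
                return model.name, model.generate(prompt)
            except ValueError as exc:
                errors.append(f"{model.name}: {exc}")
        raise RuntimeError("all models failed: " + "; ".join(errors))


router = Router([SmallIntentModel(), GeneralLLM()])
print(router.generate("I want a refund for my order"))
print(router.generate("Summarize our Q3 product feedback"))
```

Because the router only depends on the shared interface, replacing the specialist with a newer version, or reordering the chain to put the cheapest model first, is a configuration change rather than a rewrite, and the returned model name gives monitoring a per-model record of which service handled each request.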

Conclusion: A diversified model economy

Talk of an “LLM bubble” is not a death knell for AI. It’s a market signal prompting a shift from scale-for-scale’s-sake to pragmatic, use-case-driven engineering. The years ahead will likely feature a richer ecosystem: compact specialist models, efficient inference, hybrid systems, and improved governance. Organizations that move early to align model choice with real-world constraints will capture value while avoiding the downside of hype cycles.

Next steps (practical checklist)

- Run a use-case audit and tag each case by sensitivity, latency, and cost profile.

- Prototype with both large and small models and measure end-to-end economics.

- Invest in model orchestration and governance to enable safe experimentation.

- Educate stakeholders on realistic timelines and ROI expectations for AI projects.

If you want to dive deeper into how to architect for a post-LLM monoculture, subscribe to Artificial Intel News for ongoing analysis and hands-on guides. Ready to reassess your AI strategy? Contact our editorial team for a tailored framework to evaluate model fit and deployment options. Take action now and build AI systems that are resilient, efficient, and aligned to business outcomes.