How AI Coding Tools Impact Open Source Software

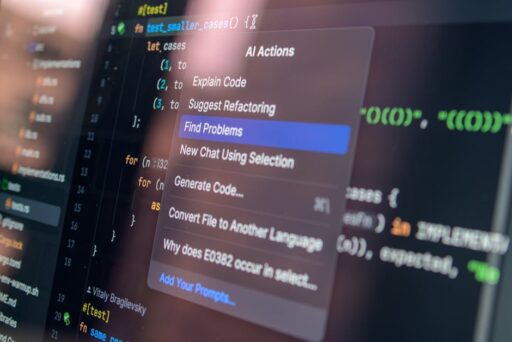

AI-assisted coding is transforming how software gets written. Large language models and other AI coding systems can generate prototypes, scaffold features, and speed up routine tasks. For open source projects, these tools promise faster contribution and broader participation, yet they also introduce new risks: lower-quality submissions, reviewer overload, and growing maintenance debt. This article examines how AI coding tools are affecting open source ecosystems, summarizes evidence from real projects, and offers practical policies and tooling strategies maintainers can adopt.

How are AI coding tools changing open source contributions?

Short answer: they speed up code creation but can erode contribution quality. AI tools lower the barrier to producing code, enabling more people to generate pull requests, bug fixes, or feature patches. That increase in quantity does not always translate into quality.

What maintainers are seeing in practice

Across multiple well-known projects, maintainers report a surge of low-quality contributions that require significant reviewer time. Examples include:

- Merge requests that compile but do not follow project architecture or testing conventions.

- Security reports or bug reports that lack reproducible steps, forcing maintainers to spend time triaging noise.

- Contributions that superficially address a problem but introduce regressions or duplicate existing functionality.

These patterns are visible in media coverage and community discussions: projects with large codebases and limited maintainer bandwidth are especially vulnerable to being overwhelmed by automated or AI-assisted submissions.

Why does AI-generated code often create more work for maintainers?

At scale, AI changes the contributing equation. Consider two core dynamics:

- The volume of contributions grows faster than the pool of experienced contributors. AI lets novices assemble patches quickly, but it does not substitute for deep familiarity with a codebase.

- Complexity compounds. Modern software systems have many interdependencies; naive changes can ripple and cause subtle failures across modules.

In short: AI tools make it easier to produce code, but managing software complexity remains a human task. If engineering is defined as producing working software, AI helps. If engineering is defined as maintaining long-lived, robust systems, AI can make the job harder by multiplying surface-level contributions that require careful review.
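To make the "complexity compounds" point concrete, here is a small sketch that computes which modules a change can ripple into by walking the dependency graph in reverse, breadth-first. The module names and the `DEPENDS_ON` graph are hypothetical; a real project would derive its graph from the build system or from import analysis.

```python
from collections import deque

# Hypothetical module graph: each key imports the modules in its list.
DEPENDS_ON: dict[str, list[str]] = {
    "cli": ["core", "config"],
    "server": ["core", "auth"],
    "auth": ["core", "crypto"],
    "core": ["utils"],
    "config": ["utils"],
    "crypto": [],
    "utils": [],
}


def reverse_edges(graph: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert 'A depends on B' into 'B is depended on by A'."""
    dependents: dict[str, list[str]] = {name: [] for name in graph}
    for module, deps in graph.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(module)
    return dependents


def impacted_by(changed: str, graph: dict[str, list[str]]) -> set[str]:
    """Return every module that transitively depends on `changed` (BFS)."""
    dependents = reverse_edges(graph)
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


if __name__ == "__main__":
    print(sorted(impacted_by("utils", DEPENDS_ON)))
    # → ['auth', 'cli', 'config', 'core', 'server']
```

A one-line edit to the low-level `utils` module touches every other module except `crypto` in this toy graph, which is exactly the kind of reach a reviewer has to reason about and a quickly generated patch may not.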

Evidence from projects and maintainers

Maintainers from established open source projects have reported that LLM-assisted contributions often require more review time. For some codebases, the perceived drop in average submission quality has led teams to rethink contribution policies and to build additional automation and gating mechanisms to protect reviewer time and project stability.

What are the concrete risks and benefits?

Below is a concise pros-and-cons view to help maintainers and community managers plan strategy.

- Benefits
  - Faster prototyping and scaffolding of features.
  - Improved developer productivity for experienced contributors.
  - Lower barrier for newcomers to experiment and learn.

- Risks
  - Flood of low-quality or inconsistent contributions.
  - Increased reviewer workload and burnout.
  - Potential for introduced security regressions or licensing issues.
  - Fragmentation: many small, incompatible forks or duplicated efforts.

How can open source projects manage the AI-driven contribution flood?

Projects can be proactive. The goal is not to ban AI tools, but to harness them safely and to maintain high code quality and project health. Consider this prioritized list of actions:

- Define contribution policies that explicitly address AI-assisted code. Clarify whether generated code is allowed, whether authors must declare AI assistance, and how licensing/attribution should be handled.

- Raise the signal-to-noise ratio with lightweight gating. Require contributors to complete a short verification (e.g., link to a verified account, required tests passing) before automated merging or review prioritization.

- Automate quality checks. Expand continuous integration to include static analysis, security scanners, dependency checks, and style enforcement so that many low-quality PRs are filtered automatically.

- Increase review capacity thoughtfully. Recruit and empower a broader set of maintainers, rotate review tasks, and document common review patterns to reduce cognitive load.

- Educate contributors. Publish clear contribution templates, examples of high-quality PRs, and guidance on how to use AI tools responsibly for your codebase.

- Introduce reputation or vouching systems. For high-traffic projects, limit merge rights or review priority to vouched contributors or those with proven track records.
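The gating and vouching ideas above can be combined into a single triage step. This is a minimal sketch, assuming the project already collects each PR's account-verification status, CI result, and an AI-disclosure answer; the `PullRequest` fields, the `VOUCHED` allowlist, and the decision labels are hypothetical names for illustration, not any forge's API.

```python
from dataclasses import dataclass

# Hypothetical allowlist of vouched contributors (e.g. loaded from a file in the repo).
VOUCHED: set[str] = {"alice", "bob"}


@dataclass
class PullRequest:
    # Hypothetical fields; a real bot would fill these from the forge API and CI status.
    author: str
    account_verified: bool
    tests_passed: bool
    ai_disclosure_answered: bool


def gate(pr: PullRequest) -> tuple[str, list[str]]:
    """Return (decision, reasons): 'needs-work', 'priority-review', or 'review'."""
    reasons: list[str] = []
    if not pr.account_verified:
        reasons.append("author account is not verified")
    if not pr.tests_passed:
        reasons.append("required tests are failing")
    if not pr.ai_disclosure_answered:
        reasons.append("AI-assistance disclosure is missing")
    if reasons:
        # Nothing reaches a human reviewer until the basic checks pass.
        return "needs-work", reasons
    if pr.author in VOUCHED:
        # Vouched contributors are reviewed first.
        return "priority-review", []
    return "review", []


if __name__ == "__main__":
    print(gate(PullRequest("alice", True, True, True)))
    print(gate(PullRequest("newcomer", True, False, False)))
```

A passing PR from a vouched author lands in `priority-review`; one with failing tests comes back as `needs-work` along with its reasons, which a bot could post as a comment so maintainers do not have to triage it by hand.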

Tools and policies that work

Many projects are experimenting with mechanisms that combine policy and tooling. Practical measures include:

- Mandatory test coverage thresholds for new code.

- Automated triage that labels submissions showing patterns typical of generated code.

- Contribution review queues that prioritize reproducible bug reports and security fixes.

For maintainers aiming to stay ahead, the focus should be on systems that scale trust rather than attempting to block contributions outright.
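A review queue that favours security fixes and reproducible bug reports is essentially a priority queue. The sketch below uses Python's `heapq`, with a sequence counter so that items sharing a label stay in submission order; the label names and their ranks in `PRIORITY` are assumptions a project would tune to its own triage labels.

```python
import heapq
import itertools
from dataclasses import dataclass, field

# Hypothetical label ranks: a lower number is reviewed sooner.
PRIORITY: dict[str, int] = {
    "security-fix": 0,
    "reproducible-bug": 1,
    "feature": 2,
    "unlabeled": 3,
}


@dataclass(order=True)
class QueueItem:
    rank: int
    seq: int  # insertion order breaks ties within the same rank
    title: str = field(compare=False)


class ReviewQueue:
    """Min-heap of PRs ordered by (label rank, submission order)."""

    def __init__(self) -> None:
        self._heap: list[QueueItem] = []
        self._counter = itertools.count()

    def push(self, title: str, label: str) -> None:
        # Unknown labels fall back to the lowest priority.
        rank = PRIORITY.get(label, PRIORITY["unlabeled"])
        heapq.heappush(self._heap, QueueItem(rank, next(self._counter), title))

    def pop(self) -> str:
        return heapq.heappop(self._heap).title

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = ReviewQueue()
    queue.push("Add dark mode", "feature")
    queue.push("Fix auth bypass", "security-fix")
    queue.push("Refactor utils", "needs-triage")
    queue.push("Crash on empty config", "reproducible-bug")
    while queue:
        print(queue.pop())
```

Run as a script, this prints the security fix first, then the reproducible bug, the feature, and finally the PR with an unrecognized label.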

How should projects balance innovation and stability?

Different stakeholders have different incentives. Companies may prioritize shipping features, while many open source projects emphasize long-term stability and backward compatibility. Aligning incentives requires clear governance and an explicit definition of project priorities.

Strategies for balance:

- Maintain a strict release branch with rigorous review for production builds, while allowing an experimental branch where faster, AI-assisted contributions can be iterated.

- Establish a maintainer-driven roadmap so contributions align with long-term goals rather than ad-hoc feature additions.

- Use code owners and module-level maintainers to contain risk to specific areas of the codebase.
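Module-level ownership can be expressed as path rules mapped to reviewers. This sketch is loosely modelled on GitHub's CODEOWNERS file, where the last matching pattern takes precedence, but it uses `fnmatch.fnmatchcase` globbing, which differs from real CODEOWNERS syntax (for example, `*` here also matches `/`). The team handles and paths are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical ownership rules, loosely modelled on a CODEOWNERS file.
# The LAST matching rule wins, so broad rules go first and specific ones after.
OWNERSHIP_RULES: list[tuple[str, list[str]]] = [
    ("*", ["@core-maintainers"]),
    ("docs/*", ["@docs-team"]),
    ("src/crypto/*", ["@security-team", "@core-maintainers"]),
]


def owners_for(path: str) -> list[str]:
    """Return the owners of `path`; later matching rules override earlier ones."""
    owners: list[str] = []
    for pattern, team in OWNERSHIP_RULES:
        # Simplification: fnmatch's "*" also matches "/", unlike CODEOWNERS globs.
        if fnmatchcase(path, pattern):
            owners = team
    return owners


def required_reviewers(changed_paths: list[str]) -> set[str]:
    """Union of owners across every file touched by a change."""
    reviewers: set[str] = set()
    for path in changed_paths:
        reviewers.update(owners_for(path))
    return reviewers


if __name__ == "__main__":
    print(sorted(required_reviewers(["src/crypto/aes.py", "docs/install.md"])))
    # → ['@core-maintainers', '@docs-team', '@security-team']
```

A change that touches the crypto module automatically pulls in the security team, which keeps risky areas behind the reviewers who know them best.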

What practical steps can maintainers implement today?

Here is a concise checklist maintainers can apply immediately:

- Create or update a CONTRIBUTING.md that includes your stance on AI-assisted code.

- Add CI gates for tests, linters, and security checks.

- Introduce a simple vouching process for trusted contributors.

- Provide example PRs and a checklist for reviewers and contributors.

- Monitor contribution metrics to detect sudden spikes that may indicate low-quality automation.
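Spike monitoring can start as simply as comparing this week's PR count with recent history. The sketch below flags a week whose count exceeds the trailing mean by more than `k` sample standard deviations; the default `k=3.0` and the floor of 1.0 on the spread are arbitrary starting points, not recommendations from any project.

```python
from statistics import mean, stdev


def is_spike(history: list[int], current: int, k: float = 3.0) -> bool:
    """Return True if `current` exceeds mean(history) + k * stdev(history).

    `history` holds recent weekly PR counts; at least two weeks are required
    because the sample standard deviation is undefined for a single point.
    """
    if len(history) < 2:
        raise ValueError("need at least two weeks of history")
    # Floor the spread at 1.0 so a perfectly flat history does not flag +1 PR as a spike.
    spread = max(stdev(history), 1.0)
    return current > mean(history) + k * spread


if __name__ == "__main__":
    weekly_prs = [20, 22, 19, 21, 23, 20, 22, 21]
    print(is_spike(weekly_prs, 24))  # → False
    print(is_spike(weekly_prs, 60))  # → True
```

A flagged week is a prompt to look at what arrived, not proof of automation; a release or a conference sprint can cause the same jump.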

How are industry discussions framing the outlook?

Analysts and investors note that AI accelerates both code generation and the rate at which complexity grows. A common refrain is that AI does not increase the number of skilled maintainers: it empowers those who already exist but leaves the core challenge of long-term maintenance unresolved. This suggests that investment in maintainer tooling, training, and governance will matter as much as improvements to the generative models themselves.

Where does this leave the future of software engineering?

Rather than spelling the end of software engineering, the rise of AI coding tools is reshaping roles and priorities. Expect to see:

- Greater emphasis on architecture, testing, and systems thinking, the skills that mitigate the complexity introduced by rapid code generation.

- New roles focused on AI-augmented review, triage, and quality assurance.

- Tooling and governance innovations (for example, reputation systems and advanced CI pipelines) that scale trust across large contributor populations.

If you want a broader perspective on how AI-driven coding platforms are evolving, see reporting on vibe coding momentum and why the developer experience is changing. For context on how AI-assisted coding has reached a tipping point, read our analysis. For a forward-looking take on agentic and automated development workflows, see Agentic Software Development.

Key takeaways

- AI coding tools increase throughput but can reduce average submission quality when maintainer capacity is limited.

- Open source projects should adopt clear AI contribution policies, stronger CI, and reputation-building mechanisms to preserve signal quality.

- Long-term success will depend on scaling trust and investing in maintainer tools and education, not just on model capabilities.

Ready to act? Practical next steps for your project

Start with three pragmatic moves this week:

- Update your CONTRIBUTING.md with an AI assistance disclosure and a short checklist for contributors.

- Enable CI gates for tests and static analysis on all incoming PRs.

- Set up a basic vouching or reputation process to prioritize reviewer attention.

These measures reduce reviewer friction and help your project benefit from AI-assisted development without being overwhelmed by low-quality contributions.
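An AI-assistance disclosure in CONTRIBUTING.md is easier to enforce if the PR template asks contributors to tick exactly one checkbox. The sketch below checks a PR description for that; the wording in `DISCLOSURE_OPTIONS` and the Markdown checkbox format are assumptions about how a project might phrase its template.

```python
import re

# Hypothetical disclosure options a PR template might ask contributors to tick.
DISCLOSURE_OPTIONS = (
    "No AI assistance was used",
    "AI assistance was used and I have reviewed and tested every line",
)

# Matches a ticked Markdown task-list item such as "- [x] Some text".
CHECKED_BOX = re.compile(r"^\s*[-*] \[[xX]\] (.+?)\s*$", re.MULTILINE)


def disclosure_status(pr_body: str) -> str:
    """Return 'ok', 'missing', or 'ambiguous' depending on how many options are ticked."""
    ticked = {m.group(1) for m in CHECKED_BOX.finditer(pr_body)}
    chosen = [opt for opt in DISCLOSURE_OPTIONS if opt in ticked]
    if not chosen:
        return "missing"
    if len(chosen) > 1:
        return "ambiguous"
    return "ok"


if __name__ == "__main__":
    body = (
        "Fixes a crash on empty config.\n\n"
        "- [x] No AI assistance was used\n"
        "- [ ] AI assistance was used and I have reviewed and tested every line\n"
    )
    print(disclosure_status(body))  # → ok
```

Run as a CI step, a `missing` or `ambiguous` result can block the PR from the review queue until the contributor edits the description.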

Call to action

Want ongoing coverage and practical guides on AI and software engineering? Subscribe to Artificial Intel News for weekly analysis, toolkit recommendations, and case studies. If you manage an open source project, share your experience in the comments — how are AI tools affecting your contribution pipeline?