Hallucinated Citations at NeurIPS: What Happened and What Comes Next

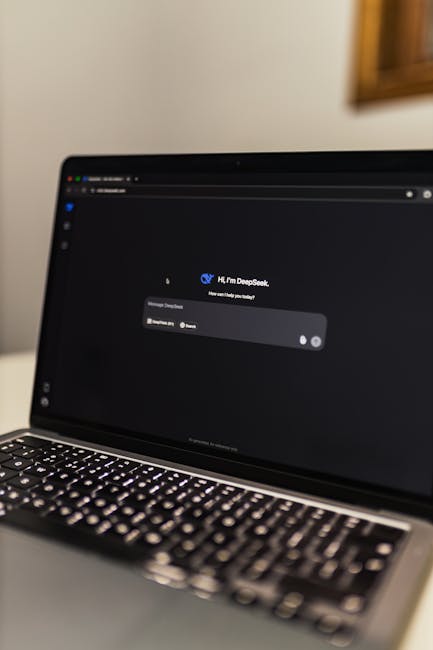

Last month, an independent AI-detection company scanned the set of papers accepted to the Conference on Neural Information Processing Systems (NeurIPS) and reported that 100 citations across 51 papers could not be verified and appear to be hallucinated or fabricated. The finding has stirred discussion across the machine learning community about the role of large language models (LLMs) in research workflows, the limits of peer review under heavy submission loads, and practical steps to preserve scholarly rigor.

What are hallucinated citations, and how common were they at NeurIPS?

Hallucinated citations are references invented by LLMs or other automated tools: they look like plausible scholarly citations but point to papers, authors, titles, or venues that do not exist. The NeurIPS scan identified 100 such citations across 51 accepted papers. To put that number in context:

- Each NeurIPS paper typically includes dozens of citations, so the total corpus of references spans tens of thousands of entries.

- Against a total in the tens of thousands, the 100 flagged citations amount to under one percent of the references scanned.

- Nevertheless, fabricated references carry outsized consequences for credibility, reproducibility, and the scholarly record.
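The arithmetic behind that context can be sketched in a few lines of Python. The figures 100 and 51 come from the scan as reported above; the total reference counts are hypothetical placeholders chosen only to span "tens of thousands", not reported numbers, and the function names are illustrative.

```python
FLAGGED_CITATIONS = 100  # reported by the scan
AFFECTED_PAPERS = 51     # reported by the scan


def flagged_per_affected_paper(flagged: int, papers: int) -> float:
    """Average number of flagged citations in each affected paper."""
    return flagged / papers


def flagged_share(flagged: int, total_references: int) -> float:
    """Fraction of all scanned references that were flagged."""
    if total_references <= 0:
        raise ValueError("total_references must be positive")
    return flagged / total_references


if __name__ == "__main__":
    per_paper = flagged_per_affected_paper(FLAGGED_CITATIONS, AFFECTED_PAPERS)
    print(f"{per_paper:.2f} flagged citations per affected paper")

    # Hypothetical totals (assumptions, not figures from the scan).
    for assumed_total in (20_000, 50_000, 90_000):
        share = flagged_share(FLAGGED_CITATIONS, assumed_total)
        print(f"assumed total {assumed_total:>6}: {share:.2%} flagged")
```

Under any of these assumed totals the flagged share stays at or below half a percent, while the affected papers average roughly two flagged citations each.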

The discovery raises two immediate questions: are these isolated mistakes, or symptoms of a larger trend? And how do they affect the scientific value of the affected papers?

Why inaccurate citations matter

At first glance, a single incorrect reference might seem trivial: it does not prove the experiments are wrong. Yet citations are the currency of research. They document intellectual lineage, allow readers to verify prior work, and help assess impact and contribution. Fabricated citations undermine that ecosystem in several ways:

1. Erosion of verification and reproducibility

If readers or reviewers cannot find cited prior work, reproducing and contextualizing results becomes harder. Fabricated references create dead ends in literature reviews and slow down follow-up research.

2. Distorted academic metrics

Citations are used, sometimes controversially, to measure influence, distribute credit, and support hiring and funding decisions. Fabricated references dilute the signal those metrics provide.

3. Review pipeline stress

Conferences like NeurIPS rely on volunteer peer reviewers who evaluate many submissions under time pressure. As submission volumes surge, reviewers may focus on core technical claims and overlook reference-level verification, especially when citations appear plausible. That dynamic can let hallucinated citations persist into the published record.

How did hallucinated citations appear in elite research papers?

There are several plausible pathways:

- Direct LLM use for drafting: Authors may use LLMs to write literature reviews or generate citation lists. Unless prompts are carefully constrained and the output is checked, references the model fabricates can end up in the manuscript.

- Reference manager errors: Automated tools that try to autocomplete citations from brief prompts or titles may return incorrect matches or invent entries when confidence is low.

- Copy-editing shortcuts under time pressure: In a rush to submit or finalize, authors may accept suggested citations without verifying each entry.

These mechanisms show that hallucinated citations are less a failure of expertise and more a failure of process: relying on automation without verification creates brittle outcomes, even for world-class researchers.

Are the core research results invalidated by false citations?

Not necessarily. An incorrect reference does not automatically negate experimental results, theoretical proofs, or dataset claims. However, fabricated references complicate the task of situating a paper in prior work. At minimum, they require post-publication correction and clarification. At worst, they can mask the absence of an important supporting study or create the impression of prior consensus that did not exist.

What can researchers do to prevent hallucinated citations?

Preventing fabricated references is largely a matter of process and tooling. Practical steps include:

- Verify all citations manually before submission: open each referenced work and confirm title, authors, venue, and DOI.

- Use trusted bibliographic databases and DOIs rather than model-generated citations when possible.

- Adopt citation linting as part of the author checklist: automated scripts can flag missing DOIs, broken links, and mismatches between citation text and metadata.

- Encourage team reviews: assign a coauthor to independently check references as part of the final pass.

- If using LLMs for drafting, separate tasks: use the model to draft prose but source citations from indexed databases and bibliographic tools.
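The citation-linting step above could look something like the following minimal sketch. It assumes bibliography entries are already parsed into dictionaries; the field names, the `lint_entry` function, the DOI pattern, and the 0.9 similarity threshold are all illustrative assumptions rather than an established tool. It performs no network access: any database record must be looked up separately and passed in as `metadata`.

```python
from __future__ import annotations

import re
from difflib import SequenceMatcher

# Loose shape check for modern DOIs (an assumption, not a full validator).
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")

# Hypothetical field names for a parsed bibliography entry.
REQUIRED_FIELDS = ("title", "authors", "venue", "year")


def title_similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity score between two titles, ignoring case."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def lint_entry(
    entry: dict,
    metadata: dict | None = None,
    threshold: float = 0.9,
) -> list[str]:
    """Return warnings for one bibliography entry.

    `metadata` is the record a trusted database returned for this entry,
    if the caller has already looked it up.
    """
    warnings = []
    for field in REQUIRED_FIELDS:
        if not entry.get(field):
            warnings.append(f"missing {field}")

    doi = entry.get("doi", "")
    if not doi:
        warnings.append("missing doi")
    elif not DOI_PATTERN.match(doi):
        warnings.append("malformed doi")

    # Compare the cited title with the database title when both exist.
    if metadata is not None and entry.get("title"):
        score = title_similarity(entry["title"], metadata.get("title", ""))
        if score < threshold:
            warnings.append("title does not match database record")
    return warnings
```

A linter like this only narrows the search. An entry that passes still needs a human to open the referenced work, and a warning may simply mean the venue does not issue DOIs.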

What can reviewers and program committees do?

Peer reviewers and program committees must balance thoroughness with limited time. Reasonable mitigations include:

- Clear review instructions to flag unverifiable references and mistaken citations.

- Sampling checks: for papers that are borderline or high-impact, ask reviewers to spot-check a subset of citations.

- Lightweight automated checks in the submission pipeline that identify missing DOIs, suspiciously formatted references, or citations to non-indexed venues.

- Post-acceptance verification: require authors to produce a validated bibliography with DOIs and accessible links as a condition of final publication.

These process changes add friction, but they protect the integrity of the record without imposing unrealistic burdens on reviewers.
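As one way to keep the sampling checks cheap and repeatable, the sketch below picks a small, deterministic subset of a paper's references for a reviewer to verify by hand. The function name, the `paper_id` and `seed` parameters, and the default of five references are assumptions made for illustration, not part of any conference's actual pipeline.

```python
import random


def pick_spot_checks(citations, paper_id, k=5, seed=0):
    """Return sorted indices of up to k citations to verify by hand.

    Seeding with the paper id makes the selection reproducible, so a
    second reviewer or the program committee can re-derive the sample.
    """
    rng = random.Random(f"{seed}:{paper_id}")
    k = min(k, len(citations))
    return sorted(rng.sample(range(len(citations)), k))


if __name__ == "__main__":
    # Hypothetical reference list with 40 entries.
    references = [f"reference {i + 1}" for i in range(40)]
    for index in pick_spot_checks(references, paper_id="paper-17"):
        print("verify:", references[index])
```

Choosing the sample at random, rather than letting reviewers check only the references they recognize, matters because fabricated entries are by construction the unfamiliar ones.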

How should conferences respond to an influx of AI-generated “slop”?

Submission volumes are rising across top AI conferences, which strains reviewing capacity. The community has already debated the effects of a submission tsunami on quality and reviewer burnout. Conference organizers can take strategic steps:

- Integrate automated bibliographic validation into submission systems to catch obvious fabrication early.

- Create public guidance for authors on responsible LLM use, similar to existing policies on data and code sharing.

- Offer optional tools and templates that make correct citation practices simple (e.g., DOI-first workflows).

- Invest in reviewer training and calibration exercises that explicitly include citation verification as a review dimension.

These measures mirror broader recommendations for combating low-quality AI content and align with ongoing conversations about AI slop and quality in research dissemination.

Policy implications: attribution, tools, and transparency

The incident highlights policy gaps around how authors disclose LLM assistance and how publishing platforms validate bibliographic claims. Considerations include:

- Disclosure norms: authors should state when LLMs contributed to drafting or literature synthesis and clarify how citations were sourced.

- Tool certification: publishers and conferences might maintain a registry of approved bibliographic services or citation validators.

- Retraction and correction policies: publishers should offer streamlined pathways for correcting fabricated citations in the scholarly record without penalizing honest mistakes.

These steps promote transparency and help readers evaluate how much of a paper’s narrative was assisted by automated tools versus human-curated sources.

How should the community interpret the broader significance?

The discovery that some accepted NeurIPS papers contained hallucinated citations is a cautionary tale, not a catastrophe. It demonstrates how even expert communities are vulnerable to the subtle failures of LLMs when automation substitutes for verification. The broader lessons are:

- Automation magnifies human workflow weaknesses. If human verification is absent or perfunctory, errors will slip through at scale.

- Small error rates can have reputational consequences if concentrated in high-profile venues.

- Process reforms, rather than moralizing about tool use, are the fastest route to fixing the problem.

These conclusions fit a pattern visible across recent coverage of research practices and conference review pipelines. For broader context on trends in the field and how scaling pressures affect quality, see our analysis of AI Trends 2026.

Recommended checklist for authors, reviewers, and conference organizers

To move from diagnosis to remediation, here is a practical checklist:

- Authors: Verify all references using DOIs or publisher pages; declare any LLM assistance; maintain a versioned bibliography file.

- Coauthors: Assign one team member to audit citations before submission.

- Reviewers: Flag unverifiable or suspicious citations in reviews; request verification from authors when needed.

- Program committees: Add automated citation checks to submission portals and require a validated bibliography at camera-ready stage.

- Publishers: Provide clear correction routes and minimize friction for post-publication updates to references.

Implementing even a subset of these steps will materially reduce the incidence of hallucinated citations while preserving fast, creative uses of LLMs in drafting.

What researchers should learn from this episode

The core takeaway is procedural: openness to automation is compatible with high standards, provided automation is paired with rigorous verification practices. Even top-tier researchers can introduce mistakes when tool use outpaces process safeguards. Building simple, repeatable checks into the authoring workflow protects both the researcher and the community.

Next steps for the community

Addressing hallucinated citations is an opportunity to modernize publication practices for a world where LLMs are common writing partners. Steps we should prioritize now:

- Establish community standards for LLM disclosure in scientific writing.

- Incorporate lightweight bibliographic validation into conference submission systems.

- Share tooling and best practices across institutions so smaller labs aren’t left behind.

Doing so will preserve the speed and creativity LLMs offer while protecting the integrity of the scholarly record.

Closing thoughts and call to action

Hallucinated citations at a premier conference are a wake-up call, not a crisis. They remind us that human judgment must remain part of the research pipeline even as AI becomes a ubiquitous assistant. Authors, reviewers, and organizers all share responsibility: simple, practical process improvements can dramatically reduce error rates and preserve trust in published work.

If you’re an author: implement the checklist above before your next submission. If you’re a reviewer or organizer: advocate for citation validation in your review workflows. For more on protecting research quality from low-quality AI output, read our piece on AI slop and quality control.

Take action: Share this article with your coauthors and program committee, and start a conversation about adding bibliographic validation to your next paper submission process. Together we can keep research rigorous and reliable as tools evolve.