Gemini 3 Release: What Google’s Latest Foundation Model Means for AI

Google has rolled out Gemini 3, its most advanced foundation model to date, making it immediately available in the Gemini app and in Google’s AI-powered search experiences. The launch arrives amid a rapid cadence of frontier model updates across the industry, underscoring a renewed focus on deeper reasoning, developer productivity, and safer research-grade variants.

What is Gemini 3 and why it matters

Gemini 3 represents a deliberate shift toward stronger multi-step reasoning and nuanced responses. Google describes the release as a major capability step up from earlier Gemini generations. The company is also preparing a more research-oriented variant, Gemini 3 Deepthink, which will be available to high-tier subscribers after additional safety validation.

Key signals from the release include:

- Measurable gains on independent reasoning benchmarks, including a record score of 37.4 on a general reasoning assessment.

- Strong ratings on human-preference leaderboards, where users vote on model responses, indicating practical improvements in real-world interactions.

- New developer-facing agentic tools that combine a conversational prompt window with a terminal and a browser preview to automate multi-step coding workflows.

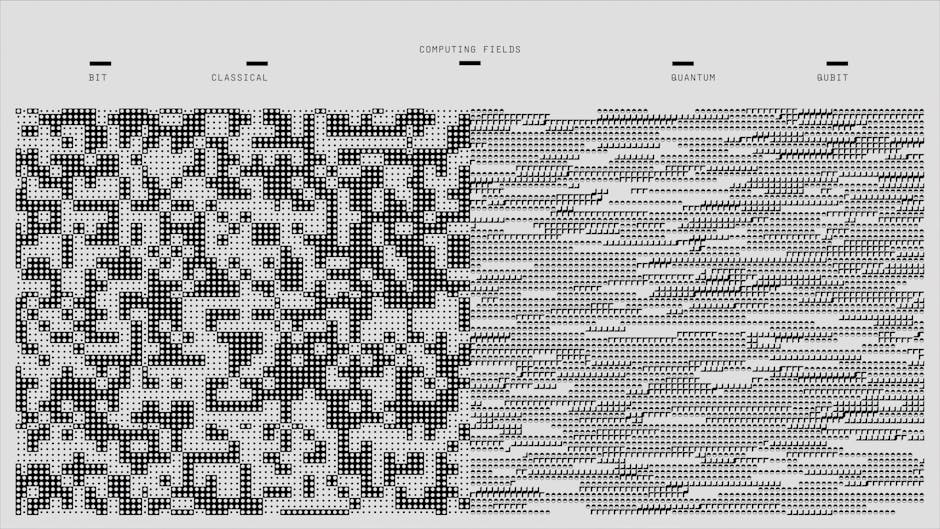

What makes Gemini 3 different from previous LLMs?

The most notable advance in Gemini 3 is its emphasis on reasoning depth over raw scale. Rather than leaning on parameter counts, its architecture and training appear tuned for multi-step inference, contextual consistency, and practical code generation in agentic settings.

Benchmarks and human evaluations

Independent benchmarks already reflect the model’s stronger reasoning profile. Its score of 37.4 on a general reasoning benchmark sets a new high on a test designed to measure domain expertise and complex problem solving. Gemini 3 has also topped human-preference leaderboards, suggesting that the improvements are noticeable to end users and developers, not just on academic tests.

Research and safety posture

Google is releasing a Deepthink edition for more research-focused use cases. That variant will be subject to additional safety testing and governance checks before it reaches subscribers, reflecting a trend where companies provide both production-grade models and constrained research tiers to balance experimentation with risk mitigation.

How does Gemini 3 change developer workflows?

Alongside the base model, Google has launched an agentic coding interface called Antigravity that fuses a conversational prompt window, a terminal, and a browser preview. This multi-pane design lets a single agent edit code, run commands in the terminal, and validate changes in the browser, streamlining the development cycle much like other emerging agentic IDEs.

Developers can expect:

- Smoother contextual handoffs between natural language intent and executable code.

- Faster iteration loops because agents can run tests and show results in-line.

- New possibilities for continuous integration driven by an AI agent that understands the full development context.

Real-world adoption signals

Google reports robust usage of the Gemini ecosystem: hundreds of millions of monthly active users in the app and millions of developers incorporating the model into workflows. Those figures indicate that Gemini 3 is likely to be evaluated not only by researchers, but by production teams that push models into consumer and enterprise products.

How will Gemini 3 impact the broader AI landscape?

The release arrives amid intense competition at the model frontier. Rapid successive updates across major labs highlight a market where capability leads can shift quickly. Practical implications include:

- Renewed pressure on rivals to prioritize reasoning and human-aligned behavior over raw scale.

- Faster adoption of agentic development tools that reduce friction between intent and implementation.

- Increased scrutiny on infrastructure and safety testing as models become more capable.

For organizations planning AI projects, this means reassessing model selection criteria: prioritize models that demonstrably handle complex reasoning and integrate safely with developer pipelines.

What use cases will benefit most from Gemini 3?

Several verticals stand to gain from enhanced reasoning capabilities:

- Knowledge work and research assistants that require synthesis of multi-step arguments.

- Software engineering, where coding agents can automate repetitive tasks and validate changes.

- Customer-facing agents that must handle nuanced, multi-turn problem solving.

- Specialized domains such as law, finance, and scientific discovery where expertise and chain-of-thought reasoning are critical.

Enterprises should pilot Gemini 3 on well-scoped tasks and measure both accuracy and safety metrics before scaling integration.
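As a rough illustration of that pilot step, the Python sketch below aggregates graded pilot interactions into per-model accuracy and safety-flag rates, then applies a simple go/no-go gate. The `PilotResult` schema, the model labels, and the 0.9 accuracy and 1% flag-rate thresholds are hypothetical placeholders rather than Google guidance; how each interaction gets graded (automated check, human reviewer, or safety filter) is left to the team.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    """One graded pilot interaction (hypothetical schema)."""
    model: str             # e.g. "candidate" or "incumbent"
    correct: bool          # passed the task-specific accuracy check
    safety_flagged: bool   # flagged by a reviewer or safety filter


def scorecard(results: list[PilotResult]) -> dict[str, dict[str, float]]:
    """Aggregate accuracy and safety-flag rate per model."""
    counts: dict[str, list[int]] = {}
    for r in results:
        n, correct, flagged = counts.get(r.model, [0, 0, 0])
        # bools add as 0/1, so these are running counts
        counts[r.model] = [n + 1, correct + r.correct, flagged + r.safety_flagged]
    return {
        model: {"accuracy": correct / n, "safety_flag_rate": flagged / n}
        for model, (n, correct, flagged) in counts.items()
    }


def ready_to_scale(metrics: dict[str, float],
                   min_accuracy: float = 0.9,
                   max_flag_rate: float = 0.01) -> bool:
    """Go/no-go gate; both thresholds are hypothetical and task-specific."""
    return (metrics["accuracy"] >= min_accuracy
            and metrics["safety_flag_rate"] <= max_flag_rate)
```

Keeping accuracy and safety in one scorecard makes it harder to scale a model that is more accurate but also flagged more often.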

How should product and engineering teams prepare?

Adopting a new high-reasoning model calls for deliberate engineering and governance steps:

- Run A/B comparisons against incumbent models using both automated benchmarks and human evaluation.

- Instrument agentic workflows to monitor for unintended actions or hallucinations.

- Layer safety filters, prompt engineering guards, and clear rollback procedures for production use.

- Engage compliance and legal teams early when deploying agents that can act across terminals and browsers.
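One way to instrument an agent that can act in a terminal is to route every proposed command through a gate that logs the decision, blocks anything outside an allowlist, and escalates sensitive operations to a human. The sketch below assumes the agent hands you a command string and that approved commands are later executed without a shell (for example `subprocess.run(argv, shell=False)`). The allowlist, the approval set, and the `approve` callback are hypothetical stand-ins for your own policy; none of this is part of Antigravity or any Gemini API.

```python
import logging
import shlex
from typing import Callable

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("agent_audit")

# Hypothetical policy: programs the agent may run at all.
ALLOWED_PROGRAMS = {"pytest", "ls", "cat", "git"}
# Hypothetical policy: (program, subcommand) pairs that need a human reviewer.
NEEDS_APPROVAL = {("git", "push"), ("git", "reset")}
# Characters that would let a shell chain, redirect, or substitute commands.
SHELL_METACHARS = set(";&|<>`$")


def gate_command(command: str, approve: Callable[[str], bool]) -> bool:
    """Return True if the agent's proposed command may run; log every decision."""
    if any(ch in SHELL_METACHARS for ch in command):
        audit_log.warning("BLOCK (shell metacharacters): %r", command)
        return False
    try:
        argv = shlex.split(command)
    except ValueError:  # e.g. an unterminated quote
        audit_log.warning("BLOCK (unparseable): %r", command)
        return False
    if not argv or argv[0] not in ALLOWED_PROGRAMS:
        audit_log.warning("BLOCK (not allowlisted): %r", command)
        return False
    if tuple(argv[:2]) in NEEDS_APPROVAL:
        approved = approve(command)  # human-in-the-loop decision
        audit_log.info("%s (human review): %r",
                       "ALLOW" if approved else "BLOCK", command)
        return approved
    audit_log.info("ALLOW: %r", command)
    return True
```

Rejecting shell metacharacters outright is deliberately conservative: it stops an allowlisted program from being chained to an arbitrary one (`pytest && rm -rf /`), at the cost of also blocking legitimate pipelines.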

Teams that have already explored agentic approaches or optimized inference and memory systems should find the migration smoother. For background on stateful models and long-term context handling in applications, see our analysis AI Memory Systems: The Next Frontier for LLMs and Apps.

Is Gemini 3 safe enough for research and production?

Safety is a continuum, not a single checkpoint. Google’s staged approach, releasing a production-ready base model while gating the more research-oriented Deepthink edition behind extra safety checks, reflects industry best practice for incremental access and monitoring. Organizations should align access levels with their risk tolerance and the criticality of each use case.

Recommended safety steps

- Start with sandboxed experiments before any outward-facing deployments.

- Use human-in-the-loop review for high-risk outputs.

- Track model drift and performance regressions as prompt patterns change.
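Tracking drift can start as simply as comparing a rolling mean of an evaluation score against the score recorded at launch. The Python sketch below assumes each sampled production response already receives a score between 0 and 1 from some grader; the baseline, window size, and tolerance are hypothetical values to tune per task.

```python
from collections import deque
from statistics import fmean


class DriftMonitor:
    """Flag a regression when the rolling mean score falls below baseline.

    baseline, window and tolerance are hypothetical, task-specific settings.
    """

    def __init__(self, baseline: float, window: int = 50, tolerance: float = 0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores: deque[float] = deque(maxlen=window)  # keeps only the last `window` scores

    def record(self, score: float) -> bool:
        """Add a score in [0, 1]; return True if drift is detected."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # wait for a full window before alerting
        return fmean(self.scores) < self.baseline - self.tolerance
```

Waiting for a full window avoids alarms from the first few noisy samples. A real deployment would likely also segment scores by prompt type, so that a shift in traffic mix is not mistaken for a model regression.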

How does this fit into the wider trend of model development?

The Gemini 3 release reinforces several broader industry trends: capability-focused research, agentic developer tooling, and stratified model access. If you’re watching market dynamics and wondering what’s next, our recent piece on the future of scaling models offers a strategic lens on where research and product priorities are heading: The Future of AI: Beyond Scaling Large Language Models. For a view on whether the LLM surge is sustainable, see our analysis on the LLM market outlook: Is the LLM Bubble Bursting? What Comes Next for AI.

Bottom line: who should care about the Gemini 3 release?

Product leaders, platform engineers, and developers building intelligent agents should pay close attention. Gemini 3’s improvements in reasoning and the introduction of an integrated agentic coding interface lower the friction between idea and execution, creating opportunities to ship more autonomous development workflows and sophisticated end-user experiences. But with greater capability comes heightened responsibility: adopt staged rollouts and robust safety practices.

Next steps and recommended actions

To make the most of Gemini 3 while managing risk:

- Run targeted pilots that compare Gemini 3 to your current models on task-specific benchmarks and user satisfaction.

- Evaluate the agentic tooling on non-production projects to refine guardrails and integration patterns.

- Design monitoring and human review for any high-impact deployment.
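For side-by-side pilots, user satisfaction is often collected as pairwise judgments (which of two responses is better). The sketch below turns a candidate model's win/loss counts against an incumbent into a win rate with a Wilson score interval, and only declares a winner when the interval excludes 50%. Dropping ties, the 95% level (`z = 1.96`), and the verdict labels are assumptions made for illustration.

```python
import math


def win_rate_with_ci(wins: int, losses: int, z: float = 1.96) -> tuple[float, float, float]:
    """Candidate's win rate over the incumbent with a Wilson score interval.

    wins/losses count pairwise human judgments; ties are assumed to be dropped.
    z = 1.96 corresponds to roughly 95% confidence.
    """
    n = wins + losses
    if n == 0:
        raise ValueError("need at least one non-tied comparison")
    p = wins / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return p, center - half_width, center + half_width


def verdict(wins: int, losses: int) -> str:
    """Declare a winner only when the interval excludes a 50% win rate."""
    _, low, high = win_rate_with_ci(wins, losses)
    if low > 0.5:
        return "candidate preferred"
    if high < 0.5:
        return "incumbent preferred"
    return "inconclusive"
```

With 60 wins out of 100 non-tied comparisons, for example, the interval runs from roughly 0.50 to 0.69, a reminder that small pilots often cannot cleanly separate two strong models.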

Conclusion — a pragmatic view of capability and caution

Gemini 3 is a clear signal that frontier models are shifting emphasis toward deeper reasoning and agentic workflows. Organizations that combine experimentation with rigorous safety practices can extract practical value quickly, while those that move too fast without governance risk downstream harms. The immediate opportunity is to build smarter, more autonomous developer experiences while investing in the controls that make them sustainable.

Call to action

Want help evaluating Gemini 3 for your product or engineering stack? Subscribe to Artificial Intel News for ongoing analysis, benchmarking guides, and implementation best practices — and download our checklist for safe agentic AI deployments to get started.