Enterprise AI Intelligence Layer: Why neutral AI infrastructure matters

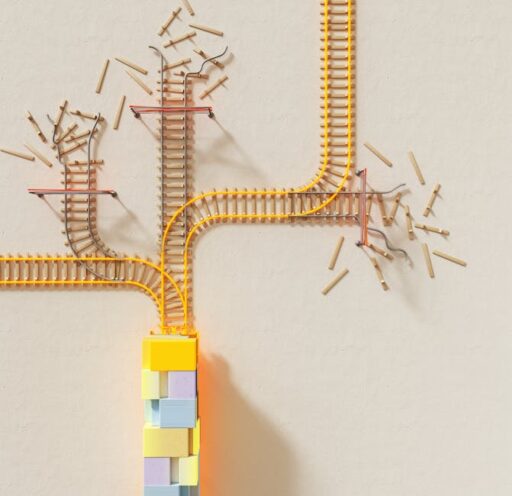

The battle for enterprise AI has moved beyond flashy assistants and single-vendor chatbots. Organizations now need an underlying intelligence layer: neutral middleware that connects large language models and generative systems to corporate data, applications, and access controls. This layer is not just another chatbot. It is the connective tissue that enables secure, auditable, and flexible AI at scale.

What is an enterprise AI intelligence layer?

An enterprise AI intelligence layer is a software tier that mediates between general AI models and a company’s internal systems. It maps people, permissions, and workflows; integrates deeply with SaaS tools and data stores; routes requests to the right models; and enforces governance and citation rules. In short, it turns generic model reasoning into reliable, context-aware enterprise actions.

Core responsibilities of the intelligence layer

- Context mapping: understand users, teams, and organizational data topology.

- Model orchestration: select, combine, and swap model providers without breaking integrations.

- Deep connectors: integrate with collaboration, ticketing, CRM, and document systems.

- Permissions-aware retrieval: return only the information a user is authorized to see.

- Evidence and citation: verify model outputs against source documents and generate references.

- Auditability and governance: log interactions, enforce policies, and enable compliance reviews.

Why do enterprises need a neutral intelligence layer?

There are three practical reasons enterprises benefit from a neutral AI layer:

1. Avoid vendor lock-in

Leading model providers innovate quickly. A neutral layer enables organizations to switch between or combine models as features evolve, without rewriting connectors or rebuilding context maps. This flexibility protects long-term investments and allows IT teams to adopt best-of-breed capabilities while keeping integrations stable.

2. Operationalize governance and compliance

Large organizations cannot simply dump internal data into a model and hope for secure outcomes. A governance-first middleware ensures that retrieval respects access policies, that responses are verifiable, and that logs support audits and data-residency requirements. This permissions-aware approach turns risky pilots into deployable solutions.

3. Make models useful for business workflows

Models are powerful but generic. The intelligence layer provides the missing business context (product knowledge, team roles, and workflow semantics) so AI can perform real tasks like drafting customer responses, creating Jira tickets with the right metadata, or summarizing confidential reports with proper citations.

How does an intelligence layer work in practice?

At a high level, the layer implements three integrated capabilities: model access, connectors, and governance. Each plays a distinct role, but they must operate together to create trustworthy AI-enabled workflows.

Model access and orchestration

Rather than hard-coding a single model, the intelligence layer acts as an abstraction over model providers. It routes each request to a provider based on cost, latency, capability, and data sensitivity. Orchestration can include:

- Selection rules (e.g., low-cost model for simple summaries, higher-capability model for long-form reasoning).

- Fallbacks and ensembles (retry with another provider when one fails, or combine outputs to improve accuracy).

- Runtime swapping (switch providers without breaking downstream connectors).
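
The selection and fallback rules above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference design: the `ModelProvider`, `Request`, `eligible_providers`, and `route` names, the capability scale, and the prices are all hypothetical, and the stub functions stand in for real provider API calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class ModelProvider:
    name: str
    cost_per_1k_tokens: float       # illustrative price, not a real quote
    capability: int                 # 1 = simple summaries, 3 = long-form reasoning
    handles_sensitive_data: bool    # approved for confidential content
    complete: Callable[[str], str]  # stand-in for a provider API call


@dataclass(frozen=True)
class Request:
    prompt: str
    required_capability: int
    sensitive: bool


def eligible_providers(request: Request, providers: List[ModelProvider]) -> List[ModelProvider]:
    """Selection rule: keep providers that meet the request, cheapest first."""
    candidates = [
        p for p in providers
        if p.capability >= request.required_capability
        and (p.handles_sensitive_data or not request.sensitive)
    ]
    return sorted(candidates, key=lambda p: p.cost_per_1k_tokens)


def route(request: Request, providers: List[ModelProvider]) -> Tuple[str, str]:
    """Fallback: try eligible providers in cost order until one succeeds."""
    errors = []
    for provider in eligible_providers(request, providers):
        try:
            return provider.name, provider.complete(request.prompt)
        except Exception as exc:  # a real layer would catch narrower errors
            errors.append(f"{provider.name}: {exc}")
    raise RuntimeError("no eligible provider succeeded: " + "; ".join(errors))


# Stub providers (hypothetical) so the sketch runs without network access.
def _small(prompt: str) -> str:
    return f"[small] {prompt}"


def _flaky(prompt: str) -> str:
    raise TimeoutError("provider timed out")


def _large(prompt: str) -> str:
    return f"[large] {prompt}"


PROVIDERS = [
    ModelProvider("small-model", 0.10, 1, False, _small),
    ModelProvider("flaky-model", 0.50, 3, True, _flaky),
    ModelProvider("large-model", 2.00, 3, True, _large),
]

print(route(Request("Summarize this memo", 1, False), PROVIDERS))
print(route(Request("Analyze this contract", 3, True), PROVIDERS))
```

The first request goes to the cheapest provider that qualifies. The second skips the small model because the request is sensitive, tries the flaky provider, and falls back to the large one when it times out. Callers only see `route`, so swapping a provider means editing the `PROVIDERS` list rather than any downstream integration.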

Deep connectors and context mapping

Connectors turn scattered enterprise data into usable context. The intelligence layer must integrate with collaboration tools, ticketing systems, cloud drives, and CRM platforms to map where information lives and how it flows between teams. Deep integration enables agents to act inside those tools while preserving metadata, history, and audit trails.
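
One way to picture a connector is as an adapter that converts each tool's records into a shared shape while keeping the metadata the rest of the layer depends on. The sketch below assumes a hypothetical `ContextRecord` type, a `Connector` protocol, and an in-memory ticket source with made-up field names. A real connector would call the source system's API instead.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, Iterator, List, Protocol, Tuple


@dataclass(frozen=True)
class ContextRecord:
    """Normalized record that keeps provenance and access metadata."""
    source_system: str
    record_id: str
    text: str
    allowed_roles: FrozenSet[str]  # who may see this record
    last_modified: str             # timestamp as reported by the source
    url: str                       # link back to the original item


class Connector(Protocol):
    """Anything with a source name and a fetch() method counts as a connector."""
    source_system: str

    def fetch(self) -> Iterable[ContextRecord]: ...


class InMemoryTicketConnector:
    """Hypothetical connector over a list of ticket dicts."""
    source_system = "ticketing"

    def __init__(self, tickets: List[dict]) -> None:
        self._tickets = tickets

    def fetch(self) -> Iterator[ContextRecord]:
        for ticket in self._tickets:
            yield ContextRecord(
                source_system=self.source_system,
                record_id=ticket["id"],
                text=ticket["summary"],
                allowed_roles=frozenset(ticket["teams"]),
                last_modified=ticket["updated"],
                url=ticket["url"],
            )


def build_index(connectors: Iterable[Connector]) -> Dict[Tuple[str, str], ContextRecord]:
    """Collect records from every connector, keyed by (system, record id)."""
    index: Dict[Tuple[str, str], ContextRecord] = {}
    for connector in connectors:
        for record in connector.fetch():
            index[(record.source_system, record.record_id)] = record
    return index
```

Because every record carries its source, permissions, timestamp, and link, later stages can filter by access and cite the original item without going back to the tool.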

For practical examples and best practices on agent management and integrations, see our coverage in "AI Agent Management Platform: Enterprise Best Practices" and our look at how enterprise agents are reshaping the startup landscape in "Enterprise AI Agents: The Next Big Startup Opportunity."

Permissions-aware retrieval and governance

Retrieval must be identity-aware. The intelligence layer evaluates who is asking, what they can access, and what context is required to answer. Policies dictate whether certain sources are eligible for retrieval or whether content must be redacted. Governance features include:

- Access filters based on roles and document policies.

- Line-by-line citations and source linking for transparency.

- Automated logging and retention controls for audits.
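
The access filter and logging steps above might look like the following sketch. It assumes a simple role-based model in which each document lists the roles allowed to read it. The `Document`, `User`, `AuditLog`, and `retrieve` names are hypothetical, and the keyword match is a placeholder for whatever search the layer actually uses.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import FrozenSet, List


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: FrozenSet[str]


@dataclass(frozen=True)
class User:
    user_id: str
    roles: FrozenSet[str]


@dataclass
class AuditLog:
    entries: List[dict] = field(default_factory=list)

    def record(self, user: User, query: str, returned: List[str], denied_count: int) -> None:
        """Append one audit entry per retrieval call."""
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user.user_id,
            "query": query,
            "returned": returned,
            "denied_count": denied_count,
        })


def retrieve(user: User, query: str, index: List[Document], log: AuditLog) -> List[Document]:
    """Return matching documents the user may read, and log the decision."""
    terms = query.lower().split()
    # Placeholder relevance check: any query term appears in the text.
    matches = [d for d in index if any(t in d.text.lower() for t in terms)]
    # Access filter: the user must share at least one role with the document.
    visible = [d for d in matches if user.roles & d.allowed_roles]
    log.record(user, query, [d.doc_id for d in visible], len(matches) - len(visible))
    return visible
```

The filter runs before any text is handed to a model, so content the user cannot see never enters a prompt. The log records how many matches were withheld without recording which ones, which is one possible trade-off between auditability and leaking document identifiers.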

What are the common implementation patterns?

Enterprises typically adopt one of the following patterns depending on maturity and risk appetite:

1. In-house middleware

Build a custom layer tailored to existing systems and compliance needs. This gives maximum control but requires engineering resources and ongoing maintenance.

2. Neutral third-party layer

Use a vendor-neutral platform that provides connectors, model orchestration, and governance out of the box. This accelerates deployment and reduces integration burden while preserving the ability to switch model providers.

3. Integrated vendor stack

Adopt a single vendor’s assistant and ecosystem. This can simplify rollout but risks vendor lock-in and may limit flexibility to combine models or enforce custom governance policies.

How do you stop hallucinations and ensure accuracy?

Hallucinations remain a core operational risk. A robust intelligence layer minimizes this risk with a combination of verification strategies:

- Source grounding: verify generated claims against indexed documents and return citations.

- Answer synthesis with evidence: include line-by-line references linked to documents.

- Human-in-the-loop workflows: flag uncertain responses for review before finalizing actions.

- Output checks: apply rule-based validators to filter implausible or sensitive responses.
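
As a rough illustration of source grounding combined with a human-review flag, the sketch below scores each claim by the share of its words that appear in a source document. The word-overlap score and the 0.6 threshold are assumptions chosen to keep the example small. They do not catch negation or paraphrase, and a production layer would likely use a stronger verifier. The `Citation`, `ground_claim`, and `review_answer` names are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Citation:
    claim: str
    source_id: Optional[str]  # None means no source supported the claim
    score: float              # best share of claim words found in one source


def _tokens(text: str) -> Set[str]:
    """Lowercase alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def ground_claim(claim: str, sources: Dict[str, str], threshold: float = 0.6) -> Citation:
    """Cite the source that covers the largest share of the claim's words."""
    claim_tokens = _tokens(claim)
    if not claim_tokens:
        return Citation(claim, None, 0.0)
    best_id: Optional[str] = None
    best_score = 0.0
    for source_id, text in sources.items():
        score = len(claim_tokens & _tokens(text)) / len(claim_tokens)
        if score > best_score:
            best_id, best_score = source_id, score
    if best_score < threshold:
        best_id = None  # too weak to count as evidence
    return Citation(claim, best_id, best_score)


def review_answer(
    claims: List[str], sources: Dict[str, str], threshold: float = 0.6
) -> Tuple[List[Citation], bool]:
    """Ground every claim; flag the answer for human review if any is unsupported."""
    citations = [ground_claim(c, sources, threshold) for c in claims]
    needs_review = any(c.source_id is None for c in citations)
    return citations, needs_review
```

An answer whose claims all map to a source can be returned with its citations attached, while any unsupported claim sends the whole answer to a reviewer before an action is finalized.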

What should leaders ask before deploying a layer?

Decision-makers should evaluate potential intelligence layers against operational, security, and strategic criteria. Key questions include:

- Does the platform respect role-based access and regulatory requirements?

- Can it integrate with our core systems (ticketing, CRM, drive, chat) with deep connectors?

- Are model selection and switching simple and auditable?

- How does it present evidence and citations for generated outputs?

- What are the logging, retention, and audit capabilities?

Deployment checklist

- Inventory sensitive data sources and classify access rules.

- Map critical workflows where AI can add measurable value.

- Choose connectors and define integration scope for the pilot.

- Define governance policies and citation requirements before training or indexing.

- Run small pilots with human review and measure accuracy and time savings.

Can platform giants displace a neutral intelligence layer?

Large platform vendors control many user touchpoints and may integrate AI more deeply into productivity apps. However, enterprises frequently prefer neutral infrastructure that preserves choice, meets compliance needs, and integrates with heterogeneous stacks. A neutral layer that standardizes connectors, access rules, and evidence generation can coexist with platform assistants by serving as the authoritative source of enterprise context and governance logic.

Risks and challenges to plan for

Building or adopting an intelligence layer is not without challenges. Common pitfalls include:

- Underestimating the effort required to build reliable connectors and context maps.

- Failing to align stakeholders across security, legal, and business teams early in the project.

- Over-relying on a single model provider without fallback or auditing mechanisms.

- Setting insufficient logging and retention policies, which complicates compliance reviews.

Where to start: practical next steps

Start with a narrowly scoped pilot that targets a high-value workflow. Use the pilot to validate model routing, test permissions-aware retrieval, and iterate on citation formats. As the pilot proves value, expand connectors and automate more tasks while preserving manual review where risk remains high.

For a deeper look at security patterns for agentic systems, including preventing rogue agents, see our analysis in "Agentic AI Security: Preventing Rogue Enterprise Agents."

Conclusion

An enterprise AI intelligence layer is becoming essential infrastructure for organizations that want to employ generative models responsibly and productively. By combining model orchestration, deep connectors, and permissions-aware governance, neutral middleware turns generic AI capabilities into business-ready tools. Companies that invest in this layer now will gain flexibility, a stronger compliance posture, and the ability to scale AI across complex enterprise workflows.

Take action

Ready to evaluate an intelligence layer for your organization? Start by mapping the top three workflows where AI could save the most time and identifying the data sources those workflows depend on. If you want a checklist and a deployment playbook for a pilot, subscribe to our newsletter or contact our editorial team for a step-by-step guide.