India’s $200B AI Infrastructure Push: Strategy, Incentives, and Risks

India has launched an ambitious effort to attract more than $200 billion in artificial intelligence infrastructure investment over the next two years, aiming to position the country as a global hub for AI compute, services, and applications. This plan combines tax breaks, state-backed venture capital, expanded shared compute, and targeted policy changes designed to draw data centers, semiconductor capacity, and AI product development onto Indian soil.

What is India’s plan to attract $200 billion in AI investment?

The government’s multi-pronged strategy rests on three core pillars: building compute capacity, catalyzing capital flows, and enabling applied AI innovation. Key elements include:

- Tax and fiscal incentives to lower operating costs for hyperscale data centers and chip fabs.

- State-backed venture and credit facilities that de-risk early-stage deep-tech investments and infrastructure projects.

- Expanded shared compute through national missions to provide GPUs and other accelerators to researchers and startups.

- Regulatory clarity and streamlined approvals to accelerate construction and reduce time-to-market.

The government has signaled immediate actions to expand public compute pools beyond the existing capacity of tens of thousands of GPUs, while also preparing a second phase of the national AI mission centered on research, innovation, and broader access to shared resources.

Why now? Strategic timing and global context

Several trends make this moment critical for India’s ambitions. First, global demand for AI compute is surging, driven by large foundation models, multimodal systems, and enterprise AI adoption. Second, supply-chain reconfiguration and geopolitical considerations are prompting companies to diversify data center and chip investments beyond a handful of regions. Third, rising costs and capacity constraints in traditional hubs are creating openings for new entrants that can offer scale, competitive pricing, and supportive policy frameworks.

India’s pitch to investors is that it can combine a large, skilled engineering workforce with cost advantages, policy incentives, and a growing cloud ecosystem—creating an attractive alternative for companies seeking to expand AI compute footprints.

How will the $200B break down?

While specific allocations will evolve, the government and industry stakeholders are signaling a split roughly along two lines:

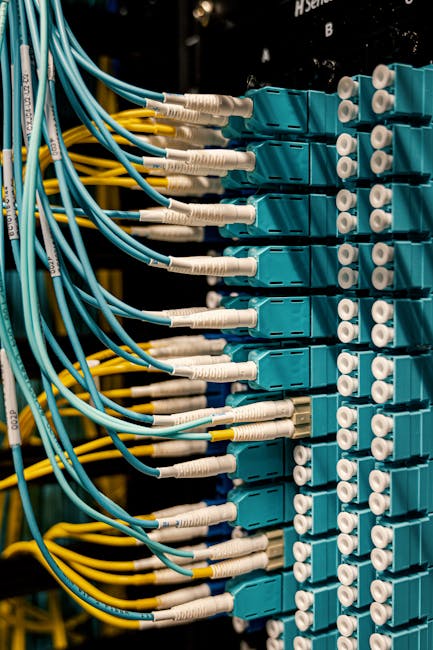

- AI infrastructure (majority): Data centers, networking, power and cooling, semiconductor fabs and packaging, and supporting logistics. A significant share covers hyperscale builds and regional cloud expansion.

- Deep-tech and applied AI (smaller but strategic): Startups, R&D labs, model development, and enterprise AI implementations aimed at capturing higher value-add beyond raw compute.

Estimates suggest about $70 billion of the projected sum reflects investments already announced or committed by large cloud providers and technology firms. The remaining capital, roughly $130 billion or close to two-thirds of the $200 billion target, would go to new builds, domestic semiconductor capacity, and venture funding for AI-first companies.

What incentives and policy tools are being offered?

To convert ambition into investments, India plans a coordinated set of incentives and policy tools, including:

- Tax holidays and accelerated depreciation for data center equipment.

- Higher revenue thresholds and extended benefits for qualifying AI startups to foster scale and longevity.

- Direct funding and matching grants for high-risk deep-tech research and capital-intensive projects.

- Streamlined land, power, and water procurement processes for hyperscale facilities.

These measures are intended to reduce both capital expenditure and operating cost hurdles, while encouraging venture capital and corporate funds to back domestic AI product development.

How will India expand shared compute for researchers and startups?

Central to the plan is broadening access to shared compute clusters: national GPU pools that researchers, universities, and startups can use on demand. Recent steps have increased the number of publicly available GPUs, and further additions are planned in the coming weeks to accelerate model training and applied experiments. The second phase of the national AI mission will emphasize R&D grants, model development ecosystems, and the diffusion of tools to non-technical sectors.

Benefits of shared compute

- Lower entry barriers for startups that cannot afford private clusters.

- Faster research cycles for academic and industrial labs.

- More equitable geographic distribution of AI development beyond major tech hubs.

What are the main execution challenges?

Compressing years of infrastructure build-out into a short time frame presents practical and environmental challenges. The primary constraints include:

- Power availability and reliability: AI data centers are energy-intensive; ensuring steady, high-capacity power without destabilizing local grids is essential.

- Water for cooling: Many cooling approaches require substantial water; siting facilities where water resources and sustainability considerations align is critical.

- Land, permits, and logistics: Procuring suitable land and securing approvals quickly for large campuses can be a bottleneck.

- Skilled personnel: Building and operating advanced data centers and semiconductor lines requires specialized talent that must be scaled.

Addressing these constraints will require coordinated planning across central and state governments, utilities, and private partners to align incentives with sustainable infrastructure choices.

How is sustainability being considered?

Officials are emphasizing India’s growing clean-power mix as a competitive advantage. With a rising share of solar, wind, and other low-carbon generation, the government argues that new data centers can tap greener energy sources—reducing lifecycle emissions for AI compute operations. However, sustainable deployments will require:

- Grid upgrades and energy-storage solutions to manage intermittent renewables.

- Innovations in cooling that reduce water use and improve energy efficiency.

- Transparent reporting and incentive structures that reward lower-carbon compute footprints.

What does this mean for startups and investors?

For venture capitalists and founders, India’s push signals an opportunity to capture higher-value parts of the AI value chain. Policy changes that raise startup revenue thresholds and extend support are meant to help early-stage companies grow into larger businesses. Investors will be watching whether the policy package shortens time-to-market and materially lowers costs compared with other regions.

Startups focused on domain-specific AI, edge deployment, enterprise automation, and chip-software co-design stand to benefit most if public compute, funding, and procurement policies align.

How will global cloud and AI firms play a role?

Global cloud providers have already announced capacity expansions in India, creating a foundation for further investment. Those companies bring capital, systems expertise, and enterprise sales channels, but India also aims to bring more of the value chain onshore by incentivizing chip manufacturing, local R&D, and startup ecosystems that build on shared compute.

Which related developments should readers watch?

Key items to monitor over the next 6–24 months include:

- New announcements of hyperscale data center campuses and cloud regions.

- Final rules on tax incentives, land allocation, and startup benefits.

- Progress on shared compute availability and the launch of second-phase national AI initiatives.

For deeper context on government incentives and the broader policy conversation, see our coverage in "India AI Data Centers: Tax Incentives to Drive Cloud Growth" and "AI Impact Summit India: Driving Investment & Policy." For analysis of capital flows into AI infrastructure, refer to "AI Data Center Spending: Are Mega-Capex Bets Winning?"

Recommendations: How policymakers and industry can increase the odds of success

To translate ambition into durable outcomes, the following actions will be important:

- Coordinate national and state planning for power, water, and logistics to avoid local bottlenecks.

- Design time-bound incentives that reward sustainable practices and long-term domestic value creation.

- Invest in workforce development programs focused on data center operations, chip packaging, and AI engineering.

- Scale shared compute and research grants to lower barriers for startups and academic labs.

- Encourage public-private partnerships to share risk on capital-intensive projects like fabs and hyperscale campuses.

Conclusion

India’s $200 billion AI infrastructure push is a strategic bid to capture a larger share of the global AI economy. If implemented effectively, it could accelerate domestic AI capabilities, create jobs, and diversify global compute capacity. Execution risks are real: power, water, permitting, and workforce constraints will test timelines. Even so, the combination of incentives, public compute expansion, and a robust startup ecosystem could make India a major node in the next phase of AI infrastructure.

Call to action

Stay informed as this story develops: subscribe to Artificial Intel News for timely analysis on AI infrastructure, policy, and investment. If you’re a founder, investor, or policymaker working in AI infrastructure in India, contact our editorial team to share updates or insights that help our readers understand the on-the-ground reality.