AI-Assisted Coding Hits a Tipping Point Today

Earlier this year, Spotify announced an internal shift that many engineering and product leaders are calling a watershed moment for software development: senior engineers at the company have reportedly stepped back from traditional hands-on coding, relying instead on an AI-driven workflow that automates significant portions of development and deployment. The company reports faster feature delivery and a new focus on how proprietary datasets and model retraining can fuel competitive differentiation.

Has AI-assisted coding reached a tipping point?

Short answer: possibly. The Spotify example shows a concrete production use case in which generative AI models are embedded throughout the developer lifecycle, from bug fixes to pushing test builds remotely. That progression from experimental tooling to everyday engineering assistance is what people mean by a “tipping point”: AI moves from helper to workflow enabler.

What changed at Spotify

According to the company, its engineering teams use an internal system that orchestrates AI-assisted code generation, testing, and remote deployment. The platform lets engineers request fixes or features, even from a mobile device, and receive runnable app builds they can review and merge. Spotify credits the system with helping ship dozens of product updates in the last year, including AI-driven features for audiobooks and other content-specific capabilities.

Why this matters for product velocity

AI-assisted coding can accelerate iteration in three tangible ways:

- Faster triage and fixes: Automated diagnosis and patch generation reduce mean time to resolution.

- Automated build and test cycles: Generative models can create code and trigger continuous integration flows, delivering testable artifacts quickly.

- Higher throughput for product teams: Product managers can request small feature changes without lengthy handoffs, compressing the feedback loop between idea and shipped feature.

These are not futuristic promises. They are productivity gains that can be observed when a company integrates AI into its engineering rituals and CI/CD pipelines.

How does AI-assisted coding actually work in production?

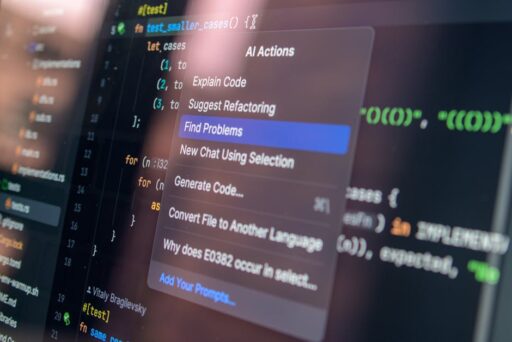

At its core, modern AI-assisted coding combines several components:

- Prompted code generation: Developers describe the intention (bug fix, feature, refactor) in natural language and receive candidate code snippets.

- Automated testing and verification: Generated code is run against unit and integration tests or specialized static analysis to filter unsafe changes.

- Sandboxed deployments: The system builds pre-release artifacts and distributes them to reviewers for QA, often via chat or internal tooling.

- Human-in-the-loop merges: Engineers retain final approval, merging vetted changes into production when satisfied.

Combined, these steps convert human intent into safe, testable code faster than traditional cycles, while still preserving engineering oversight.
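The four components above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not a description of Spotify's internal system: `run_pipeline`, `Stage`, `Change`, and every stand-in function below are hypothetical names, the "model" is a stub that returns a fixed patch, and the checks are deliberately trivial.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class Stage(Enum):
    GENERATED = "generated"
    REJECTED_BY_CHECKS = "rejected_by_checks"
    AWAITING_REVIEW = "awaiting_review"
    MERGED = "merged"
    DECLINED = "declined"


@dataclass
class Change:
    request: str                      # natural-language intent from the developer
    patch: str                        # candidate code returned by the generator
    stage: Stage = Stage.GENERATED
    log: List[str] = field(default_factory=list)


def run_pipeline(
    request: str,
    generate: Callable[[str], str],
    checks: List[Callable[[str], bool]],
    build_preview: Callable[[str], str],
    approve: Callable[[Change, str], bool],
) -> Change:
    # 1. Prompted code generation
    change = Change(request=request, patch=generate(request))
    change.log.append("generated candidate patch")

    # 2. Automated testing and verification: stop at the first failing check
    for check in checks:
        if not check(change.patch):
            change.stage = Stage.REJECTED_BY_CHECKS
            change.log.append(f"failed check: {check.__name__}")
            return change
    change.log.append("all automated checks passed")

    # 3. Sandboxed deployment: build a pre-release artifact for reviewers
    artifact = build_preview(change.patch)
    change.stage = Stage.AWAITING_REVIEW
    change.log.append(f"built preview artifact: {artifact}")

    # 4. Human-in-the-loop merge: nothing merges without explicit approval
    if approve(change, artifact):
        change.stage = Stage.MERGED
        change.log.append("approved and merged by reviewer")
    else:
        change.stage = Stage.DECLINED
        change.log.append("declined by reviewer")
    return change


# Hypothetical stand-ins so the sketch runs without a model or a CI system.
def fake_generate(request: str) -> str:
    return f"# change requested: {request}\nprint('patched')\n"


def compiles(patch: str) -> bool:
    try:
        compile(patch, "<patch>", "exec")
        return True
    except SyntaxError:
        return False


def no_eval_calls(patch: str) -> bool:
    return "eval(" not in patch


def fake_build_preview(patch: str) -> str:
    return f"preview-build-{len(patch)}"


def reviewer_approves(change: Change, artifact: str) -> bool:
    return True


result = run_pipeline(
    "fix crash on empty playlist",
    fake_generate,
    [compiles, no_eval_calls],
    fake_build_preview,
    reviewer_approves,
)
print(result.stage.value)
for entry in result.log:
    print("-", entry)
```

The design choice worth noting is that the approval callback is the only path to the merged state, so automation can reject a change on its own but cannot ship one.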

What unique advantages do content companies have with AI datasets?

One striking takeaway from Spotify’s approach is the competitive value of proprietary, domain-specific datasets. For music and audio platforms, there are countless subjective and context-dependent questions — what makes good workout music, which tracks fit a language-specific playlist, or how fans react to a remix. These signals come from user behavior, editorial curation, and content metadata that generic public datasets do not capture at scale.

When platforms train or fine-tune models on that internal data, they create AI behaviors and recommendations tailored to their audiences. Those behaviors can be harder for third parties to replicate than the output of models trained only on broadly available data.

Examples of dataset-driven advantage

- Personalized audio features that reflect regional and cultural preferences.

- Editorially informed recommendations that blend user behavior with human curation.

- Improved content search and intent understanding for niche queries that lack single factual answers.

What are the risks and governance challenges?

Rapid adoption of AI-assisted coding introduces governance, safety, and operational risks that organizations must address deliberately:

- Quality regressions: If automated code generation bypasses rigorous testing, subtle bugs may reach production.

- Security exposure: Generated code could unintentionally introduce vulnerabilities or copy patterns that violate licensing constraints.

- Skill atrophy: Over-reliance on automation may erode developers’ deep knowledge of systems architecture over time.

- Model drift and bias: Proprietary datasets can amplify local biases or stale assumptions unless models are retrained and audited regularly.

- Operational complexity: Integrating AI into CI/CD and deployment pipelines requires engineering investment and clear rollback procedures.

Best practices to manage AI-assisted coding safely

- Keep humans in the loop for code review and final merges.

- Require automated tests, security scans, and reproducible builds before merging AI-generated changes.

- Maintain an auditable trail of prompts, generated artifacts, and model versions for compliance and debugging.

- Train teams on emergent risks and encourage pair-programming sessions to preserve institutional knowledge.
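As a rough sketch of how the merge requirements and the audit trail might fit together, the example below gates a merge on required checks plus a named human reviewer, then serializes one audit entry as a JSON line. The check names, `may_merge`, `make_record`, and the record fields are assumptions made for illustration; a real system would take them from its own CI and compliance requirements.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Dict

# Assumed check names; substitute whatever your CI actually reports.
REQUIRED_CHECKS = ("tests", "security_scan", "reproducible_build")


@dataclass(frozen=True)
class AuditRecord:
    prompt: str                # the request that produced the change
    patch_sha256: str          # fingerprint of the generated artifact
    model_version: str         # which model produced it
    checks: Dict[str, bool]    # outcome of each automated check
    reviewer: str              # human who approved the merge
    created_at: str            # UTC timestamp, ISO 8601


def may_merge(checks: Dict[str, bool], reviewer: str) -> bool:
    """Allow a merge only if every required check passed and a reviewer is named."""
    return bool(reviewer) and all(checks.get(name, False) for name in REQUIRED_CHECKS)


def make_record(prompt: str, patch: str, model_version: str,
                checks: Dict[str, bool], reviewer: str) -> AuditRecord:
    return AuditRecord(
        prompt=prompt,
        patch_sha256=hashlib.sha256(patch.encode("utf-8")).hexdigest(),
        model_version=model_version,
        checks=dict(checks),
        reviewer=reviewer,
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def to_json_line(record: AuditRecord) -> str:
    # One JSON object per line is easy to append to a log and search later.
    return json.dumps(asdict(record), sort_keys=True)


checks = {"tests": True, "security_scan": True, "reproducible_build": True}
if may_merge(checks, "reviewer@example.com"):
    record = make_record("fix crash on empty playlist", "print('patched')\n",
                         "model-v1", checks, "reviewer@example.com")
    print(to_json_line(record))
```

Storing a hash of the patch rather than the patch itself keeps the log small while still letting an auditor confirm which artifact was reviewed. That assumes the full artifact is kept elsewhere, for example in version control.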

Companies that treat AI as an augmenting technology, rather than a replacement for software engineering discipline, are more likely to benefit sustainably.

How will AI-assisted coding change engineering roles?

Rather than eliminating engineering positions wholesale, AI-assisted coding is reshaping job responsibilities and the skills that matter most:

- Higher-value engineering: Engineers may shift from routine implementation tasks to system design, architecture, and complex problem solving.

- Prompt engineering and validation: Crafting precise prompts and interpreting model outputs becomes a core capability.

- Model and dataset stewardship: Teams will need experts to manage model performance, bias mitigation, and retraining strategies.

- DevOps and MLOps intersection: Integrating AI into CI/CD goes hand in hand with the rise of infrastructure engineers who understand both software delivery and model lifecycle management.

Organizational implications

Leadership should expect to invest in reskilling, updated hiring criteria, and governance teams that can bridge engineering, ML, and compliance functions. This is consistent with broader shifts we’ve covered in agentic AI for enterprise development and security. See our coverage in “Agentic Software Development: The Future of AI Coding” and “AI Agent Management Platform: Enterprise Best Practices.”

Will AI-generated music and creative outputs change content moderation?

Content platforms that support user- or AI-generated creative work face unique trust and safety challenges. In music and audio, platforms must balance artist rights, attribution, and spam prevention while enabling new creative workflows. The right governance framework includes provenance tagging, artist opt-in mechanisms, and robust content moderation pipelines that can scale.

Questions platforms must answer

- How will we attribute AI-assisted creations so artists and rights-holders are compensated?

- What automated and human review processes are needed to filter spam and abusive content?

- How will we let creators disclose their process, so listeners know when a track was AI-assisted?

Addressing these questions proactively reduces downstream legal and reputation risk and helps preserve trust with both creators and listeners.

How should engineering teams prepare for wider adoption?

Teams that want to adopt AI-assisted coding should prioritize three initial moves:

- Establish a safety-first CI workflow: Integrate automated testing, static analysis, and security checks into any AI-to-code pipeline.

- Build dataset and model governance: Track training data sources, label drift, and model versions; schedule regular audits and set a retraining cadence.

- Start small, measure impact: Pilot AI-assisted flows in non-critical code paths, then measure cycle time improvements, bug rates, and developer satisfaction before scaling.
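To make the "measure impact" step concrete, here is a minimal sketch that compares a pilot against a baseline on two of the metrics named above: median cycle time and bug rate. The record fields and function names are hypothetical, and the sample numbers are invented purely to exercise the code. They are not results from Spotify or any other company.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class ChangeRecord:
    cycle_time_hours: float   # time from request to merge
    caused_bug: bool          # whether a later bug was traced to this change


@dataclass
class Summary:
    median_cycle_time_hours: float
    bug_rate: float           # fraction of changes that caused a bug


def summarize(changes: List[ChangeRecord]) -> Summary:
    if not changes:
        raise ValueError("need at least one change to summarize")
    return Summary(
        median_cycle_time_hours=median([c.cycle_time_hours for c in changes]),
        bug_rate=sum(c.caused_bug for c in changes) / len(changes),
    )


def compare(baseline: Summary, pilot: Summary) -> Dict[str, float]:
    """Negative cycle_time_change_pct means the pilot was faster."""
    return {
        "cycle_time_change_pct": 100.0
        * (pilot.median_cycle_time_hours - baseline.median_cycle_time_hours)
        / baseline.median_cycle_time_hours,
        "bug_rate_delta": pilot.bug_rate - baseline.bug_rate,
    }


# Invented sample data, for illustration only.
baseline_changes = [
    ChangeRecord(30.0, False), ChangeRecord(42.0, True),
    ChangeRecord(36.0, False), ChangeRecord(50.0, False),
]
pilot_changes = [
    ChangeRecord(20.0, False), ChangeRecord(18.0, False),
    ChangeRecord(27.0, True), ChangeRecord(25.0, False),
]

report = compare(summarize(baseline_changes), summarize(pilot_changes))
print(f"cycle time change: {report['cycle_time_change_pct']:.1f}%")
print(f"bug rate delta: {report['bug_rate_delta']:+.2f}")
```

Tracking the bug rate alongside cycle time matters because a pilot that ships faster but introduces more defects has not improved velocity in any useful sense. Developer satisfaction would need a separate survey and is left out of this sketch.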

For teams building AI-enabled developer tools, infrastructure matters. See our piece on AI App Infrastructure: Simplifying DevOps for Builders for practical advice on the plumbing required to run safe, repeatable AI-driven deployments.

What outcomes should leaders expect in the next 12–24 months?

Adoption will vary by industry and company size, but likely outcomes include:

- Broader use of AI for routine engineering tasks and faster iteration on user-facing features.

- Higher demand for cross-functional roles that combine MLOps, security, and developer experience.

- Increased investment in proprietary datasets as a source of differentiation, especially for content platforms.

- More mature governance frameworks and toolchains to ensure safe, auditable AI usage.

Final takeaways

Spotify’s experience is a useful case study of what happens when an organization treats AI as a first-class component of its engineering toolchain. The outcome is not automatic: it requires deliberate infrastructure, governance, and a commitment to preserving human oversight. When done right, AI-assisted coding lowers friction across the development lifecycle, unlocks new product velocity, and makes proprietary behavioral datasets more valuable.

Next steps for leaders

If you lead engineering or product teams, start by piloting AI-assisted workflows in low-risk areas, instrumenting the metrics you care about, and creating clear review gates for production merges. The technology is already changing how products are built, and the companies that pair it with rigorous engineering and governance will capture the benefits.

For more on how agentic AI is reshaping development practices and enterprise safety, check out our related coverage of Agentic Software Development and Agentic AI Security.

Ready to adapt your engineering org?

Join the conversation: assess your CI/CD readiness, pilot an AI-assisted coding stream, and build a governance roadmap that balances speed with safety. Sign up for Artificial Intel News’ newsletter to get practical playbooks and case studies directly to your inbox.
