Agentic Software Development: Why AI Agents Are Rewriting How We Code

Agentic software development, in which multiple autonomous AI agents collaborate to design, implement, test, and maintain software, is rapidly shifting the boundaries of what engineering teams can deliver. What began as single-model coding assistants is evolving into multi-agent systems that distribute tasks, run background automations, and plug directly into existing developer workflows. This article covers the technology, how benchmarks relate to real productivity, practical benefits, adoption steps, and the trade-offs teams must weigh when moving to agentic AI coding.

What is agentic software development and why does it matter?

At its core, agentic software development uses autonomous or semi-autonomous AI agents to perform discrete parts of the engineering lifecycle. Rather than one assistant responding to prompts, agentic systems spin up specialized subagents—each focused on tasks like design, code generation, refactoring, testing, and CI/CD orchestration. These agents can operate in parallel, hand off artifacts to one another, and run scheduled automations so developers see aggregated results in a review queue.

Key characteristics of agentic systems

- Parallelism: Multiple agents tackle tasks simultaneously, reducing time-to-prototype.

- Specialization: Subagents are tuned for roles—bug triage, unit tests, API wiring, docs—improving quality and consistency.

- Automations: Background jobs (linting, dependency updates, regression checks) run on schedules and present curated outputs.

- Personality and context: Agents can adopt working styles (pragmatic, exploratory, conservative) and draw on shared project context, so interactions match team preferences.
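Specialization and working style can be captured as plain configuration. The sketch below assumes styles are expressed as short system-prompt instructions; it pairs a role with a style and renders the prompt an agent would start from. `AgentProfile` and the instruction wording are illustrative, not taken from any particular product.

```python
from dataclasses import dataclass

# Hypothetical style instructions; the wording is illustrative only.
STYLE_INSTRUCTIONS = {
    "pragmatic": "Prefer the smallest change that fixes the problem.",
    "exploratory": "Propose alternatives and note trade-offs before choosing one.",
    "conservative": "Avoid touching public APIs; flag anything risky for review.",
}


@dataclass(frozen=True)
class AgentProfile:
    role: str   # e.g. "bug triage", "unit tests", "API wiring", "docs"
    style: str  # must be one of the keys in STYLE_INSTRUCTIONS

    def system_prompt(self) -> str:
        # Fail loudly on an unknown style instead of silently using a default.
        if self.style not in STYLE_INSTRUCTIONS:
            raise ValueError(f"unknown style: {self.style}")
        return (
            f"You are a subagent responsible for {self.role}. "
            f"{STYLE_INSTRUCTIONS[self.style]}"
        )


team = [
    AgentProfile("bug triage", "conservative"),
    AgentProfile("unit tests", "pragmatic"),
    AgentProfile("docs", "exploratory"),
]
for profile in team:
    print(profile.system_prompt())
```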

How are modern tools implementing agentic workflows?

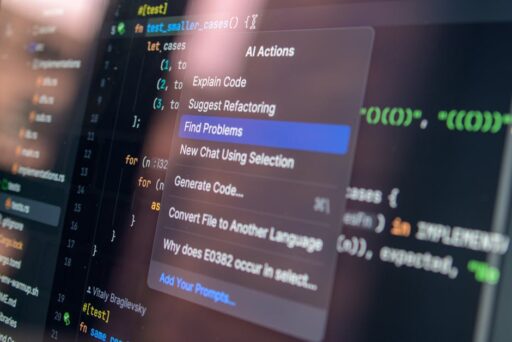

Leading platforms are integrating multi-agent orchestration directly into developer environments. Newer apps provide interfaces for creating agent teams, assigning tasks, and configuring background workflows that run without constant human direction. Common features include agent memory, shared project context, and queue-based review systems so developers retain control while benefiting from automated throughput.
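Agent memory, shared project context, and queue-based review can be sketched in a few classes. This is a hypothetical, in-memory model: `SharedContext` stands in for agent memory, and `ReviewQueue` keeps every proposal pending until a reviewer who has not submitted work as an agent approves or rejects it. Production tools would persist this state and tie reviewers to real identities.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class SharedContext:
    """Project notes every agent can read and append to (stand-in for memory)."""
    notes: dict[str, list[str]] = field(default_factory=dict)

    def remember(self, agent: str, note: str) -> None:
        self.notes.setdefault(agent, []).append(note)

    def recall(self) -> list[str]:
        # Flatten all agents' notes, in insertion order, for the next prompt.
        return [note for notes in self.notes.values() for note in notes]


@dataclass
class ReviewItem:
    agent: str
    description: str
    status: Status = Status.PENDING


@dataclass
class ReviewQueue:
    """Agents submit proposals; names seen via submit() may not decide."""
    items: list[ReviewItem] = field(default_factory=list)
    agents: set[str] = field(default_factory=set)

    def submit(self, agent: str, description: str) -> ReviewItem:
        self.agents.add(agent)
        item = ReviewItem(agent, description)
        self.items.append(item)
        return item

    def pending(self) -> list[ReviewItem]:
        return [i for i in self.items if i.status is Status.PENDING]

    def decide(self, item: ReviewItem, reviewer: str, approve: bool) -> None:
        if reviewer in self.agents:
            raise PermissionError(f"{reviewer} is an agent and cannot approve changes")
        item.status = Status.APPROVED if approve else Status.REJECTED


ctx = SharedContext()
ctx.remember("tests", "CI runs the suite on Python 3.12")
ctx.remember("docs", "Public API lives under api/")

queue = ReviewQueue()
item = queue.submit("tests", "Add regression test for date parsing")
queue.decide(item, reviewer="alice", approve=True)
```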

Examples of agentic capabilities

- Automated bug triage that opens issues, suggests fixes, and creates patch branches for review.

- Parallel test-generation and execution to validate changes across multiple environments.

- Continuous integration automations that proactively create pull requests for dependency upgrades and performance regressions.
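Dependency-upgrade automation largely comes down to comparing pinned versions with the newest available ones and opening one PR per difference. The sketch assumes the versions are already loaded into dictionaries (a real agent would read a lock file and query a package registry) and that versions are plain dotted integers; the package names are made up. For real version strings with pre-release tags, the third-party `packaging` library's `Version` class is a safer parser.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Parse a plain dotted version such as '1.10.0' (no pre-release tags)."""
    return tuple(int(part) for part in version.split("."))


def propose_upgrades(pinned: dict[str, str], latest: dict[str, str]) -> list[str]:
    """Return one PR title per dependency that has a newer release."""
    proposals = []
    for name, current in sorted(pinned.items()):
        newest = latest.get(name)
        if newest is None:
            continue
        cur, new = parse_version(current), parse_version(newest)
        if new > cur:  # tuple comparison, so 1.10.0 correctly beats 1.9.0
            kind = "major" if new[0] > cur[0] else "minor/patch"
            proposals.append(f"Bump {name} {current} -> {newest} ({kind})")
    return proposals


# Hypothetical package names and versions.
pinned = {"libalpha": "1.4.0", "libbeta": "2.9.1", "libgamma": "0.3.0"}
latest = {"libalpha": "1.5.2", "libbeta": "3.0.0", "libgamma": "0.3.0"}
for title in propose_upgrades(pinned, latest):
    print(title)
```

Labeling major bumps separately lets a review policy treat them differently, since they are the upgrades most likely to break callers.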

Can agentic AI outperform traditional coding assistants?

Performance depends on model capability, integration quality, and user experience, not raw benchmark scores alone. State-of-the-art models score well on coding benchmarks, but agentic workflows are judged on end-to-end productivity: how fast teams iterate, how reliable the automated checks are, and how much human time the agents save. For many teams, agentic systems can deliver larger practical gains than a single assistant because they cut repetitive work and shorten feedback loops.

Why benchmarks don’t tell the whole story

Benchmarks measure specific skills—problem solving, patch generation, or command-line tasks—but agentic usage spans coordination, tool use, and long-context reasoning. Two systems with near-equal benchmark performance can differ substantially in how they manage context, orchestrate subagents, or present results to developers. That discrepancy can make a big difference in day-to-day engineering velocity.

Benefits: Why engineering teams are adopting agentic approaches

Adopting agentic software development can unlock several measurable and qualitative improvements:

- Faster prototyping: Parallel agent work can produce usable proofs of concept in hours rather than days.

- Reduced cognitive load: Agents handle repetitive tasks—formatting, dependency updates, basic test coverage—letting engineers focus on design and edge cases.

- Improved consistency: Specialized subagents apply standardized rules across repositories, leading to fewer integration surprises.

- Asynchronous collaboration: Background automations surface curated changes in review queues, making distributed teams more efficient.

Challenges and risks to manage

Despite the gains, agentic development introduces new risks and operational challenges teams must address:

Key risks

- Hallucinated code or incorrect fixes that pass basic tests but fail in production—requiring robust review policies.

- Security and IP exposure when using external models or shared memory—necessitating strict data governance.

- Orchestration complexity: Multiple agents can create tangled state flows unless interfaces and handoffs are well-defined.

How to adopt agentic software development: a practical roadmap

Transitioning to agentic workflows should be iterative and governed by measurable guardrails. Follow this practical roadmap to pilot and scale safely:

- Identify low-risk automation candidates: dependency updates, test generation, or documentation drafts.

- Run agents in observation mode: let them propose changes to a review queue without auto-merging.

- Define policy checks: static analysis, security scans, and mandatory human approval for sensitive changes.

- Measure velocity and quality: track time-to-merge, defect rates, and developer satisfaction.

- Gradually expand agent responsibilities: introduce bug triage, refactors, and scheduled maintenance automations.
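The observation-mode and policy-check steps can be expressed as a single gate that every agent change passes through. This is a sketch under stated assumptions: the static-analysis and security-scan results arrive as booleans from existing CI jobs, and `SENSITIVE_PREFIXES` is a hypothetical list of paths a team would define for itself.

```python
from dataclasses import dataclass

# Hypothetical path prefixes that always require a human approver.
SENSITIVE_PREFIXES = ("auth/", "billing/", ".github/workflows/")


@dataclass
class Change:
    files: list[str]
    static_analysis_passed: bool
    security_scan_passed: bool


def gate(change: Change, observation_mode: bool) -> str:
    """Return 'reject', 'human_review', or 'auto_merge' for an agent change."""
    if not (change.static_analysis_passed and change.security_scan_passed):
        return "reject"
    if observation_mode:
        # Pilot phase: agents may only propose; nothing merges automatically.
        return "human_review"
    if any(path.startswith(SENSITIVE_PREFIXES) for path in change.files):
        return "human_review"
    return "auto_merge"


docs_change = Change(["docs/setup.md"], True, True)
print(gate(docs_change, observation_mode=True))
print(gate(docs_change, observation_mode=False))
```

Setting `observation_mode` to `False` corresponds to the "gradually expand" step: low-risk changes can then merge automatically while sensitive paths still route to a human.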

Team roles and best practices

Successful adoption relies on cultural and procedural changes:

- Designate agent stewards to monitor agent outputs and maintain prompt templates and personalities.

- Standardize prompts and context bundles to reduce variance between runs.

- Create clear escalation paths when agents encounter ambiguous or risky choices.
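Standardizing prompts and context bundles largely means rendering them deterministically, so two runs with the same inputs see identical text. The sketch below assumes a team-wide template (hypothetical wording) built with Python's `string.Template`. It sorts its inputs, embeds the escalation rule in the prompt itself, and returns a short SHA-256 fingerprint that agent stewards could log to compare runs.

```python
import hashlib
from string import Template

# Hypothetical team-wide template; every agent run fills it the same way.
PROMPT = Template(
    "Role: $role\n"
    "Repository conventions:\n$conventions\n"
    "Task: $task\n"
    "If the task is ambiguous or touches $sensitive, stop and escalate to $steward."
)


def build_context_bundle(
    role: str, task: str, conventions: list[str], sensitive: list[str], steward: str
) -> tuple[str, str]:
    """Render a prompt deterministically and return it with a short fingerprint."""
    text = PROMPT.substitute(
        role=role,
        # Sorting makes the output independent of the order inputs arrive in.
        conventions="\n".join(f"- {c}" for c in sorted(conventions)),
        task=task,
        sensitive=", ".join(sorted(sensitive)),
        steward=steward,
    )
    fingerprint = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
    return text, fingerprint


text_a, fp_a = build_context_bundle(
    "unit tests", "Cover the date parser",
    ["Use pytest", "No network in tests"], ["auth/", "billing/"], "the agent steward",
)
text_b, fp_b = build_context_bundle(
    "unit tests", "Cover the date parser",
    ["No network in tests", "Use pytest"], ["billing/", "auth/"], "the agent steward",
)
print(fp_a == fp_b)
```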

Real-world examples and related thinking

Agentic concepts are gaining traction across the industry—from multi-agent orchestration to developer-facing integrations that bring agent teams into everyday workflows. For deeper technical context on agent teams and extended context windows, see the analysis of specialized agent teams and long-context models in our coverage of Anthropic’s recent research: Anthropic Opus 4.6: Agent Teams and 1M-Token Context. For enterprise automation patterns and plugin ecosystems that enable agentic workflows, read our piece on enterprise integrations: Anthropic Cowork Plug-ins: Enterprise Automation with Claude. And for a look at multi-agent tools that reframe collaboration, review our coverage of emerging agent orchestration platforms: Airtable Superagent: The Future of Multi-Agent AI Tools.

What development teams should measure

To assess agentic adoption, track both output and human-centric metrics:

- Time-to-first-prototype and time-to-merge

- Number of automated PRs and acceptance rates

- Regression incidents attributed to agent-generated code

- Developer time saved on routine tasks

- Security and compliance violations discovered before release
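Several of these metrics fall out of basic PR records. The sketch assumes a team can export, for each agent-opened PR, when it was opened, when (or whether) it merged, and whether a later regression was traced back to it; the `AgentPR` type and the sample timestamps are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class AgentPR:
    opened: datetime
    merged: Optional[datetime]  # None if closed without merging
    caused_regression: bool = False


def summarize(prs: list[AgentPR]) -> dict:
    merged = [p for p in prs if p.merged is not None]
    hours = [(p.merged - p.opened).total_seconds() / 3600 for p in merged]
    return {
        "automated_prs": len(prs),
        "acceptance_rate": len(merged) / len(prs) if prs else 0.0,
        "median_hours_to_merge": median(hours) if hours else None,
        # Count regressions only among PRs that actually reached the codebase.
        "regressions": sum(p.caused_regression for p in merged),
    }


# Invented sample data.
prs = [
    AgentPR(datetime(2024, 5, 1, 9), datetime(2024, 5, 1, 13)),
    AgentPR(datetime(2024, 5, 2, 9), datetime(2024, 5, 3, 9), caused_regression=True),
    AgentPR(datetime(2024, 5, 3, 9), None),
    AgentPR(datetime(2024, 5, 4, 9), datetime(2024, 5, 4, 11)),
]
print(summarize(prs))
```

With this sample data, three of four PRs merged (75% acceptance), the median time-to-merge is 4 hours, and one merged PR caused a regression.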

Future outlook: Where agentic development is heading

Expect continued refinement across three dimensions: model capability, orchestration UX, and enterprise governance. Models will become better at tool use and long-context reasoning, orchestration layers will simplify agent composition and error handling, and governance tools will provide transparent auditing for automated actions. Together these improvements will make agentic development safer and more broadly applicable beyond prototyping—into maintenance, observability, and even product management workflows.

Final recommendations for engineering leaders

Begin with targeted pilots, prioritize observability, and build policy-first guardrails. Treat agents as teammates that require onboarding, review cycles, and continuous tuning. With the right controls, agentic software development can dramatically increase engineering throughput while freeing humans to focus on strategy and complex problem solving.

Quick checklist to get started

- Choose a sandbox repo for pilot automations

- Define review and approval gates

- Instrument metrics for velocity and quality

- Assign agent stewards and update documentation

Want help adopting agentic workflows?

If your team is evaluating agentic software development, start with a focused pilot and measurable KPIs. We cover best practices, governance patterns, and tooling recommendations across our site—explore related analyses and case studies to build a safe, high-velocity plan for your organization.

Subscribe to Artificial Intel News for weekly briefings on agentic AI, hands-on adoption guides, and enterprise-ready governance templates to accelerate your team's transition to agentic software development.