The Future of AI Security: Navigating Emerging Threats

AI security firm Irregular has raised $80 million in funding from investors including Sequoia Capital and Redpoint Ventures. The investment reflects how much safeguarding AI systems matters as those systems take on a larger share of economic activity.

Co-founder Dan Lahav pointed to two kinds of interaction, human-to-AI and AI-to-AI, as likely to break existing security frameworks at multiple points. To address this, Irregular (formerly known as Pattern Labs) has developed a comprehensive approach to detecting AI vulnerabilities, and that work is becoming an industry benchmark.

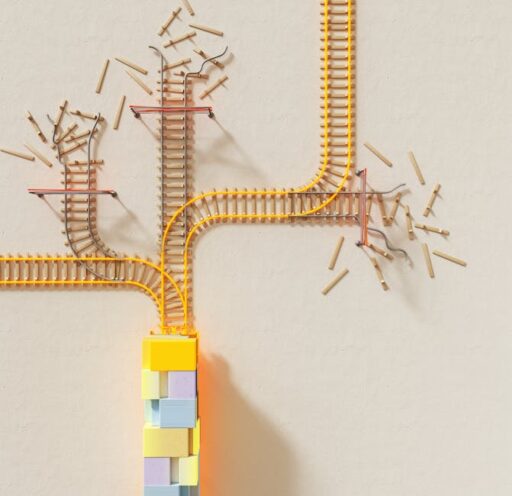

To get ahead of emerging threats, Irregular has built elaborate simulated environments for testing AI models rigorously before deployment. In these simulations, AI plays both attacker and defender, which exposes weaknesses in a model's defenses. Co-founder Omer Nevo stresses that such simulations are central to assessing how resilient new models are.

As the AI sector advances, security remains a critical concern, not least because these models are increasingly capable of uncovering software vulnerabilities. Large language models pose new challenges, since their capabilities could be exploited for corporate espionage or other malicious purposes. Irregular's founders acknowledge the scale of the task ahead: securing models whose capabilities keep growing is an ongoing effort rather than a problem solved once.

The funding round recognizes what Irregular has achieved so far and positions the company for further work in AI security, with the aim of helping the technology integrate safely into society.