Unlocking the Black Box: The Importance of Monitoring AI Reasoning Processes

The rapid advancement of artificial intelligence has brought about revolutionary changes across various sectors. Yet, as these technologies grow more complex and powerful, understanding their internal decision-making processes becomes increasingly critical. This is where Chain-of-Thought (CoT) monitoring comes into play, offering a promising approach to enhance transparency in AI reasoning models.

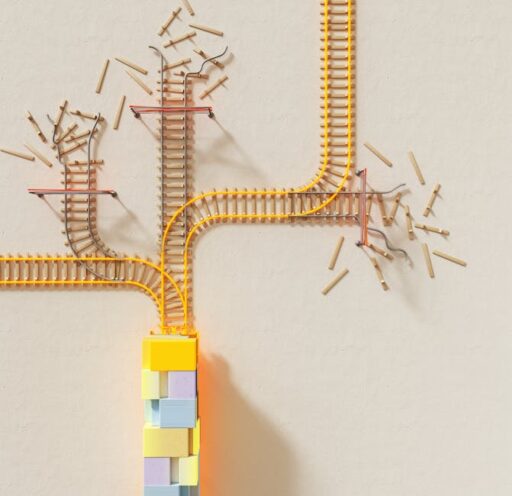

CoT monitoring refers to the scrutiny of the intermediate steps AI models take when reasoning through problems. Similar to how humans use notes to solve complex math problems, AI systems can externalize their thought processes, providing insights into their decision-making pathways. This method is essential not only for improving AI safety but also for fostering trust in AI systems.

Leading AI researchers from prominent organizations are advocating for more in-depth exploration and implementation of CoT monitoring. They argue that it could serve as a foundational safety measure, especially as AI agents become more widespread and their applications more critical.

The significance of CoT monitoring lies in its potential to offer rare visibility into AI systems’ internal workings. However, this transparency is not guaranteed to persist without dedicated efforts from the research community. There is an urgent call for AI model developers to investigate factors influencing CoT’s monitorability, ensuring that it remains a reliable tool for understanding AI behaviors.

Remarkable advancements have been made in AI performance, yet the comprehension of how these models arrive at their conclusions is still in its infancy. Companies and researchers are now urged to prioritize interpretability alongside performance, investing in methodologies like CoT monitoring to bridge this knowledge gap.

In essence, CoT monitoring could revolutionize our approach to AI safety and understanding. By fostering a deeper comprehension of AI reasoning processes, we not only enhance the reliability of these systems but also pave the way for more responsible and informed AI development.