Data-Efficient AI: Flapping Airplanes’ New Research Path

A new wave of research labs is tackling one of the most consequential bottlenecks in modern AI: dependence on massive datasets. Flapping Airplanes, founded by a small team of researchers, has positioned itself as a lab dedicated to data-efficient AI: models that learn powerful capabilities from far less data than today’s large-scale systems. Its approach blends engineering pragmatism with inspiration from neuroscience, aiming for breakthroughs that could change the economics and reach of AI.

What is data-efficient AI and why does it matter?

Data-efficient AI refers to methods and architectures that achieve strong generalization and new capabilities using substantially smaller training datasets. Instead of relying on the ‘all-the-internet’ scale of data and massive compute, these systems emphasize sample efficiency, fast adaptation, and intelligent use of structured priors. Why this matters:

- Cost reduction: Smaller datasets and cheaper fine-tuning lower operational and financial barriers to deployment.

- New verticals: Domains like robotics, scientific discovery, and specialized enterprise workflows are often data-scarce—sample-efficient models can serve them.

- Faster iteration: Research cycles shorten when experiments don’t require massive compute and data collection.

- Privacy and sovereignty: Less reliance on large scraped corpora can help address privacy and regulatory concerns.

These potential benefits make data-efficient AI both a scientific and a commercial priority. Flapping Airplanes has attracted substantial seed funding to pursue exactly these goals, aiming for orders-of-magnitude improvements rather than incremental gains.

How does Flapping Airplanes approach the problem?

The lab centers its work on three guiding bets:

- Data efficiency is a tractable, high-impact scientific problem worth focused attention.

- Solving sample efficiency will deliver strong commercial value across robotics, enterprise, and scientific domains.

- A creative, relatively unconstrained team can reframe orthodox assumptions and explore novel algorithms rapidly.

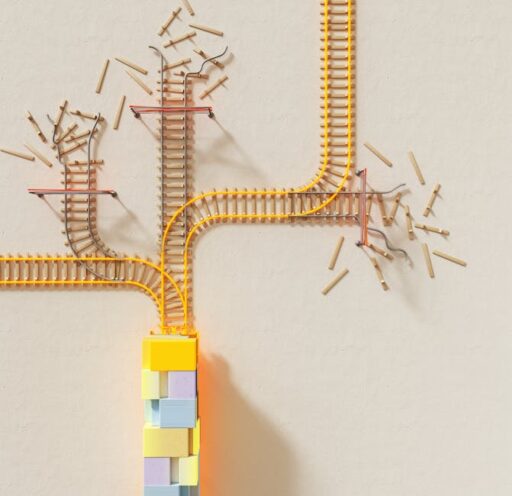

That framework leads to a research style that favors fresh viewpoints over incremental tweaks. The team deliberately blends inspiration from biological learning systems with the practical engineering constraints of silicon and modern infrastructure. The metaphor behind the lab’s name, building a ‘flapping airplane’ rather than a bird, captures that balance: take inspiration from biology without expecting identical solutions.

What lessons can neuroscience offer AI developers?

Researchers at the lab often point to the human brain as a useful existence proof: complex, adaptive intelligence emerges from a constrained, resource-limited substrate. But brains and silicon have very different cost structures: computation, latency, and data movement carry different costs on chips than in neurons. That implies two important lessons:

- Seek architectures and learning rules that exploit hardware realities rather than mimic biology exactly.

- Adopt hybrid inspiration: borrow mechanisms that deliver robustness or efficiency, but re-implement them in ways suited to modern compute.

With this mindset, small-scale experiments can test radically different ideas quickly. Many speculative changes either fail fast or reveal unanticipated strengths before significant scale-up is required.

How could data-efficient AI change real-world applications?

Practical impact falls into two buckets: expanding where AI can be applied, and making deployment easier and faster in domains that already use AI. Examples:

- Robotics: Robots typically operate in highly variable, physical environments with limited labeled data. Sample-efficient learning could let robots acquire skills with far fewer demonstrations or even rely more heavily on simulation-to-real transfer.

- Scientific discovery: Many research domains produce expensive, slow, or unique datasets. Models that learn more from fewer experiments could accelerate R&D cycles across chemistry, biology, and materials science.

- Enterprise customization: Companies often need models tailored to internal documents or workflows. A model that adapts with minimal examples makes enterprise integration cheaper and quicker.

These use cases intersect with infrastructure and hardware trends: innovations in chip design and compute efficiency directly influence how practical data-efficient methods become. For context on hardware-driven AI evolution, see our coverage of AI chip design automation and hardware acceleration: AI Chip Design Automation: Ricursive Accelerates Hardware. The cost of data center infrastructure, and how capital is allocated to it, also affects the economics of any data strategy; our piece on AI data center spending explores that trade-off: AI Data Center Spending: Are Mega-Capex Bets Winning?

Why sample efficiency matters for enterprise agents

Enterprise agents and automation systems often need to operate in narrow, regulated contexts with sensitive data. The ability to adapt quickly, securely, and with small labeled sets complements work on agent management and security. For related considerations around safe deployment and governance, see our coverage of agent security best practices: AI Agent Security: Risks, Protections & Best Practices.

What research strategies accelerate progress in data efficiency?

Flapping Airplanes emphasizes an experimental program that mixes theoretical insight with pragmatic evaluation. Key elements include:

- Small-scale hypothesis testing: Rapidly rule out approaches by validating basic assumptions on lightweight hardware before costly scale-ups.

- Cross-disciplinary teams: Combine neuroscience insight, algorithmic theory, and systems engineering to evaluate trade-offs holistically.

- Focus on adaptation: Prioritize post-training sample efficiency—few-shot adaptation, domain transfer, and on-device learning.

- Tooling for reproducibility: Build infrastructure that lets experiments be shared, reproduced, and iterated quickly.

Because many candidate interventions give a clear signal at small scale, either working or failing outright, the lab’s model allows dead ends to be pruned cheaply. Promising signals can then be scaled up deliberately.
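Few-shot adaptation, one of the priorities listed above, is easiest to picture with a concrete baseline. The sketch below shows one standard technique, a nearest-centroid ("prototype") classifier that adapts to new classes from a handful of labeled examples per class. It is not Flapping Airplanes’ method. The function names, the class labels, and the toy 2-D feature vectors are hypothetical; a real system would typically produce features with a pretrained encoder.

```python
import math
from collections import defaultdict

def build_prototypes(support):
    """Average the feature vectors of each class's few labeled examples."""
    sums = {}
    counts = defaultdict(int)
    for features, label in support:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, x in enumerate(features):
            sums[label][i] += x
        counts[label] += 1
    return {label: [s / counts[label] for s in vec] for label, vec in sums.items()}

def classify(prototypes, features):
    """Assign the label whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(prototypes[label], features))

# Hypothetical 2-shot support set: two labeled examples per class.
support = [
    ([0.9, 0.1], "gripper_open"),
    ([1.1, 0.0], "gripper_open"),
    ([0.0, 1.0], "gripper_closed"),
    ([0.2, 0.8], "gripper_closed"),
]
prototypes = build_prototypes(support)
print(classify(prototypes, [0.8, 0.3]))  # gripper_open
```

Adding a new class requires only a few more labeled rows and no gradient updates. That property is why prototype-style baselines are a common yardstick for sample efficiency.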

How realistic are 1000x gains in data efficiency?

Bold claims require careful scrutiny. The lab frames ambitious targets—orders-of-magnitude improvements—as exploratory goals rather than guaranteed outcomes. Their reasoning rests on three hypotheses:

- There exists a continuum between statistical pattern matching and deep conceptual reasoning; shifting an algorithm toward the reasoning end of that axis may let it generalize from less data.

- Post-training adaptation can be vastly improved so models acquire new skills from minimal examples.

- Many practical limitations attributed to hardware are actually data problems; better learning algorithms could unlock the same hardware potential with far less data.

These hypotheses are ambitious but measurable. Even partial progress, such as 10x or 100x improvements, would dramatically broaden AI’s applicability.

What organizational choices support deep research?

Commercial pressures can derail foundational research. Flapping Airplanes’ founders argue that avoiding early large enterprise contracts and preserving a tight research focus are critical for pursuing radical ideas. That doesn’t mean commercialization is off the table—rather, the lab intends to prioritize research until core insights are proven, then translate them into products or partnerships that deliver real-world value.

Hiring for creativity

The lab prioritizes creativity over pedigree. Researchers who consistently teach the team something new and can reframe problems are highly valued. This emphasis on fresh perspectives allows the group to challenge entrenched assumptions and experiment with unconventional solutions.

What are the broader implications for the AI ecosystem?

If data-efficient methods deliver on their promise, the ripple effects would be broad:

- Lower barriers to entry for startups and organizations without massive data resources.

- Faster, more affordable adaptation of AI to regulated and specialized domains.

- New scientific and engineering capabilities enabled by models that reason better from less data.

These outcomes would make AI both more inclusive and more focused on high-impact, domain-specific problems—shifting some attention away from pure scale toward smarter, more adaptable systems.

How can researchers and practitioners engage?

There are practical ways the community can participate in, and benefit from, a surge in data-efficient research:

- Share curated, high-quality datasets that support few-shot learning benchmarks.

- Collaborate on reproducible small-scale experiments to test novel learning rules.

- Prioritize evaluation metrics that capture adaptation, robustness, and resource efficiency rather than only raw performance on massive benchmarks.
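To make "resource efficiency" measurable, one option is to score a learning curve instead of a single final accuracy. The sketch below computes two generic metrics: the number of training examples a model needs to reach a target accuracy, and the normalized area under its accuracy-vs-log(data) curve. These are common choices, not metrics the article attributes to Flapping Airplanes. The function names are hypothetical, and the two curves are made-up numbers for illustration, not measured results.

```python
import math

def samples_to_target(curve, target):
    """Return the smallest training-set size whose accuracy reaches `target`, or None."""
    for n, acc in sorted(curve):
        if acc >= target:
            return n
    return None

def normalized_auc(curve):
    """Trapezoidal area under accuracy vs. log10(sample size), divided by the x-range.

    The result lies in [0, 1] when accuracies do; higher means the model does
    better across the whole range of data budgets, not just at the largest one.
    """
    points = sorted(curve)
    xs = [math.log10(n) for n, _ in points]
    ys = [acc for _, acc in points]
    area = sum(
        (xs[i + 1] - xs[i]) * (ys[i] + ys[i + 1]) / 2 for i in range(len(xs) - 1)
    )
    return area / (xs[-1] - xs[0])

# Illustrative (made-up) learning curves: (training examples, held-out accuracy).
curve_a = [(10, 0.40), (100, 0.70), (1000, 0.85), (10000, 0.90)]
curve_b = [(10, 0.20), (100, 0.45), (1000, 0.70), (10000, 0.88)]

n_a = samples_to_target(curve_a, 0.85)  # 1000
n_b = samples_to_target(curve_b, 0.85)  # 10000
print(n_b / n_a)  # 10.0: model A needs 10x fewer examples to reach 85%
print(round(normalized_auc(curve_a), 3), round(normalized_auc(curve_b), 3))
```

The ratio of samples-to-target is the kind of number behind claims such as "10x more data-efficient." The area-under-curve score guards against a model that wins only at one cherry-picked data budget.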

Conclusion and next steps

Data-efficient AI is an attractive frontier because it combines deep scientific questions with tangible commercial payoffs. Labs that pair ambitious hypotheses with careful, small-scale validation can explore more radical alternatives to current architectures while remaining grounded in practical hardware and deployment constraints. Flapping Airplanes exemplifies this pattern: inspired by biological intelligence, but focused on solutions compatible with modern compute.

If you follow AI strategy, hardware trends, or applied AI in robotics and science, the trajectory of data-efficient research is worth watching. Progress here could reshape which problems AI can solve and who gets to apply it.

Call to action

Want timely coverage of data-efficient AI and other research-driven AI trends? Subscribe to Artificial Intel News for analysis, interviews, and practical breakdowns of the tech shaping tomorrow’s AI. If you’re a researcher or founder working on sample-efficient methods, reach out through our contact page to share findings or collaboration ideas—together we can accelerate meaningful, deployable breakthroughs.