Codex Spark: Fast, Low-Latency Agentic Coding Tool

OpenAI’s latest release, Codex Spark, represents a deliberate pivot toward low-latency, developer-focused AI. Positioned as a lightweight companion to larger agentic coding models, Codex Spark emphasizes rapid inference, tight interaction loops, and smoother real-time collaboration. The model is notable not only for its software design but also for its close integration with specialized inference hardware, which is what makes its noticeably more responsive user experience possible.

What is Codex Spark and how does it speed up coding?

Short answer:

- Codex Spark is a lightweight, low-latency variant of OpenAI’s agentic coding models optimized for fast inference and iterative workflows.

- It is designed for real-time collaboration, rapid prototyping, and short, interactive tasks rather than long-running batch reasoning.

- Performance gains come from model architecture choices and the use of dedicated hardware that reduces response latency.

Why low-latency models matter for developers

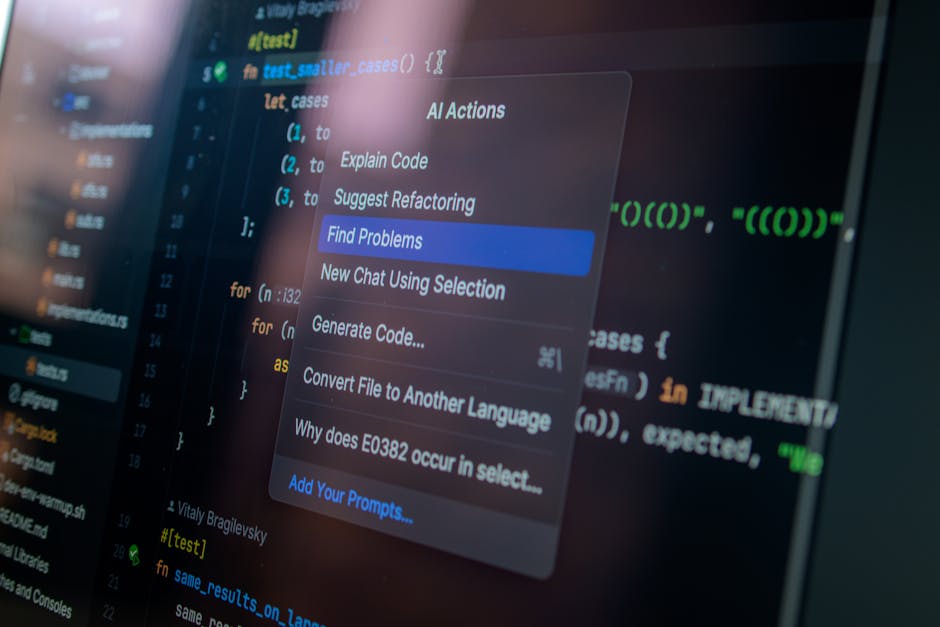

Developer productivity is shaped by interaction speed. When AI assistants return responses instantly, the feedback loop tightens: developers can test snippets, refine prompts, and iterate on design decisions without context switching. Codex Spark targets that use case — acting as a daily productivity driver for coding tasks like function drafting, refactoring suggestions, inline debugging hints, and rapid prototyping.

Longer-form, heavy reasoning tasks still belong to more capable, higher-latency models. Codex Spark’s value proposition is complementary: it accelerates the frequent, short bursts of work where speed and responsiveness directly improve flow and outcome.

How hardware-software integration unlocks faster inference

Fast inference at scale requires more than a trimmed model; it demands hardware-software co-design. Codex Spark is paired with a wafer-scale inference engine that prioritizes throughput and latency for small-to-medium-sized model runs. By optimizing memory access patterns, kernel performance, and on-chip communication, these systems return responses in the millisecond-to-sub-second range, fast enough to feel native in a developer IDE.

Two takeaways for product and engineering teams:

- Investing in low-latency inference often yields outsized UX improvements for interactive features.

- Choosing the right deployment profile — lightweight models on fast inference hardware versus heavyweight models for deep reasoning — creates a more consistent user experience.

Codex Spark in practical workflows

Codex Spark is targeted at tasks where immediate feedback matters. Typical workflows include:

- Interactive pair programming and inline code suggestions.

- Rapid prototyping of API integrations and function stubs.

- Quick unit test generation and lightweight refactors.

- Real-time collaboration sessions where multiple developers iterate on a design together.

For examples of how agentic coding is changing development workflows, see our coverage in “AI-Assisted Coding Hits a Tipping Point Today” and “Agentic Software Development: The Future of AI Coding.”

What distinguishes Codex Spark from larger agentic models?

There are three key dimensions of difference:

- Latency vs. Depth: Codex Spark trades depth of chained reasoning for dramatically lower latency, making it ideal for short interactive cycles.

- Compute profile: Spark is tuned to run efficiently on inference-optimized chips, enabling real-time responses with a smaller compute footprint per request.

- Use-case fit: Spark targets day-to-day coding productivity rather than long-running code generation or heavy verification workflows.

Security, reliability, and governance considerations

Faster inference does not eliminate the need for guardrails. Organizations adopting Codex Spark should assess:

- Security boundaries for code generation (secrets, proprietary APIs, or internal logic).

- Audit and logging for generated code, particularly in regulated domains.

- Fallback strategies for when deeper reasoning or verification is required — for example, offloading to a stronger model for audit-ready tasks.

A robust deployment combines the immediacy of low-latency models with the auditability and depth of larger systems when necessary.
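Guardrails around secrets can start small. The sketch below is a minimal illustration, assuming generated code is checked as plain text before a suggestion is accepted. The rule names, regular expressions, and the functions `scanGeneratedCode` and `reviewDecision` are hypothetical and not part of any Codex Spark API; a dedicated secret scanner wired into CI should do the real work.

```typescript
// Minimal pre-acceptance check for AI-generated code (illustrative sketch only).
// The patterns below are assumptions chosen for clarity, not a complete rule set.

interface Finding {
  rule: string;
  line: number; // 1-based line number within the generated snippet
}

// Hypothetical rules: each regex flags text that often indicates a leaked secret.
const SECRET_RULES: { rule: string; pattern: RegExp }[] = [
  { rule: "aws-access-key-id", pattern: /\bAKIA[0-9A-Z]{16}\b/ },
  { rule: "private-key-block", pattern: /-----BEGIN [A-Z ]*PRIVATE KEY-----/ },
  {
    rule: "hardcoded-credential",
    // e.g. apiKey = "..." or password: '...' with a literal of 8+ characters
    pattern: /\b(api[_-]?key|secret|password|token)\b\s*[:=]\s*["'][^"']{8,}["']/i,
  },
];

// Scan every line of the snippet against every rule and collect the hits.
function scanGeneratedCode(code: string): Finding[] {
  const findings: Finding[] = [];
  code.split("\n").forEach((text, index) => {
    for (const { rule, pattern } of SECRET_RULES) {
      if (pattern.test(text)) {
        findings.push({ rule, line: index + 1 });
      }
    }
  });
  return findings;
}

// Gate: clean suggestions pass; anything flagged goes to human review.
function reviewDecision(code: string): "accept" | "needs-review" {
  return scanGeneratedCode(code).length === 0 ? "accept" : "needs-review";
}
```

In a CI pipeline, a `needs-review` result would typically block the merge until someone inspects the flagged lines, which keeps the fast path fast for clean suggestions.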

How enterprises can adopt low-latency coding assistants

Enterprises should approach adoption with a phased plan that balances experimentation and control. A practical rollout might look like:

- Pilot within a small engineering team to validate the UX and measure productivity impact.

- Define security policies for generated code and ensure scanning/CI integration.

- Measure developer experience metrics (time-to-first-commit, frequency of interactive use) and iterate.

- Scale to broader teams with role-based access and change management.
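Measuring the pilot does not require heavy tooling. The sketch below assumes a hypothetical event log with `session_start`, `assistant_request`, and `commit` events per developer; the event shape and the `computePilotMetrics` function are illustrative, so adapt them to whatever your IDE telemetry or version-control system actually records. It computes the median time-to-first-commit and the average number of assistant requests per session.

```typescript
// Sketch: two pilot metrics from a hypothetical developer event log.

type EventKind = "session_start" | "assistant_request" | "commit";

interface DevEvent {
  developer: string;
  kind: EventKind;
  timestampMs: number; // Unix epoch milliseconds
}

interface PilotMetrics {
  medianTimeToFirstCommitMin: number | null; // null when nobody committed
  assistantRequestsPerSession: number;
}

function median(values: number[]): number | null {
  if (values.length === 0) return null;
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

function computePilotMetrics(events: DevEvent[]): PilotMetrics {
  const firstSession = new Map<string, number>(); // earliest session per developer
  const firstCommit = new Map<string, number>(); // earliest commit per developer
  let sessions = 0;
  let requests = 0;

  for (const e of events) {
    if (e.kind === "session_start") {
      sessions++;
      const prev = firstSession.get(e.developer);
      if (prev === undefined || e.timestampMs < prev) firstSession.set(e.developer, e.timestampMs);
    } else if (e.kind === "assistant_request") {
      requests++;
    } else {
      const prev = firstCommit.get(e.developer);
      if (prev === undefined || e.timestampMs < prev) firstCommit.set(e.developer, e.timestampMs);
    }
  }

  // Time-to-first-commit: minutes from a developer's first session to their first commit.
  const ttfc: number[] = [];
  firstSession.forEach((start, dev) => {
    const commit = firstCommit.get(dev);
    if (commit !== undefined && commit >= start) ttfc.push((commit - start) / 60000);
  });

  return {
    medianTimeToFirstCommitMin: median(ttfc),
    assistantRequestsPerSession: sessions === 0 ? 0 : requests / sessions,
  };
}
```

Comparing these numbers between a pilot group and a control group over the same period gives a rough, if imperfect, signal of productivity impact.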

For infrastructure teams, integrating low-latency inference often ties into broader platform work such as AI app delivery and DevOps tooling. Our analysis in “AI App Infrastructure: Simplifying DevOps for Builders” offers practical guidance on building that stack.

Common use cases and example prompts

Codex Spark excels when prompts are concise and the task is bounded. Example use cases and sample prompts:

- Generate a stub: “Write a TypeScript function to validate email addresses using regex and return a boolean.”

- Refactor a block: “Simplify this loop into a functional map/filter chain.”

- Write a unit test: “Create Jest tests for the calculateDiscount function covering edge cases.”

- Explain code: “Explain what this SQL query does in two sentences.”
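To make the first two prompts concrete, here is the kind of bounded output they aim for. This is a hand-written illustration, not captured Codex Spark output; the `Order` type and the function names are hypothetical. The email regex is a deliberately simple shape check (something@something.tld), not a full RFC 5322 validator.

```typescript
// Prompt 1: validate email addresses with a regex and return a boolean.
function isValidEmail(email: string): boolean {
  // One or more non-space, non-@ chars, an @, a domain, a dot, and a suffix.
  const pattern = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;
  return pattern.test(email);
}

// Prompt 2: simplify a loop into a filter/map chain (hypothetical data shape).
interface Order {
  id: string;
  total: number;
  cancelled: boolean;
}

// Before: imperative loop collecting IDs of active orders over 100.
function largeOrderIdsLoop(orders: Order[]): string[] {
  const ids: string[] = [];
  for (let i = 0; i < orders.length; i++) {
    if (!orders[i].cancelled && orders[i].total > 100) {
      ids.push(orders[i].id);
    }
  }
  return ids;
}

// After: the same logic expressed as a filter/map chain.
function largeOrderIds(orders: Order[]): string[] {
  return orders.filter((o) => !o.cancelled && o.total > 100).map((o) => o.id);
}
```

Both tasks fit in a single short response and are easy to verify at a glance, which is the profile where a low-latency model is most useful.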

Best practices for prompt design

- Be specific and concise to keep execution time low.

- Prefer structured prompts (input, expected output, constraints) for more predictable results.

- Chain tasks externally — use Spark for iteration and a stronger model for final validation or optimization.
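One way to keep prompts structured is to hold the parts as separate fields and render them in a fixed order. The sketch below assumes a plain-text layout with `Task`, `Input`, `Expected output`, and `Constraints` sections; the field names, the layout, and the `renderPrompt` helper are hypothetical conventions, not a format Codex Spark requires.

```typescript
// Hypothetical structured-prompt shape: each part is kept as its own field.
interface StructuredPrompt {
  task: string;
  input: string;
  expectedOutput: string;
  constraints: string[];
}

// Render the fields in a fixed order so every request has the same shape.
function renderPrompt(p: StructuredPrompt): string {
  const lines = [
    `Task: ${p.task}`,
    `Input:\n${p.input}`,
    `Expected output: ${p.expectedOutput}`,
    "Constraints:",
    ...p.constraints.map((c) => `- ${c}`),
  ];
  return lines.join("\n");
}

// Usage: the email-validation prompt from the previous section, in structured form.
const prompt = renderPrompt({
  task: "Write a TypeScript function to validate email addresses.",
  input: "A single string argument named email.",
  expectedOutput: "A boolean: true if the string looks like an email address.",
  constraints: ["Use a regular expression", "No external dependencies", "Under 10 lines"],
});
```

Because the template is fixed, the same structure can be reused when a task is handed off to a stronger model for final validation.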

Potential challenges and limits

Codex Spark’s strength — low latency — is also its limitation. Tasks that require multi-step planning, deep context across many files, or thorough formal verification still demand larger models and more compute. Additionally, productionizing AI-generated code requires continuous validation: static analysis, security scanning, and human review remain essential.

Operationally, integrating specialized hardware can introduce new vendor dependencies and operational complexity. Teams need to weigh the UX benefits against procurement, maintenance, and scalability considerations.

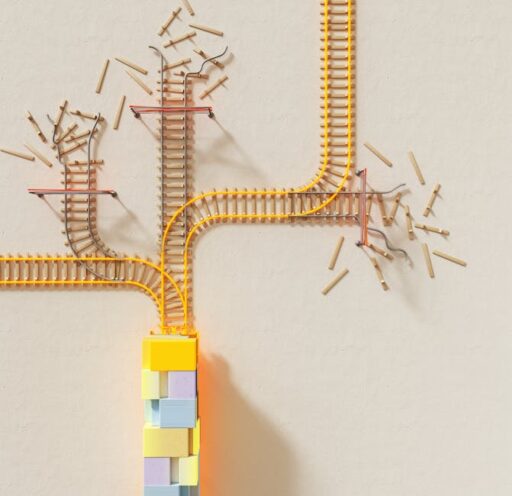

What’s next: combining modes for a hybrid developer experience

The most effective developer platforms will combine modes: a low-latency assistant for day-to-day interactions and a high-capacity model for deep reasoning, audits, and heavy lifting. Codex Spark is an example of the first mode — optimized for speed and interactivity — and sets the stage for hybrid workflows where models are orchestrated based on task complexity.

We can expect further innovations in:

- Client-side and edge inference for private, low-latency features.

- Model orchestration layers that automatically route work between fast and deep models.

- Developer tooling that blends AI suggestions with CI/CD and security automation.
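An orchestration layer can begin as a few explicit rules. The sketch below is a minimal, rule-based illustration of routing bounded, interactive work to a fast model and escalating larger or audit-sensitive work to a deeper one. The `CodingTask` fields, the thresholds, and the `"fast"`/`"deep"` tier names are assumptions for illustration; a real router would be tuned against measured latency and quality on your own workloads.

```typescript
type ModelTier = "fast" | "deep";

// Hypothetical task descriptor; a real system would derive these from the IDE context.
interface CodingTask {
  filesInScope: number; // how many files the task touches
  needsMultiStepPlan: boolean; // e.g. a cross-module migration
  auditRequired: boolean; // regulated code, security review, etc.
  promptTokens: number; // rough prompt size
}

const MAX_FAST_FILES = 2; // assumed threshold
const MAX_FAST_PROMPT_TOKENS = 4000; // assumed threshold

// Default to the fast tier; escalate when any rule says the task needs depth.
function routeTask(task: CodingTask): ModelTier {
  if (task.auditRequired || task.needsMultiStepPlan) return "deep";
  if (task.filesInScope > MAX_FAST_FILES) return "deep";
  if (task.promptTokens > MAX_FAST_PROMPT_TOKENS) return "deep";
  return "fast";
}
```

Keeping the rules explicit makes routing decisions easy to audit; a team could later replace `routeTask` with a learned classifier without changing its callers.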

How this fits into the broader agentic coding trend

Codex Spark amplifies an existing trajectory: AI that actively participates in software development rather than passively generating snippets. That trend spans agentic coding tools embedded in IDEs, multi-agent development environments, and platform integrations that turn AI from a suggestion engine into a collaborative partner.

For a deeper look at how agentic systems are reshaping developer workflows, read “Agentic Coding in Xcode 26.3: Smarter App Development” and “Agentic Software Development: The Future of AI Coding.”

Adoption checklist for engineering leaders

Use this checklist when evaluating Codex Spark or similar low-latency coding assistants:

- Define clear goals (reduce context switching, speed prototyping, improve onboarding).

- Run time-boxed pilots with representative teams and measure impact.

- Integrate security scanning, linting, and CI hooks for generated code.

- Plan model orchestration for tasks that need deeper reasoning or verification.

- Train developers on prompt design and safe usage patterns.

Conclusion and call to action

Codex Spark underscores a practical truth: not every AI problem needs maximum capacity. By optimizing for latency and interactivity, developers gain a tool that better mirrors live collaboration and rapid iteration. For teams seeking immediate productivity wins, a lightweight, inference-optimized assistant can deliver noticeable returns — provided governance and validation are in place.

Want to stay ahead on agentic coding and infrastructure trends? Sign up for our newsletter and follow our ongoing coverage to learn how low-latency models, hardware integration, and orchestration patterns are reshaping developer tools.

Try it: If you have access to the research preview, test Codex Spark on a small pilot, measure developer flow improvements, and share results with your platform team to plan wider rollout.