Orbital Data Centers: Can AI Shift Compute to Space?

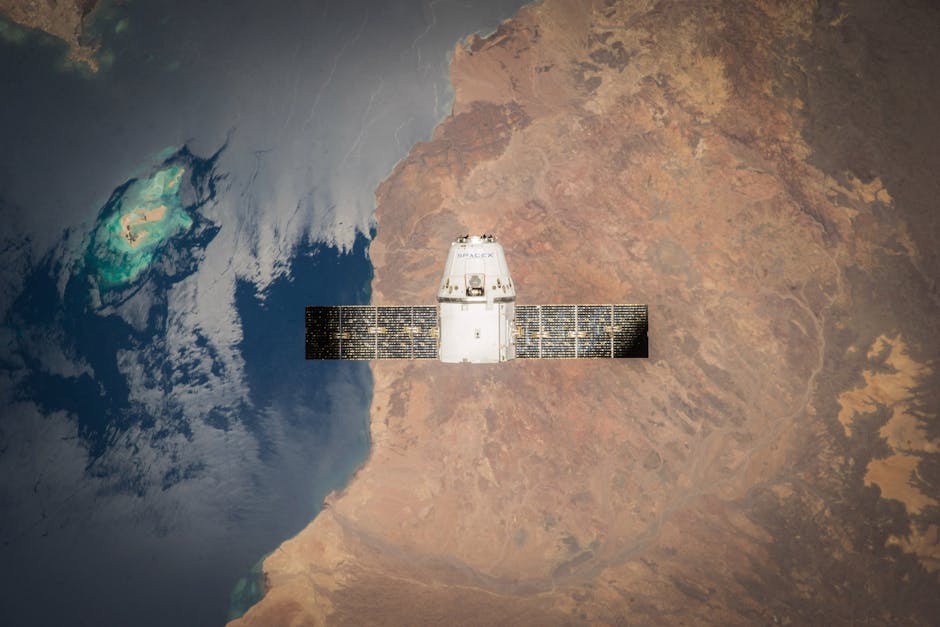

The idea of putting large-scale compute into orbit has moved from science fiction into serious industry planning. Companies are proposing constellations of AI satellites and solar-powered orbital platforms that could shift tens of gigawatts of compute off Earth. The potential upside is enormous — near-constant solar energy, reduced terrestrial footprint, and new architectural options for global, low-latency services — but the engineering, economic and operational barriers are equally massive.

Why companies are chasing space-based compute

Space-based data centers are being pitched as a way to capture several unique advantages of the orbital environment:

- Energy density: Solar panels in orbit can produce several times the energy per square meter of terrestrial installations, because they avoid atmospheric losses and weather and, in suitable orbits, spend little or no time in Earth's shadow.

- Geographic neutrality: A well-placed constellation can reach many regions with similar latency characteristics and without the political complications of local datacenter siting.

- Offloading growth pressures: Moving peak compute into orbit could relieve terrestrial grid and real-estate pressures in regions with constrained infrastructure.

All of this has prompted large companies and startups to file plans, design prototypes, and study how to scale orbital compute from tens to thousands — or even millions — of satellites.

What will it take to make orbital data centers cost-competitive?

Short answer: launch and satellite manufacturing costs must fall substantially, and thermal engineering, radiation engineering and operational architectures must all mature.

Launch economics and the Starship inflection point

Getting mass to orbit is the dominant line item in the space compute equation. Current reusable rockets can deliver payloads at a price on the order of a few thousand dollars per kilogram; project studies aiming for orbital data centers assume costs roughly an order of magnitude lower, in the low hundreds of dollars per kilogram, to make a plausible business case. That gap is why investors and engineers focus so intensely on next-generation heavy-lift vehicles and full reusability.

Even with a dramatic drop in launch cost, the market dynamics matter. Rocket operators will price missions to capture value and avoid undercutting their own margins. That means launch-price forecasts used in business models must be realistic about commercial pricing behavior, fleet cadence and availability.

Satellite manufacturing and scale

Satellite production is the other major cost driver. Today, high-performance satellites optimized to carry GPUs, power systems and thermal hardware still cost hundreds to thousands of dollars per kilogram. Mass production and component standardization can reduce per-unit cost — the same forces that brought down the cost of communications satellites — but AI-capable spacecraft require larger solar arrays, expansive radiators and robust communications links, which push mass and complexity upward.

Thermal control and energy delivery

Thermal management in vacuum is not free: with no air to carry heat away by convection, systems must reject waste heat by radiating it to space through large surfaces. Those radiators add mass and area that must be manufactured, launched and deployed. Similarly, while solar energy in orbit is more abundant, converting it into reliable, long-lived electrical power for racks of GPUs requires solar arrays that can withstand years of radiation exposure and degradation.
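
To get a feel for why radiators dominate the thermal budget, the Stefan-Boltzmann law gives a rough lower bound on radiating area. The sketch below is an idealized estimate, not a thermal design: the function name `radiator_area_m2`, the emissivity of 0.9, the 300 K radiator temperature and the 1 MW heat load are all illustrative assumptions, and it ignores absorbed sunlight, Earth's infrared and view-factor losses.

```python
# Stefan-Boltzmann constant in W / (m^2 * K^4)
SIGMA = 5.670374419e-8


def radiator_area_m2(heat_w, temp_k, emissivity=0.9, sink_temp_k=0.0, sides=1):
    """Ideal radiating area needed to reject heat_w watts at temp_k.

    Hypothetical helper: assumes a uniform radiator temperature and a
    uniform background (sink) temperature, with no solar or Earth heating.
    """
    if heat_w < 0 or temp_k <= sink_temp_k:
        raise ValueError("need heat_w >= 0 and temp_k above the sink temperature")
    if not 0 < emissivity <= 1 or sides < 1:
        raise ValueError("emissivity must be in (0, 1] and sides >= 1")
    # Net radiated power per square meter of one radiating face
    flux_w_per_m2 = emissivity * SIGMA * (temp_k**4 - sink_temp_k**4)
    return heat_w / (flux_w_per_m2 * sides)


if __name__ == "__main__":
    # Illustrative only: 1 MW of waste heat, radiator held at 300 K
    one_sided = radiator_area_m2(1e6, 300.0)
    two_sided = radiator_area_m2(1e6, 300.0, sides=2)
    print(f"one-sided: {one_sided:,.0f} m^2, two-sided: {two_sided:,.0f} m^2")
```

Under these assumptions a single-sided radiator needs roughly 2,400 square meters per megawatt. Because radiated power scales with the fourth power of temperature, running the radiator hotter shrinks it quickly, which is one reason operating temperature is a key design lever.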

Radiation and reliability

Radiation in orbit poses two problems: cumulative device degradation and transient errors such as single-event upsets (bit flips). Mitigations include shielding, rad-hardened components, redundancy and software error correction, all of which increase cost, mass or both. Designers must weigh the cost of radiation hardening against acceptable failure rates and resiliency schemes.
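
One simple form of the redundancy mentioned above is triple modular redundancy: run the same computation several times and accept the answer a majority agrees on. The sketch below is a minimal software illustration; the names `majority_vote` and `run_redundant` are hypothetical, results must be hashable, and a real system would place the replicas on separate hardware rather than calling the same function three times.

```python
from collections import Counter


def majority_vote(results):
    """Return the value produced by a strict majority of replicas."""
    if not results:
        raise ValueError("no replica results to vote on")
    value, count = Counter(results).most_common(1)[0]
    if count * 2 <= len(results):
        # No strict majority: the caller should retry or escalate
        raise RuntimeError("replicas disagree with no majority")
    return value


def run_redundant(fn, *args, replicas=3):
    """Run fn several times and vote on the outputs (illustrative only)."""
    return majority_vote([fn(*args) for _ in range(replicas)])


if __name__ == "__main__":
    good = 42
    flipped = good ^ (1 << 3)  # simulate a single bit flip in one replica
    print(majority_vote([good, flipped, good]))
```

The cost is visible in the signature: three replicas mean roughly three times the compute for one trusted result, which is the mass-versus-reliability trade in miniature.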

How do space and ground costs compare today?

Independent modeling shows that a 1 GW orbital installation can cost several times the equivalent terrestrial deployment when current launch and satellite manufacturing economics are used. Key assumptions in those models include launch price per kilogram, satellite manufacturing cost per kilogram, system lifetime and the usable energy delivered by the system. Changing any of these inputs can significantly alter the result, which is why so much attention focuses on the trajectory of launch costs and mass production yields.
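
A toy version of such a model makes the sensitivity to inputs concrete. The sketch below amortizes launch and manufacturing cost over the energy a system delivers in its lifetime. Every number in it is a placeholder rather than a figure from any study, the function name `capex_per_kwh` and the mass-per-kilowatt parameter are assumptions, and it deliberately ignores operations, financing, ground stations and the compute hardware itself.

```python
HOURS_PER_YEAR = 8766  # 365.25 days * 24 hours


def capex_per_kwh(launch_usd_per_kg, build_usd_per_kg, kg_per_kw,
                  lifetime_years, utilization=1.0):
    """Amortized launch-plus-manufacturing cost per delivered kWh, in USD.

    Hypothetical model: kg_per_kw is the satellite mass needed per kW of
    usable power; utilization is the fraction of time power is delivered.
    """
    if min(launch_usd_per_kg, build_usd_per_kg) < 0:
        raise ValueError("costs must be non-negative")
    if kg_per_kw <= 0 or lifetime_years <= 0 or not 0 < utilization <= 1:
        raise ValueError("mass, lifetime and utilization must be positive")
    capex_per_kw = (launch_usd_per_kg + build_usd_per_kg) * kg_per_kw
    delivered_kwh_per_kw = lifetime_years * HOURS_PER_YEAR * utilization
    return capex_per_kw / delivered_kwh_per_kw


if __name__ == "__main__":
    # Placeholder inputs chosen only to show how the result moves
    for launch in (2000, 200):
        cost = capex_per_kwh(launch, build_usd_per_kg=1000,
                             kg_per_kw=10, lifetime_years=5)
        print(f"launch ${launch}/kg -> ${cost:.3f} per kWh")
```

With these placeholder inputs, cutting the launch price tenfold lowers the amortized cost by only about 60 percent, because manufacturing cost then dominates. That is why both cost curves matter.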

When does orbit become competitive?

Most studies converge on a few preconditions for competitiveness:

- Launch costs drop to low hundreds of dollars per kilogram at scale.

- Satellite manufacturing costs fall via design simplification and mass production.

- Operational lifetimes are long enough (or refresh cadences fast enough) to amortize capex against delivered compute and energy.

Without those changes, orbital compute is strategically interesting but economically niche.

Which AI workloads make sense to run in orbit?

Not all AI workloads are equally suited to space deployment. Workload selection will likely follow this pattern:

- Inference-first: Low-latency inference tasks that can run on a handful of GPUs are the most plausible early use cases. These include conversational AI endpoints, voice agents, content moderation pipelines and edge-accelerated services.

- Distributed training is hard: Large-scale model training typically requires tightly coupled clusters with very high interconnect bandwidth. Achieving the same coherence across many satellites poses hard engineering problems in synchronization, comms throughput and latency.

- Specialized services: Energy-hungry or location-agnostic workloads that benefit from persistent solar access — for example, long-running geospatial analytics pipelines or certain simulation tasks — could be feasible if economics improve.

Communications and architecture constraints

Terrestrial training clusters rely on very high-bandwidth, low-latency interconnects. Current laser inter-satellite links can achieve tens to roughly a hundred gigabits per second, but matching the multi-hundred-gigabit interconnects used inside terrestrial datacenters remains a technical gap. One proposed approach is to fly satellites in very tight clusters so they can use higher-throughput transceivers, but that makes station-keeping harder and puts more demand on autonomous collision avoidance.
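
To see what the bandwidth gap means for training, consider the standard cost model for a ring all-reduce, the collective commonly used to synchronize gradients. The sketch below is a back-of-the-envelope estimate only: the function name `ring_allreduce_seconds`, the 10 GB payload, the node count and the link speeds are illustrative assumptions, and the model ignores protocol overhead, pointing outages and overlap with computation.

```python
def ring_allreduce_seconds(payload_bytes, nodes, link_gbps, hop_latency_s=0.0):
    """Estimated time for one ring all-reduce over identical links.

    Standard model: 2 * (nodes - 1) steps, each moving payload / nodes
    over one link, plus a fixed per-step latency.
    """
    if nodes < 1 or link_gbps <= 0 or payload_bytes < 0:
        raise ValueError("need nodes >= 1, link_gbps > 0, payload_bytes >= 0")
    if nodes == 1:
        return 0.0  # nothing to exchange
    steps = 2 * (nodes - 1)
    chunk_bits = payload_bytes * 8 / nodes
    seconds_per_step = chunk_bits / (link_gbps * 1e9) + hop_latency_s
    return steps * seconds_per_step


if __name__ == "__main__":
    # Illustrative only: 10 GB of gradients shared across 8 nodes
    for gbps in (100, 400):
        t = ring_allreduce_seconds(10e9, nodes=8, link_gbps=gbps)
        print(f"{gbps} Gbit/s links: {t:.2f} s per synchronization")
```

In this example each synchronization takes about 1.4 seconds at 100 Gbit/s and about 0.35 seconds at 400 Gbit/s. If that exchange happens every training step, the slower links can leave accelerators idle for much of the time.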

Engineering trade-offs: energy, mass and lifetime

Designers must make trade-offs between:

- Panel materials (radiation-hardened vs. low-cost silicon)

- Shielding level (mass penalty vs. error rates)

- Radiator size (mass and deployment complexity vs. thermal performance)

- Operational lifetime (longer life raises capex but reduces replacement cadence)

For example, cheaper silicon solar arrays are already common in new constellations but degrade faster in orbit, which shortens satellite lifetime and forces faster replacement cycles. That can make the financial case sensitive to assumptions about technology refresh cycles and the pace of chip improvement.
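
The link between panel degradation and replacement cadence can be sketched with a simple compounding model. The code below assumes array output falls by a fixed fraction each year and that a satellite is retired once output drops below a chosen fraction of its starting power. Both the constant-rate model and the example rates are assumptions for illustration, not measured values, and `years_until_power_fraction` is a hypothetical helper.

```python
import math


def years_until_power_fraction(annual_degradation, min_fraction):
    """Years until output falls to min_fraction of its initial value.

    Assumes power after t years is (1 - annual_degradation) ** t.
    """
    if not 0 < annual_degradation < 1:
        raise ValueError("annual_degradation must be between 0 and 1")
    if not 0 < min_fraction <= 1:
        raise ValueError("min_fraction must be in (0, 1]")
    return math.log(min_fraction) / math.log(1.0 - annual_degradation)


if __name__ == "__main__":
    # Placeholder rates: a fast-degrading panel versus a slow-degrading one
    for rate in (0.05, 0.01):
        years = years_until_power_fraction(rate, min_fraction=0.8)
        print(f"{rate:.0%} per year -> {years:.1f} years to reach 80% output")
```

Under these placeholder rates, the faster-degrading panel reaches the 80 percent threshold in a little over four years while the slower one lasts more than two decades, so a cheaper array only wins if the savings outweigh the earlier replacement.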

What are the near- and mid-term milestones to watch?

Several technical and market milestones will determine whether orbital data centers scale beyond prototypes:

- Operational launches of next-gen reusable heavy-lift rockets that materially lower cost-per-kilogram.

- Demonstrations of space-hardened GPU racks or accelerated inference modules operating reliably for years.

- High-volume satellite manufacturing lines that materially lower per-unit cost.

- Inter-satellite laser communications upgrades that push sustained throughput higher without prohibitive power or pointing requirements.

Progress on any of these fronts will move the industry closer to a defensible orbital compute value proposition.

How should enterprises and cloud players prepare?

Enterprises and hyperscalers can take pragmatic steps:

- Model marginal economics for inference workloads versus terrestrial hosting across different geographic regions.

- Invest in software architectures that tolerate intermittent links and transient errors, enabling hybrid ground-orbit deployments.

- Track launch and manufacturing cost curves closely and run scenario analyses tied to realistic price and cadence assumptions.
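
As one small example of software that tolerates intermittent links, a client can retry failed requests with exponential backoff and random jitter instead of failing on the first dropped connection. The sketch below is generic: `call_with_backoff` and its parameters are hypothetical names, `send` stands in for whatever transport a real deployment uses, and it assumes requests are safe to repeat.

```python
import random
import time


def call_with_backoff(send, payload, attempts=5, base_delay_s=0.5,
                      max_delay_s=30.0, sleep=time.sleep):
    """Call send(payload), retrying on ConnectionError with jittered backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return send(payload)
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure to the caller
            # Exponential backoff capped at max_delay_s, with full jitter
            ceiling = min(max_delay_s, base_delay_s * 2**attempt)
            sleep(random.uniform(0, ceiling))


if __name__ == "__main__":
    state = {"calls": 0}

    def flaky_link(message):
        # Simulated link that drops the first two attempts
        state["calls"] += 1
        if state["calls"] < 3:
            raise ConnectionError("link unavailable")
        return f"ack:{message}"

    print(call_with_backoff(flaky_link, "inference-job", base_delay_s=0.01))
```

Passing `sleep` as a parameter keeps the helper easy to test without real delays. Retries alone only suit idempotent requests; anything with side effects would also need deduplication on the receiving side.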

Can orbital data centers deliver a new frontier of compute?

Orbital data centers are not a single technology project; they are a systems-level challenge that requires breakthroughs across launch economics, satellite manufacturing, thermal and radiation engineering, and distributed system design. Inference workloads are the natural starting point because they tolerate more operational noise and require fewer tightly coupled GPUs. Training at scale in orbit remains a difficult long-term research and engineering problem.

At present, orbital data centers look like a plausible complement to terrestrial infrastructure rather than a wholesale replacement. The near-term business cases will prioritize workloads that monetize the unique properties of space: abundant solar exposure, global reach and strategic positioning.

Key takeaways

- Orbital data centers could become economically viable if launch and production costs decline significantly and if satellite lifetimes and reliability meet operational requirements.

- Inference workloads are the most likely early use cases; distributed training at scale will require major advances in inter-satellite networking and synchronization.

- Watch trajectories for launch price-per-kg, satellite manufacturing scale, and advances in space-hardened power and thermal systems.

Next steps and call to action

If you manage cloud strategy, AI infrastructure planning, or engineering roadmaps, now is the time to add scenario planning for space-based compute. Model workloads that could benefit from orbital deployment, invest in resilient distributed architectures, and monitor the supply chain and launch market closely. The question is no longer whether orbital data centers are possible — it’s when they become practical for your use case.
