Voice AI Interface: How Voice Will Become the Next UI

Voice is rapidly evolving from a novelty feature into a primary human-computer interface. Advances in speech synthesis, emotion modeling, and large language model (LLM) reasoning are converging to produce conversational experiences that feel less like typed commands and more like natural interaction. As voice-driven systems spread into wearables, cars, and ambient devices, architects, product leaders, and policymakers must understand the technical trade-offs, user experience changes, and privacy implications of voice-first design.

What makes voice the next major interface?

Short answer: improved naturalness plus deeper reasoning. A new generation of voice models does more than reproduce human-sounding audio; these models combine prosody, emotion, and intonation with LLM-style context tracking and planning. That combination unlocks interactions that are faster, hands-free, and more ambient than tapping a screen.

- Natural expressiveness: Modern speech models capture subtleties like emphasis, pacing, and sentiment, making responses feel human and context-aware.

- Reasoning integration: When voice synthesis is paired with reasoning models, systems can answer multi-step questions and follow conversational context instead of handling only isolated commands.

- Persistent context: Memory and user preference storage allow interactions to feel personalized across sessions without repeated explicit prompts.

- Form-factor expansion: Voice fits where screens cannot—headphones, smart glasses, cars, and home devices—making it a logical interface for ambient computing.

How are voice models merging speech and reasoning?

Voice intelligence is moving away from two separate components, speech synthesis and recognition on one side and language reasoning on the other, toward tightly integrated stacks. The result is systems that understand nuance in spoken requests, maintain context across turns, and use tone and timing deliberately in their responses.

Emotion, intonation, and meaning

Speech models now control prosody and affect, which shape how users interpret information. The same factual reply delivered in a warm, empathetic tone can increase trust and effectiveness in customer support, telemedicine, and coaching. Designers should treat voice as a layered medium in which semantic content, pragmatic intent, and vocal delivery are all design variables.
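Many text-to-speech engines accept the W3C Speech Synthesis Markup Language (SSML), which turns the vocal delivery layer into an explicit, reviewable artifact. The minimal Python sketch below wraps the same sentence in different prosody settings. The preset names (`empathetic`, `brisk`) and their values are illustrative assumptions, and engine support for individual SSML attributes varies, so check your vendor's documentation.

```python
from dataclasses import dataclass
from typing import Optional
from xml.sax.saxutils import escape


@dataclass(frozen=True)
class Delivery:
    """Vocal delivery expressed as SSML 1.1 <prosody> attribute values."""
    rate: str    # percentage of the default speaking rate, e.g. "90%"
    pitch: str   # relative change such as "-2st" (semitones) or a label such as "medium"
    volume: str  # label such as "soft", "medium", "loud"


# Hypothetical presets: the mapping from design intent to prosody values
# is an illustrative assumption, not a vendor recommendation.
PRESETS = {
    "empathetic": Delivery(rate="90%", pitch="-2st", volume="soft"),
    "brisk": Delivery(rate="110%", pitch="medium", volume="medium"),
}


def to_ssml(text: str, preset: str, emphasize: Optional[str] = None) -> str:
    """Wrap the same semantic content in the vocal delivery named by `preset`."""
    d = PRESETS[preset]
    body = escape(text)  # escape &, <, > so the document stays well-formed
    if emphasize:
        word = escape(emphasize)
        # Emphasize only the first occurrence of the chosen text.
        body = body.replace(word, f'<emphasis level="moderate">{word}</emphasis>', 1)
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" '
        'xml:lang="en-US">'
        f'<prosody rate="{d.rate}" pitch="{d.pitch}" volume="{d.volume}">'
        f"{body}</prosody></speak>"
    )
```

For example, `to_ssml("Your test results are ready.", "empathetic", emphasize="ready")` and `to_ssml("Your test results are ready.", "brisk")` carry identical text; only the delivery markup differs, which keeps content review and tone review separate.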

Persistent memory and agentic behavior

Beyond single-turn exchanges, voice systems are adopting persistent memory to maintain preferences, history, and contextual signals. This makes interactions less effortful—users can rely on the system to remember prior choices, routines, and constraints. As agents gain autonomy, platforms must implement guardrails so voice-driven agents act predictably and respect user boundaries.
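One way to keep memory predictable is to scope it, give every entry an expiry, and log each access. The sketch below is a minimal in-memory Python illustration under those assumptions; the class, method, and scope names are hypothetical, and a production system would need durable, encrypted storage.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Optional, Tuple


@dataclass
class MemoryItem:
    value: str
    scope: str         # hypothetical scope label, e.g. "preferences"
    expires_at: float  # epoch seconds after which the item is no longer used


@dataclass
class MemoryStore:
    """Minimal in-memory sketch of scoped, expiring, auditable assistant memory."""
    allowed_scopes: FrozenSet[str]  # scopes the user has opted into
    items: Dict[str, MemoryItem] = field(default_factory=dict)
    audit_log: List[Tuple[float, str, str]] = field(default_factory=list)

    def _now(self, now: Optional[float]) -> float:
        return time.time() if now is None else now

    def remember(self, key: str, value: str, scope: str, ttl_s: float,
                 now: Optional[float] = None) -> bool:
        t = self._now(now)
        if scope not in self.allowed_scopes:
            # Guardrail: never store data in a scope the user has not enabled.
            self.audit_log.append((t, "rejected", key))
            return False
        self.items[key] = MemoryItem(value, scope, t + ttl_s)
        self.audit_log.append((t, "stored", key))
        return True

    def recall(self, key: str, now: Optional[float] = None) -> Optional[str]:
        t = self._now(now)
        item = self.items.get(key)
        if item is None:
            return None
        if item.expires_at <= t:
            del self.items[key]  # purge expired entries lazily on access
            self.audit_log.append((t, "expired", key))
            return None
        self.audit_log.append((t, "read", key))
        return item.value

    def forget_scope(self, scope: str, now: Optional[float] = None) -> int:
        """User-facing deletion: drop every item in a scope and return the count."""
        t = self._now(now)
        doomed = [k for k, item in self.items.items() if item.scope == scope]
        for k in doomed:
            del self.items[k]
            self.audit_log.append((t, "deleted", k))
        return len(doomed)
```

The audit log records rejections and deletions as well as reads, so a user-facing "what do you remember about me?" view can be built from the same data.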

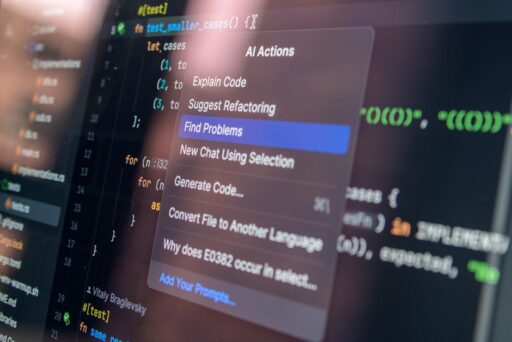

On-device vs cloud: What hybrid architectures look like

High-fidelity voice synthesis and large-context reasoning have historically leaned on cloud compute. But latency, cost, connectivity, and privacy concerns push developers toward hybrid models that combine cloud and on-device processing.

Benefits of hybrid voice architectures

- Latency reduction: On-device models handle immediate responses and noise-robust recognition to keep conversations snappy.

- Privacy-preserving: Sensitive audio and personal context can be processed locally, with selective uplink for heavier reasoning.

- Offline capability: Devices remain functional in low-connectivity scenarios.

- Cost optimization: Distributing inference between device and cloud reduces cloud compute costs while preserving high-quality outputs for complex tasks.
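A hybrid system needs a routing policy that decides, per request, where inference runs. The following Python sketch assumes a hypothetical set of intents simple enough for an on-device model and a rough reasoning-step estimate produced upstream; real routers typically also weigh battery, model confidence, and measured latency.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    DEVICE = "device"
    CLOUD = "cloud"


@dataclass(frozen=True)
class VoiceRequest:
    intent: str                 # label from an upstream intent classifier (assumed)
    reasoning_steps: int        # rough complexity estimate (assumed available)
    has_personal_context: bool  # e.g. health details or contacts in the request


@dataclass(frozen=True)
class Decision:
    target: Target
    redact_before_uplink: bool


# Hypothetical policy values for illustration only.
LOCAL_INTENTS = frozenset({"set_timer", "adjust_volume", "play_pause"})
MAX_LOCAL_STEPS = 2


def route(req: VoiceRequest, online: bool) -> Decision:
    """Decide where a request runs under a simple hybrid policy."""
    if not online:
        # Offline capability: degrade to the on-device model rather than fail.
        return Decision(Target.DEVICE, False)
    if req.intent in LOCAL_INTENTS or req.reasoning_steps <= MAX_LOCAL_STEPS:
        # Latency and cost: short commands never leave the device.
        return Decision(Target.DEVICE, False)
    # Heavy reasoning goes to the cloud; personal context is stripped first.
    return Decision(Target.CLOUD, req.has_personal_context)
```

Keeping the policy in one small, pure function makes it easy to unit-test and to audit which requests can ever leave the device.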

For architecture guidance and examples of on-device work, see our reporting on on-device AI processors and on how voice-specific platforms, such as the VoiceRun platform for enterprises, are evolving.

What are the privacy and surveillance risks?

Making voice persistent and embedded in daily life raises acute privacy questions. Continuous listening, long-term storage of voiceprints, and contextual memory create datasets that can reveal sensitive information about health, associations, routines, and locations.

Key privacy concerns

- Continuous capture: Always-on microphones raise the risk of inadvertent recording and retention of private moments.

- Profiling and inference: Voice data and interaction history can be used to build detailed behavioral profiles.

- Data sharing and misuse: Aggregated voice or derived transcripts may be shared, sold, or repurposed in ways users did not anticipate.

Designers must weigh the product benefits of persistent voice context against the moral and regulatory costs. Techniques such as local-first processing, federated learning, and strict data minimization reduce risk. Transparent controls—clear affordances for when the microphone is active and easy access to delete memory—are table stakes.
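Data minimization can start with stripping obvious identifiers from transcripts before they are stored or sent to the cloud. This Python sketch uses two deliberately simple regular expressions (email addresses and 10-digit phone numbers); it illustrates the pattern and is not a complete PII detector.

```python
import re
from typing import Dict, Tuple

# Deliberately simple, illustrative patterns; real PII detection needs much
# broader coverage (names, addresses, international numbers, account IDs).
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[ .-]?\d{3}[ .-]?\d{4}\b"),
}


def minimize_transcript(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace matched identifiers with placeholders and count the removals."""
    counts: Dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

Running a step like this on the device, before any uplink, means the redacted values never reach cloud logs in the first place.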

How should companies prepare for a voice-first future?

Organizations that plan now will gain a competitive advantage. Product and engineering teams should adopt the following practical steps:

- Map voice-first journeys: Identify scenarios where hands-free or ambient interaction improves outcomes—driving, fitness, home automation, and accessibility.

- Design for multimodality: Combine voice with visual confirmations or haptic feedback when safety or precision matters.

- Implement hybrid inference: Partition workloads between device and cloud for latency, privacy, and resilience.

- Build transparent controls: Provide clear privacy preferences, on/off toggles, and memory management tools.

- Set voice UX standards: Define tone, fallback strategies, and explicit confirmation patterns for sensitive actions like payments or data sharing.

- Invest in evaluation: Measure task success, user trust, and perceived naturalness with real-world voice testing.

- Plan for regulation: Monitor evolving law and industry guidelines on biometric data, recordings, and consent.
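For explicit confirmation of sensitive actions, a common approach is to read the action back and require a clear affirmative answer within a short window, treating anything ambiguous as a cancellation. The Python sketch below illustrates that flow; the action names, affirmative phrases, and 15-second window are hypothetical choices.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy values for illustration.
SENSITIVE_ACTIONS = frozenset({"send_payment", "share_data"})
AFFIRMATIVE = frozenset({"yes", "confirm", "yes please"})
CONFIRM_WINDOW_S = 15.0


@dataclass
class Pending:
    action: str
    summary: str
    asked_at: float


class ConfirmationGate:
    """Require an explicit, timely 'yes' before executing sensitive actions."""

    def __init__(self) -> None:
        self.pending: Optional[Pending] = None

    def request(self, action: str, summary: str, now: float) -> Optional[str]:
        """Return None if the action may run now, else a read-back prompt."""
        if action not in SENSITIVE_ACTIONS:
            return None
        self.pending = Pending(action, summary, now)
        return f"Just to confirm: {summary}. Say 'confirm' to go ahead."

    def respond(self, utterance: str, now: float) -> str:
        """Resolve the pending action: 'execute', 'cancelled', 'expired', or 'nothing_pending'."""
        pending, self.pending = self.pending, None  # each prompt gets exactly one answer
        if pending is None:
            return "nothing_pending"
        if now - pending.asked_at > CONFIRM_WINDOW_S:
            return "expired"
        if utterance.strip().lower().rstrip(".!") in AFFIRMATIVE:
            return "execute"
        return "cancelled"  # anything ambiguous counts as a no
```

Defaulting to cancellation on an unclear reply trades a little convenience for safety, which is usually the right trade for payments or data sharing.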

How will hardware change as voice takes center stage?

As voice becomes a primary interface, device design shifts. Headphones, earbuds, smart glasses, and car consoles will integrate always-available microphones, low-power on-device models, and privacy hardware indicators. This creates new opportunities for companies building voice-enabled wearables and consumer electronics.

For coverage of wearables and the business case for voice-enabled glasses, see our analysis of AI smart glasses. Financial momentum behind voice infrastructure is strong; investment trends show robust capital flow into voice platforms and startups (read our coverage of voice AI funding), which accelerates product maturity and hardware integrations.

Will voice replace screens?

No—screens will remain essential for many tasks like visual design, gaming, and detailed data review. Voice will, however, displace many routine interactions and become the preferred medium for ambient, hands-free, and accessibility-focused use cases.

Think of voice as a complementary surface rather than a wholesale replacement. Successful products will be multimodal: voice for rapid, low-friction exchanges; screens for depth, precision, and visual confirmation.

Design principles for trustworthy voice experiences

To build voice-first products users trust, follow these principles:

- Least privilege: Only collect what you need and minimize persistent storage.

- Contextual clarity: Make it obvious when the device is listening and when memory will be used.

- Human-centered tone: Choose voice affect to match user needs—empathy for healthcare, brisk clarity for task completion.

- Fail-safe confirmations: For high-risk actions, require explicit confirmation or multimodal verification.

- Auditability: Allow users to review and delete audio and derived data easily.

Opportunities across industries

Voice-first interfaces unlock value in multiple sectors:

- Healthcare: Hands-free charting, patient triage, and empathetic coaching.

- Automotive: Safer in-vehicle controls and contextual route guidance.

- Enterprise productivity: Ambient assistants that summarize meetings and surface action items.

- Accessibility: Improved access for users with visual or motor impairments.

- Consumer devices: Wearables and home assistants that reduce friction for everyday tasks.

How to evaluate voice platform vendors

When selecting voice stack partners, evaluate these dimensions:

- Audio quality and expressiveness: Can the model control prosody and emotion?

- Context and memory management: Does the platform support scoped, auditable memories?

- On-device footprint: Are there compact models for local inference?

- Security and privacy features: Encryption, local processing, and deletion APIs.

- Integration capabilities: SDKs for mobile, wearables, and cloud pipelines.
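These dimensions can be turned into a simple weighted scorecard so that trade-offs are explicit during vendor selection. The weights and the 1 to 5 rating scale in this Python sketch are assumptions; a healthcare deployment, for example, might weight security and privacy more heavily.

```python
from typing import Dict, List

# Hypothetical weights; they should reflect your own product priorities.
WEIGHTS: Dict[str, float] = {
    "audio_expressiveness": 0.25,
    "memory_management": 0.20,
    "on_device_footprint": 0.20,
    "security_privacy": 0.25,
    "integration": 0.10,
}


def weighted_score(ratings: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine per-dimension ratings (assumed 1 to 5) into one weighted average."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[k] * ratings[k] for k in weights) / sum(weights.values())


def rank_vendors(vendors: Dict[str, Dict[str, float]]) -> List[str]:
    """Return vendor names ordered from highest to lowest weighted score."""
    return sorted(vendors, key=lambda name: weighted_score(vendors[name]), reverse=True)
```

A weighted average can hide hard requirements, so a team might also disqualify any vendor that lacks deletion APIs regardless of its overall score.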

Conclusion

Voice AI interfaces are not a fleeting trend—they represent a fundamental shift in how humans will interact with intelligent systems. By combining expressive speech models, persistent context, hybrid architecture, and careful privacy design, builders can deliver experiences that are faster, more natural, and more ambient than screen-first approaches. But adoption requires rigorous attention to user control, auditability, and regulatory alignment.

If you’re building voice features or planning product strategy, start with clear use cases, prioritize hybrid privacy-preserving architectures, and test with real users in everyday environments. The companies that get voice UX, memory management, and trust right will lead the next wave of ambient computing.

Call to action: Ready to design voice-first experiences that users trust? Subscribe to Artificial Intel News for weekly analysis, and download our checklist for building privacy-first voice products to get started.